
System Overview

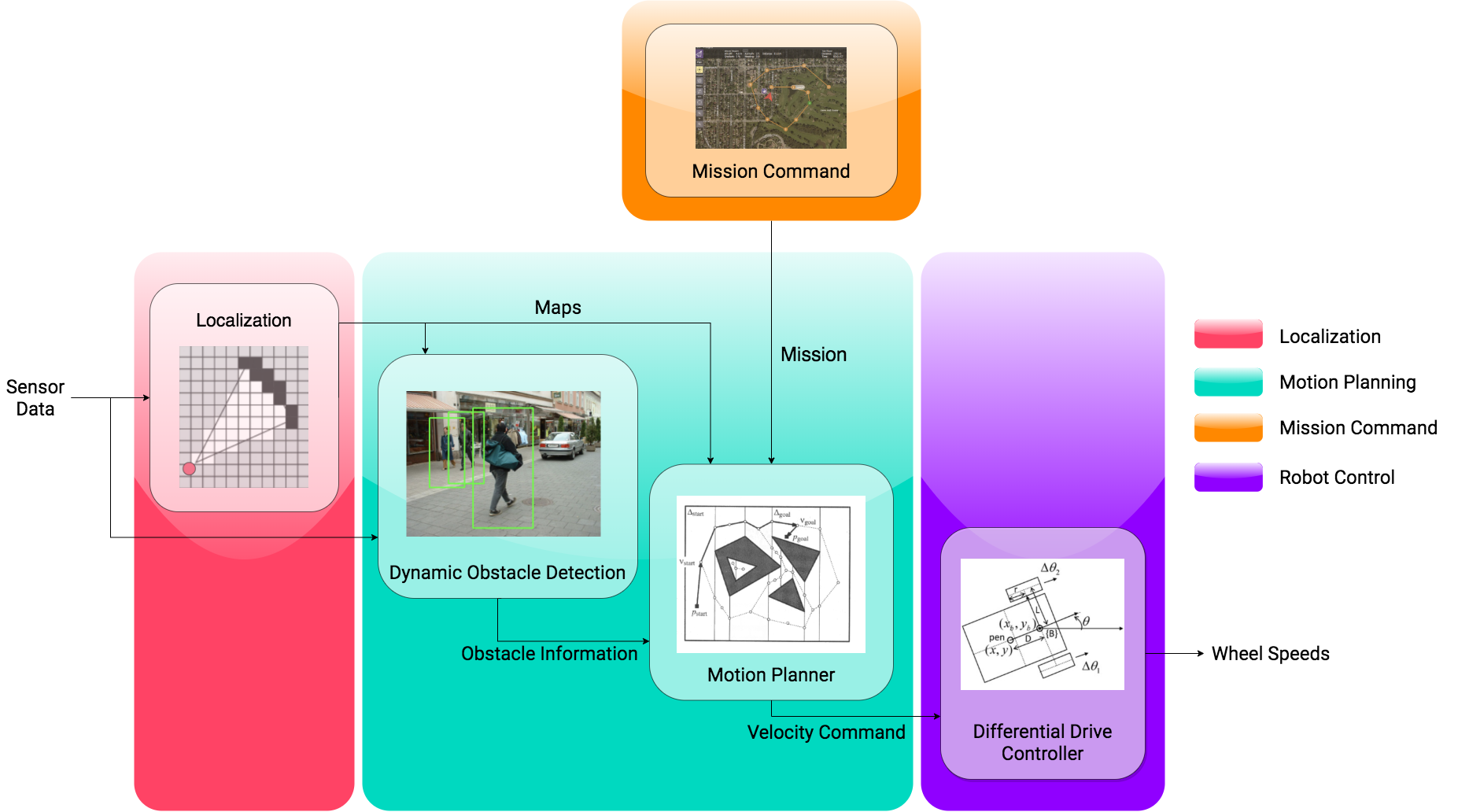

The autonomy of a system depends largely on its "brain". For this reason, the system is most easily described at a high level by the basic software components of an autonomous mobile robot. Although the hardware components of this system are very important, they are more of a "means to an end": they give the system’s software access to the information it needs to make meaningful decisions safely. This is why our entire system is designed around the basic software components that make up an autonomous ground vehicle. These components and their interactions are illustrated in the following figure. This architecture is derived from previous autonomous mobile robot projects (e.g., TIGRE (Martins et al. 2013) and CaRINA (Fernandes 2012)) and from papers on autonomy (Gobillot, Lesire, and Doose 2014; Littlefield et al. 2014; Becker, Helmle, and Pink 2016).

Proposed system software components

The diagram is color-coded with the following categories:

Localization: This refers to the robot using sensor data to determine its location with respect to its environment (Huang and Dissanayake 2016). It also involves building a map of the environment the robot traverses. This information is often represented as a 2D occupancy grid. For example, in the localization block, the red dot represents the robot’s location, white represents free space, black represents obstacles, and grey represents unexplored areas.
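To make the occupancy-grid representation concrete, here is a minimal Python sketch of a grid whose cells are unknown, free, or occupied, matching the grey, white, and black cells in the localization block. The class and method names are hypothetical, the cell values loosely follow the ROS `nav_msgs/OccupancyGrid` convention (-1 unknown, 0 free, 100 occupied), and the 0.1 m default resolution is an assumption; the localization package actually used may encode its map differently.

```python
from enum import IntEnum


class Cell(IntEnum):
    """Cell states, loosely following the ROS nav_msgs/OccupancyGrid values."""
    UNKNOWN = -1     # grey: not yet explored
    FREE = 0         # white: free space
    OCCUPIED = 100   # black: obstacle


class OccupancyGrid:
    """Minimal 2D occupancy grid; resolution is metres per cell (assumed)."""

    def __init__(self, width: int, height: int, resolution: float = 0.1):
        self.width = width
        self.height = height
        self.resolution = resolution
        # Every cell starts out unexplored.
        self.cells = [[Cell.UNKNOWN] * width for _ in range(height)]

    def world_to_cell(self, x: float, y: float) -> tuple[int, int]:
        """Convert metric coordinates (origin at cell (0, 0)) to (row, col)."""
        return int(y // self.resolution), int(x // self.resolution)

    def mark(self, x: float, y: float, state: Cell) -> None:
        """Set the cell containing (x, y); points outside the map are ignored."""
        row, col = self.world_to_cell(x, y)
        if 0 <= row < self.height and 0 <= col < self.width:
            self.cells[row][col] = state

    def is_free(self, row: int, col: int) -> bool:
        return self.cells[row][col] == Cell.FREE
```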

Motion Planning: This refers to the robot generating a path that moves it from its starting location to a goal without colliding with any obstacles (Burgard et al., n.d.). This is achieved by using maps and obstacle information to generate a feasible trajectory and output velocity commands for the robot. For simplicity, we have grouped obstacle detection with motion planning, and this grouping drives the considerations we make throughout the document.
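As an illustration of the path-generation step, the sketch below runs A* search over a small occupancy grid (1 = obstacle, 0 = free). A* is a swapped-in example of a common grid planner, not necessarily the algorithm used by Propbot’s motion planner, and the function name `astar` is hypothetical. The sketch only produces a collision-free sequence of cells; turning that path into a smooth trajectory and velocity commands is a separate step not shown here.

```python
import heapq
from typing import Optional

Cell = tuple[int, int]


def astar(grid: list[list[int]], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """Shortest 4-connected path from start to goal, or None if unreachable.

    grid[r][c] == 1 marks an obstacle and 0 marks free space. Every move costs 1,
    so the Manhattan distance is an admissible, consistent heuristic.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    # Heap entries are (f = g + h, g, cell); the smallest f is expanded first.
    open_heap = [(h(start), 0, start)]
    came_from = {start: None}
    g_cost = {start: 0}

    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            path = []
            node = current
            while node is not None:  # follow parent links back to the start
                path.append(node)
                node = came_from[node]
            return path[::-1]
        if g > g_cost[current]:
            continue  # outdated entry; a cheaper route was already found
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = current
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None
```

For example, on the grid `[[0, 0, 0], [1, 1, 0], [0, 0, 0]]` the only way from the top-left to the bottom-left cell is around the wall through the right-hand column.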

Mission Command: This refers to the user-facing software component that allows a mission to be created and transmitted to the robot. A typical mission consists of waypoints, which are points of interest for data collection. The mission is sent to the robot’s motion planner so that the robot can set its goal locations accordingly.

Robot Control: This refers to taking a velocity command and generating the wheel speeds for the robot that will make the robot move in the desired direction at the desired speed.
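A common way to turn a velocity command into wheel speeds for a robot with fixed wheels on each side is skid-steer (differential-drive) kinematics. The sketch below assumes that the three wheels on each side of the robot turn at the same speed and ignores wheel slip; the `DriveParams` values and all names are placeholders, not measured dimensions or identifiers from the project.

```python
from dataclasses import dataclass


@dataclass
class DriveParams:
    track_width_m: float = 0.8    # hypothetical distance between left and right wheels
    wheel_radius_m: float = 0.15  # hypothetical wheel radius


def wheel_speeds(v: float, omega: float, p: DriveParams) -> dict[str, float]:
    """Map a body velocity command to wheel angular speeds in rad/s.

    v is forward speed (m/s); omega is yaw rate (rad/s, counter-clockwise positive).
    Idealized skid-steer model: each side's three wheels share one speed.
    """
    v_left = v - omega * p.track_width_m / 2.0    # linear speed of the left side
    v_right = v + omega * p.track_width_m / 2.0   # linear speed of the right side
    w_left = v_left / p.wheel_radius_m            # convert m/s to rad/s
    w_right = v_right / p.wheel_radius_m
    return {
        "front_left": w_left, "middle_left": w_left, "rear_left": w_left,
        "front_right": w_right, "middle_right": w_right, "rear_right": w_right,
    }
```

A pure rotation command (v = 0, omega > 0) drives the left side backward and the right side forward at equal speed, so the robot turns in place.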

These components are used in the rest of the document to categorize the different aspects of our proposed system. The cited projects (Martins et al. 2013; Fernandes 2012) use categories similar to ours to describe their software. This is because each component has well-defined inputs and outputs that do not change regardless of the specific algorithms or packages used to implement it. For example, localization will always output a map with the robot’s position, regardless of the specific localization package used.
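The idea that each component has fixed inputs and outputs can be expressed as an interface. The sketch below uses Python’s `typing.Protocol` to define hypothetical `Localization` and `MotionPlanner` interfaces; any implementation with matching methods can be swapped in without changing the glue code that connects them. All class and function names here are illustrative, not the project’s actual API.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Pose:
    x: float        # metres
    y: float        # metres
    heading: float  # radians


@dataclass
class VelocityCommand:
    linear: float   # m/s
    angular: float  # rad/s


class Localization(Protocol):
    def update(self, sensor_data: dict) -> tuple[list[list[int]], Pose]:
        """Return (occupancy map, robot pose) for the latest sensor data."""
        ...


class MotionPlanner(Protocol):
    def plan(self, grid: list[list[int]], pose: Pose, goal: Pose) -> VelocityCommand:
        """Return the next velocity command toward the goal."""
        ...


def autonomy_step(loc: Localization, planner: MotionPlanner,
                  sensor_data: dict, goal: Pose) -> VelocityCommand:
    # The glue code depends only on the interfaces, not on any particular package.
    grid, pose = loc.update(sensor_data)
    return planner.plan(grid, pose, goal)


class FakeLocalization:
    """Placeholder implementation: reads the pose straight from sensor_data."""
    def update(self, sensor_data: dict) -> tuple[list[list[int]], Pose]:
        return [[0]], Pose(sensor_data.get("x", 0.0), sensor_data.get("y", 0.0), 0.0)


class StopPlanner:
    """Placeholder implementation: always commands the robot to stand still."""
    def plan(self, grid: list[list[int]], pose: Pose, goal: Pose) -> VelocityCommand:
        return VelocityCommand(0.0, 0.0)
```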

Dividing our project into these subsystems has helped us modularize our software and hardware and evaluate our priorities. For example, it is useful to know that mission command must interface with motion planning but is unconcerned with what localization is doing. Using these distinct categories, we are able to define the selection criteria for the relevant hardware and software.

Our project has focused on the system-level design of the hardware and software components of Propbot’s autonomy package. In addition, our contributions include the development of the key software components of the autonomy package, as well as a holistic simulation environment in which to test the software.

More specifically, our contributions are as follows:

Hardware

Evaluation of the legacy electro-mechanical system and proposal of new electrical system components.

Evaluation of the legacy sensor suite and selection of a new autonomy sensor suite.

Design of a comprehensive connection diagram outlining system integration, as well as sensor mounting guidelines for the robot.

Development of a vehicle interface firmware stack for interfacing with the drive system, the autonomy computer, and peripheral sensors.

Demonstration of tele-operation, close-range obstacle avoidance, and the vehicle firmware on a surrogate robot (a small hobby remote-control car).

Software

Development of an autonomy package so that a robot can safely follow a programmed route across campus.

Integration of sensors for situational awareness and position estimation that improve the robot’s safety and navigation features.

Development of a mission command centre graphical user interface (GUI) package so that the user can configure a mission and monitor its progress remotely.

Development of a simulation package that integrates the sensor suite, the robot’s 3D model, and a custom simulation environment that mimics the robot’s real-world operational zones.

As with all autonomous systems, safety is of the utmost priority. "Safety" is not one of the categories illustrated in the above section because it is an important consideration across all subsystems. Throughout our design document, statements labelled "Safety Feature" highlight the safety considerations behind certain design decisions. These decisions are explained in.

The following is a list of safety features and the page numbers on which they are mentioned:

Propbot is a six-wheel autonomous robot developed by the UBC Radio Science Lab (RSL). The RSL conducts extensive research on wireless systems, which requires a large number of wireless data measurements. Researchers can use the robot to carry the measurement equipment and avoid recording location data manually.

Currently, the robot is powered by two 36 V lithium-ion batteries connected in parallel. Battery power is supplied to four DC/DC step-down converters and an emergency stop (E-stop) button. The six wheels are driven by six independent brushless DC motors, but only four of them receive power from the DC/DC converters.

The user can send commands to the vehicle interface over Ethernet. After receiving these commands, the vehicle interface sends Pulse Width Modulation (PWM) signals to the wheel motors.
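As a rough illustration of the PWM step, many hobby-grade motor controllers accept an RC-style pulse of about 1000 to 2000 µs repeated at around 50 Hz, with 1500 µs meaning stop. Whether Propbot’s motor drivers use this scheme is an assumption, as are the function names and defaults below; the sketch only shows how a normalized speed command could be clamped and mapped to a pulse width and duty cycle.

```python
def speed_to_pulse_us(speed: float, neutral_us: int = 1500, span_us: int = 500) -> int:
    """Map a normalized speed in [-1, 1] to a pulse width in microseconds.

    Assumed convention: 1000 us = full reverse, 1500 us = stop, 2000 us = full forward.
    Out-of-range commands are clamped so a bad command never exceeds full speed.
    """
    speed = max(-1.0, min(1.0, speed))
    return round(neutral_us + speed * span_us)


def pulse_to_duty(pulse_us: int, freq_hz: float = 50.0) -> float:
    """Duty cycle (0..1) of a pulse_us-microsecond pulse repeated at freq_hz."""
    period_us = 1_000_000 / freq_hz
    return pulse_us / period_us
```

At 50 Hz the period is 20 000 µs, so the 1500 µs stop pulse corresponds to a 7.5 % duty cycle.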

However, the electrical system is not currently safe or operational: several components, including the DC/DC converters and the brushless motors, are not performing as expected, and the wires connecting the system components can easily come loose. These faults have caused the E-stop button and the vehicle drive module to malfunction. For more information on the existing robot, please refer to the Current Robot State page.

This figure shows our proposed system at a high level and each component is described below.

High-level system architecture

Vehicle Interface

The vehicle comprises the mechanical system along with the motors, the motor drivers, the vehicle interface/control module (which may be one or more devices), the RC link, the E-stop, and the power system. The choice to separate the vehicle interface from the autonomy computer is based on the following factors:

The autonomy computer will process all of the situational awareness sensor data and run the autonomy algorithms, which requires a lot of processing power. If the autonomy computer were responsible for the emergency stop and motor driving, latency caused by the other processes would lengthen the vehicle’s reaction time and could cause collisions.

The vehicle interface allows the remote controller to interface at the lowest level. This decouples manual control from the autonomy computer, which does not run a real-time operating system and thus has no real-time guarantees.

The close-range sensors should be able to halt the robot’s movement as quickly as possible. Feeding the sensor network into the vehicle interface ensures that sensor triggers are prioritized. This also satisfies requirement EF5.1 for manual control.

The autonomy computer is not suitable for interfacing with devices via GPIO.

Using a dedicated vehicle interface ensures that the system that drives the motors is real-time safe. Since the autonomy computer runs Linux, it is not real-time safe, and its processes could fail during operation. A separate vehicle interface removes the issues caused by dying software processes, latency, and low-frequency updates from the autonomy software.
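One way the vehicle interface can guard against a dying or lagging autonomy process is a command watchdog: if no fresh velocity command arrives within a timeout, it falls back to a stop command. The Python sketch below shows only the logic (the real firmware would run on the vehicle interface hardware); the class name and the 0.25 s timeout are hypothetical, and the clock is injectable so the behaviour can be tested without waiting.

```python
import time


class CommandWatchdog:
    """Hypothetical timeout on velocity commands from the autonomy computer.

    If commands stop arriving (process crash, network latency), output()
    returns a zero-speed command instead of repeating the last one.
    """

    def __init__(self, timeout_s: float = 0.25, clock=time.monotonic):
        self.timeout_s = timeout_s
        self.clock = clock
        self.last_cmd = (0.0, 0.0)  # (linear m/s, angular rad/s)
        self.last_time = None       # no command received yet

    def on_command(self, linear: float, angular: float) -> None:
        """Record a new command and the time it arrived."""
        self.last_cmd = (linear, angular)
        self.last_time = self.clock()

    def output(self) -> tuple[float, float]:
        """Command to forward to the motors right now."""
        if self.last_time is None or self.clock() - self.last_time > self.timeout_s:
            return (0.0, 0.0)  # missing or stale command: stop
        return self.last_cmd
```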

Close Range Sensors

As mentioned above, the close-range sensors are active even in manual mode because they are connected directly to the vehicle interface, thus bypassing the autonomy computer. This satisfies requirement EF5.1.

The choice to connect close-range sensors directly to the vehicle interface ensures that there is "last-minute" collision avoidance in the event of miscalculations by the autonomy stack or erroneous remote commands by the user. This ensures that on the lowest level, there is some form of automatic collision avoidance for small distances.
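The last-minute collision avoidance described above can be sketched as a filter that the vehicle interface applies to every drive command, whether it comes from the autonomy stack or the RC link. The 0.5 m stop distance, the split into front and rear sensors, and the function name are assumptions for illustration.

```python
def apply_close_range_guard(linear: float, angular: float,
                            front_ranges_m: list[float], rear_ranges_m: list[float],
                            stop_distance_m: float = 0.5) -> tuple[float, float]:
    """Hypothetical last-minute collision guard on the vehicle interface.

    Blocks driving toward any obstacle closer than stop_distance_m, regardless
    of whether the command came from autonomy or the remote controller.
    Motion away from the obstacle (and turning in place) is still allowed.
    """
    front_blocked = any(r < stop_distance_m for r in front_ranges_m)
    rear_blocked = any(r < stop_distance_m for r in rear_ranges_m)
    if (linear > 0 and front_blocked) or (linear < 0 and rear_blocked):
        return (0.0, 0.0)  # override the command: stop the robot
    return (linear, angular)
```

Letting the robot back away from a close obstacle, rather than freezing entirely, is a design choice in this sketch; a stricter guard could block all motion.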

Sensors

These include the situational awareness and position estimation sensors which will provide the robot with an understanding of its surroundings and its current state. These sensors will be used for localization, motion planning, and robot control.

E-Stop

Note: this is not a power-off switch and does NOT cut power to any systems.

The E-Stop serves as an emergency stop for the vehicle. As shown, the emergency stopping capabilities can be activated by the vehicle controller. Once the E-stop button is pressed, the vehicle controller stops sending PWM signals to the wheel motors and activates the brake inside each motor. However, a potential hazard remains: if the E-stop is pressed while the robot is on a downhill slope, the motor brakes engage but the robot may keep sliding. Because of our time constraints, a mechanical brake system is left for future capstone teams to implement on the robot.

Although the current E-stop is not guaranteed to fully stop the robot, especially when the robot is on a downhill surface, it is still useful on many surfaces, given that the batteries are able to supply enough current to the motors. An on-board E-stop allows road users (an important stakeholder for our project) to press the button on the robot itself if they notice unwanted behaviour. This concern is taken into account by project constraint C1.5 which limits the operation of the robot to flat terrain.
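The E-stop behaviour described above (stop sending PWM signals, engage the motor brakes) can be sketched as a latched state on the vehicle controller. Latching until an explicit reset, and allowing the reset only once the button is released, are assumptions added for illustration, as are all names below. This logic cannot address the downhill-slip hazard, which needs a mechanical brake.

```python
from dataclasses import dataclass


@dataclass
class MotorOutputs:
    pwm_enabled: bool
    brake_engaged: bool
    left_speed: float
    right_speed: float


class EStopLatch:
    """Hypothetical E-stop handling on the vehicle controller.

    Once the button is pressed, the stop is latched: PWM output is disabled and
    the motor brakes are engaged until reset() succeeds, even after release.
    """

    def __init__(self):
        self.latched = False

    def update(self, button_pressed: bool, left: float, right: float) -> MotorOutputs:
        if button_pressed:
            self.latched = True
        if self.latched:
            return MotorOutputs(pwm_enabled=False, brake_engaged=True,
                                left_speed=0.0, right_speed=0.0)
        return MotorOutputs(pwm_enabled=True, brake_engaged=False,
                            left_speed=left, right_speed=right)

    def reset(self, button_pressed: bool) -> bool:
        """Clear the latch only if the button has been released; True if cleared."""
        if not button_pressed:
            self.latched = False
        return not self.latched
```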

Power-Off Switch

For very extreme situations in which power to the system must be completely cut (power lockout), there is an existing switch that can sever the entire system’s connection to the battery bank.

This switch allows road users as well as the robot’s operators to cut power to the robot in an emergency if necessary.

Mission Command Centre

The robot reports its position and status to the mission command centre via a wireless link. The mission command centre can be either a desktop at the Radio Science Lab or a laptop that a tester uses to monitor the robot, as long as the machine runs a Linux operating system. For our capstone team, the mission command centre is the laptop, but the software package we develop is also compatible with the desktop at RSL. According to the mission command centre requirements in our Requirements Document, the wireless link between the robot and the mission command centre allows a limited set of commands: mission start, mission end, and mission upload.
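The limited command set over the wireless link (mission upload, mission start, mission end) can be sketched as a small state machine on the robot side. The states, the rule that an upload is rejected while a mission is running, and the choice to clear the mission when it ends are assumptions for illustration; representing waypoints as latitude/longitude pairs is also an assumption, and all names are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


@dataclass
class Waypoint:
    lat: float  # degrees (assumed representation)
    lon: float  # degrees


class MissionState(Enum):
    IDLE = auto()     # no mission loaded
    LOADED = auto()   # mission uploaded, waiting for start
    RUNNING = auto()  # robot is executing the mission


@dataclass
class MissionHandler:
    state: MissionState = MissionState.IDLE
    waypoints: list[Waypoint] = field(default_factory=list)

    def upload(self, waypoints: list[Waypoint]) -> bool:
        # Reject empty missions and refuse to replace a mission mid-run.
        if self.state is MissionState.RUNNING or not waypoints:
            return False
        self.waypoints = list(waypoints)
        self.state = MissionState.LOADED
        return True

    def start(self) -> bool:
        # A mission can only start once one has been uploaded.
        if self.state is not MissionState.LOADED:
            return False
        self.state = MissionState.RUNNING
        return True

    def end(self) -> bool:
        # Ending a running mission clears it; the user must upload again.
        if self.state is not MissionState.RUNNING:
            return False
        self.state = MissionState.IDLE
        self.waypoints = []
        return True
```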

Vehicle Autonomy Computer

The autonomy computer brings together the localization, motion planning, and control of the robot. The algorithms running on the computer use the sensor information to build a model of the robot’s “world” and then make motion decisions based on its surroundings and the user-generated path. Note that, as listed in constraint C1.3, the autonomy computer has already been chosen and acquired by the client: it is the NVIDIA Jetson TX1, which is referenced in the following sections.