-

Notifications

You must be signed in to change notification settings - Fork 2

Software_Design

Our proposed software architecture heavily relies on our decision to use Robot Operating System (ROS) as our software framework.

The benefits of ROS are highlighted as follows (Fernandes 2012):

Allows for modular software design

Allows for easy integration of existing open-source packages.

Allows for collaboration with the international community

Allows software to be executed in a distributed system, thus balancing the computational requirements at different processing elements and PCs. This is especially relevant to the processing power limited by the Jetson TX1.

The alternative to this would integrating an existing message passing layer into a custom software stack. This would add considerable complexity and take more time to implement.

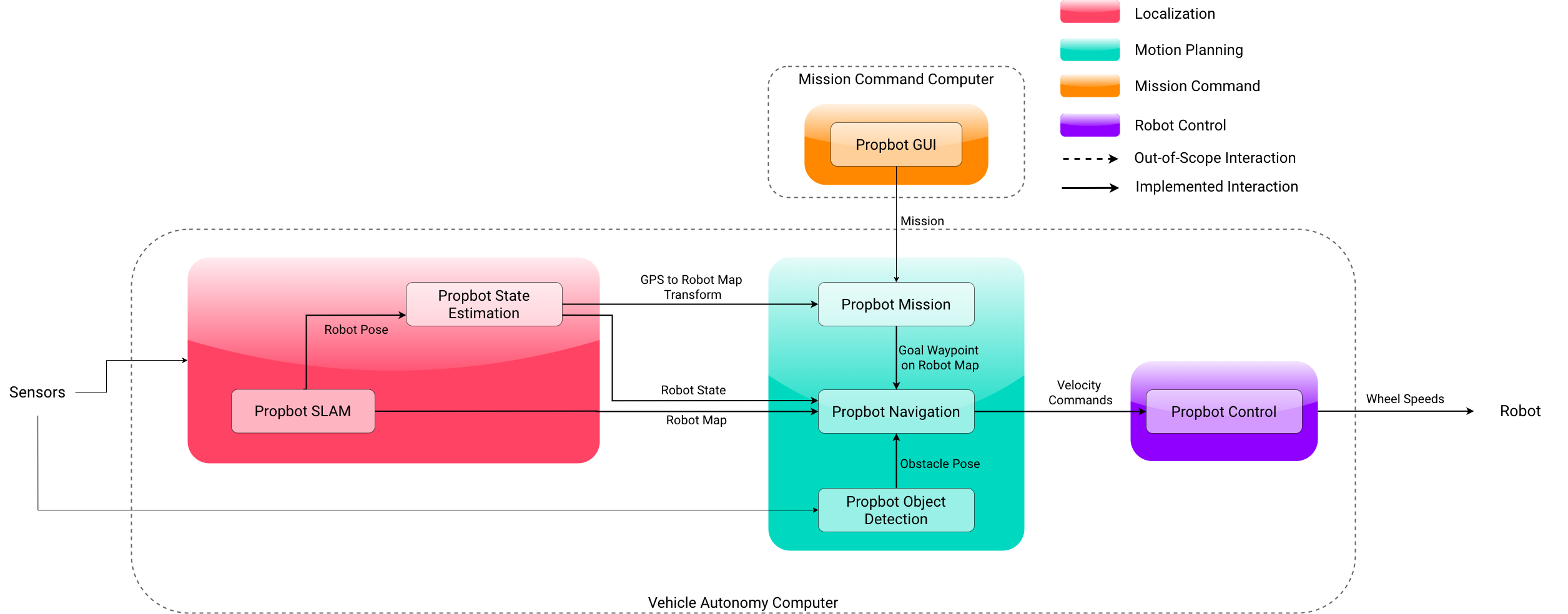

Propbot ROS package software architecture

Propbot ROS package software architecture

The proposed software architecture for our ROS packages is shown in the figure above. This figure highlights all of the custom Propbot packages that are added to carry out localization, motion planning, mission command, and robot control functions. It is important to note that the integration of the dynamic object detection is not completed, and was chosen to be completed in the future because it is difficult to integrate into a simulation environment. However, a brief overview of our standalone dynamic obstacle detection prototype is provided in the section called Propbot Object Detection. The packages were split up in this manner to modularize our software. This allows for future developers to easily switch out specific algorithms and libraries within the "Propbot" packages to improve the capabilities of the autonomy system. The software is modular and complies with the general ROS architecture in mind, so that the open source ROS SLAM, navigation, and control packages can be used.

The general functionality of the packages running on each computer (Mission Command and Vehicle Autonomy) are in the diagram are described below and their specific implementation details are described in the proceeding sections:

Mission Command Computer:

Propbot GUI: This package has the GUI that a user can use to create a mission and monitor the robot’s mission progress. This GUI will allow a user to set waypoints on a map, and send them to. In addition, it reads in "Robot Pose" (the robot’s position and orientation) from Propbot SLAM and displays it.

Vehicle Autonomy Computer:

Propbot Mission: This package takes in a mission and sends goals to Propbot Navigation. Using the "GPS to Robot Map Transform" from , it converts GPS coordinates specified in the mission to locations on the "Robot Map". It sends these as "Goal Waypoints" on the robot map to Propbot Navigation consecutively as each waypoint is reached. This package also has the ability to interface with Propbot GUI package to upload, start, and stop missions.

Propbot SLAM: This package houses the robot’s SLAM algorithm. The SLAM algorithm uses sensor data, specifically GPS, IMU, lidar, and the depth data from the RGB-D camera, to generate a map of the robot’s surroundings. This map identifies the robot’s location in the world, as well as the objects the robot sees around it. We refer to this as the "Robot Map" in the figure. The SLAM package outputs this map to Propbot Navigation to be used for motion planning. In addition, it outputs a transform between GPS coordinates to positions on the robot map to Propbot Mission.

Propbot Navigation: This package takes in a goal waypoint on the robot map and plans a feasible route for the robot. It plans a route that is free of collisions with obstacles, and it continuously updates this route plan as it received new information from Propbot SLAM. It then converts this route to velocity (speed and direction) commands and sends them to Propbot Control.

Propbot Control: This package takes in velocity commands and translates them to left and right wheel speeds to be sent to the robot.

Propbot State Estimation: This package accepts robot pose estimates from the SLAM algorithm as well as data from both situational awareness and positional sensors to compute the nonlinear state estimations for the robot moving in 3D space.

Propbot Object Detection: This package detects and tracks dynamic obstacles by computing their bounding boxes with the You Only Look Once (YOLO) and then projecting these bounding boxes into 3D space using laser scan data and an implementation based on a pinhole camera model.

In addition to the packages above, we have software for the vehicle interface as mentioned in Section and Section (described in Section) and software used to for simulation of the robot (described in Section). Our Propbot repository is split into setup, vehicle_autonomy, mission_command, simulation, vehicle_interface, directories to compartmentalize the software used on different processors.

For all decided software (except drivers), descriptions of the software package and the reasons for choosing it will be provided in the proceeding sections. Some components have still not been decided upon as they depend on hardware components or do not depend on the design of the rest of the system. Please refer to the Future Work page to see more information about undecided components.

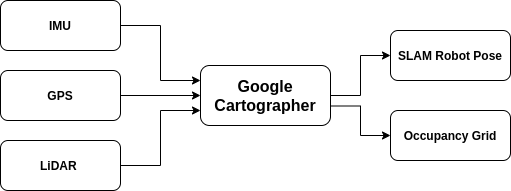

Final Choice: Google Cartographer

Selection Criteria

In order for PropBot to be useful to researchers, it needs to be able to record high accuracy location data. We have defined this accuracy to be 0.5m as the wireless technologies tested by the Radio Science Lab are sensitive to obstruction from the environment. This allows for the measurements made by the research payload to have accurate data about where measurements were taken. The following table outlines the required features of the localization software module and the figure shows an overview of our final choice.

Overview of SLAM with Google Cartographer

Overview of SLAM with Google Cartographer

| Required Feature | Justification |

|---|---|

| Position estimate based multiple input sensors, rather than just GPS. | According to the related tags, position data must be accurate to 0.5m (NF3.1) even in an interference zone (O6.5) where a GPS signal is not present. This means additional sensors such as wheel encoders, LiDAR, camera, and IMU should be inputs to the position estimate when GPS is not present or if GPS position is not accurate to 0.5m on its own. |

| Position estimate can be generated at least 1Hz. | Position estimate loop needs to be able to run at least 1Hz to ensure NF4.2 is satisfied. The localization module needs to be quick enough and able to handle the various sensor inputs on the Jetson TX1 (C1.3). |

Final Choice: robot_localization

Design

The propbot_state_estimation package utilizes components from the ROS robot_localization package to fuse a number of input sensor data and compute the nonlinear state estimations for robots moving in 3D space. Currently robot_localization is the only ROS package to provide an implementation of the Extended Kalman Filter(EKF). An overview of propbot_state_estimation package is provided in the figure . The package’s components are further described below:

EKF Localization (Odom): This state estimator node accepts a variety of continuous pose-related data and outputs a continuous robot state estimate that is locally accurate but is prone to drift over time. While this estimate cannot be used to determine the absolute state of the robot for global path planning, it is useful to the robot controller which uses this frequently updated pose information, to adjust the kinematic trajectory of the robot.

EKF Localization (Map): This state estimator node accepts a variety of discrete pose-related data, as well as the state estimate produced by the Odom instance of this node to compute a continuous globally accurate robot state estimate.

NavSat Transform: This node NavSat data transform node accepts GPS position data and a continuous robot state estimate from the map frame (output of the Map instance of the EKF localization node) to calculate a transform between the GPS data in the WSG84 frame and the robot state in the map frame in order to appropriately incorporate the GPS data into the robot state estimate.

-

Visual Odometry: Visual odometry uses RGB and Depth data to build maps that estimate the robot’s local position and velocity in locally accurate frame (odom). Since the SLAM package Cartographer does not use RGB data to output the ’SLAM Odom Pose’ as show in the figure , we use visual odometry to use RGB data to improve the robot’s state estimate in the odom frame. This estimate is fed into the Odom EKF filter for state estimation.

Final Choice:

rtab_mapSelection Criteria: The selection of this package is based on compatibility with our chosen vision sensor which is the Realsense RGBD camera. This package provides an improvment to our state estimation methods, but there are no specific performance requirements that are pertinent to the project. The visual odometry package must provide state estimations in the odom frame, and shouln’t do full SLAM (involving loop closure, mapping) because this would expend an unnecessary amount processing power.

Selection Justification: We specifically look at packages with existing ROS integrations that do purely localization (or have the option to do so) and support RGBD cameras. The available remaining options are

rpg_svo,dso_ros,ros_mono_vo, andviso2_ros. Out of all of these packages, the only package that has thorough documentation, is maintained, and compatible with the newest version of ROS (melodic) isrtab_map. We choosertab_mapbecause it allows for easy integration, low risk due to regular maintenance, and has a dedicated pure localization node.

Overview of Propbot State Estimation Package

Overview of Propbot State Estimation Package

Selection Justification

For a detailed trade study, please refer to Section 4.1.1 in the "Design Specifications" document in Propbot/docs/Reports.

Many of the SLAM libraries that were found online only made use of an RGB-D camera or a monocular camera. We eliminate these as options for SLAM as we would like to achieve the best position estimate by using all of the sensors on-board.

All of the explored packages satisfy our required features, but Google Cartographer is chosen due to its usage of the most different input sensors, highest accuracy, and continued support with ROS. Figure shows an overview of the Google Cartographer package. It takes in sensor data and outputs Robot Localization (Robot Pose) for Propbot Mission and a Robot Map for Propbot Navigation.

Final Choice: Custom Library: propbot_mission

Selection Criteria

This package must be able to execute a mission that is created by a user. The specific requirements for this package are listed in the table.

| Required Feature | Justification |

|---|---|

| Package converts GPS coordinates of a mission to robot map waypoints and sends them to the navigation stack. | The user will set GPS waypoints using a GUI, so this package must be able to take in GPS waypoints and convert them to goals that the robot can interpret. |

| Package monitors mission progress (which waypoint the robot is travelling towards). | The user should be able to monitor the robot’s mission progress, so this package should be able to keep track of the robot’s progress. |

| Package allows the mission to be started, paused, and stopped at any time. | For safety purposes, the robot should be able to stop its mission at any time if commanded. In addition, to improve the user experience and safety, the mission should also be able to "pause", such that it can be restarted after an interruption (related to the user or an external factor). |

| Package should be able to receive a mission from Propbot GUI. | The package must be able to interface with the GUI, so a user can create a mission. |

Selection Justification

The only publicly available open-source package that had the GPS waypoint capability mentioned in the table is the package waypoint_nav (Charron 2018). This package is able to accept a text file full of GPS coordinates and synchronously send them to the robot’s navigation stack. This package sends a waypoint, waits for it to be reached, and then sends the next one. This is a blocking process, and the only way to stop the mission is to kill all the processes on the robot. So, this package does not allow for missions to be started, stopped, and paused at any time. In addition, this code had poor styling, was not modular, was difficult to read, and did not allow for expansion of features.

This is why we have implemented our own propbot_mission library to satisfy the requirements for this package. The design is described below.

Design

Propbot mission package software architecture

Propbot mission package software architecture

The propbot library architecture and functionality is shown in the figure . The classes present in this library are Waypoint, Mission, and MissionHandler. These classes were made with future developers at RSL in mind, as they have generic interfaces between each other that still allow for further improvement or added functionality in the future. The propbot mission library uses the Simple Action Client library by ROS to communicate with Propbot Navigation. It uses asynchronous communication to submit and cancel goals, and receive goal statuses. We chose to use asynchronous communication because of the reasons mentioned in the following Safety Feature.

The class that handles mission execution communicates with Propot Navigation, thus allowing for the the mission to be started and stopped at any given time. The use of this type of communication allows for a safe, clean, and predictable end to a robot’s mission.

If synchronous communication was used, killing all of the robot processes would be the only way to end the mission, and the exit behaviour would not be predictable. The exact functionality of the classes are further described below:

Waypoint: This is a container class that holds different waypoint types and handles the conversion between them. This class is constructed with a GPS coordinate, and it stores this information. This class uses the "GPS to Robot Map Transform" to convert its GPS coordinate to a waypoint on the Robot Map coordinate. This is used within the Mission Handler class. This satisfies the requirement in the table of being able to take in GPS waypoints and convert them into waypoints on the robot map. Since the

Waypointclass is a container class, it can be easily expanded upon in the future to include other types of coordinates.Mission: This is a container class that holds a vector of Waypoint objects and has an accessor to this vector. This structure was created to provide a standard data type for missions. Its contents and functionalities can easily be expanded upon in the future without affecting existing functions. This standard data type can easily be integrated with

Mapviz, thus satisfying the requirement in the table of being able to receive a mission from the GUI.Mission Handler: This class performs the majority of the mission execution function. It holds an instance of the

SimpleActionClient, and is configured to communicate with the Propbot Navigation (as seen in the figure with different asynchronous arrows). ThisSimpleActionClientis used to submit goals, cancel goals, and receive goal status from Propbot Navigation.SimpleActionClientis an asynchronous library, so goals can be submitted or cancelled at any time. However, to receive goal status notification, the class hasWaypointCallbackand this sends the next waypoint goal upon success, or ends the mission with a failure flag set. This class has aStart()function that starts the ‘SimpleActionClient‘ and sends the first waypoint. ThePause()function cancels all goals, but does not end the mission and retains the current waypoint index of the mission so it can be later resumed. TheStop()function cancels all goals and sets a mission finished flag to true. This satisifes the start/stop/pause requirements mentioned in the table . This class also outputs "Mission Progress" in the form of the current waypoint number, whether the mission has ended, and whether the mission has failed, thus also fulfilling the mission progress requirement.

Propbot Navigation package software architecture

Propbot Navigation package software architecture

The ROS move_base package provides a framework for robot navigation stack by linking various plugins to move a robot from its current position to a desired goal position. An overview of the move_base package is provided in the figure above. The package’s components are further described below:

Global Costmap: This node accepts an occupancy grid produced by the SLAM algorithm and "inflates" the cells of the grids that are marked "occupied" as to increase the perceived size of the obstacles. The "inflation radius" is adjusted to account for the size of the robot and the robot to obstacle distance requirement. The resulting occupancy grid is referred to as the Global Costmap. This is implemented using the standard ROS

2d_costmappackage.Local Costmap: This node accepts depth sensor data such as point clouds or laser scans produced by a RGB-Depth camera and Lidar to detect the obstacles that are within close range of the robot. The detected obstacles are then "inflated" with a "inflation radius" and the resulting occupancy grid is referred to as the Local Costmap. This is implemented using the standard ROS

2d_costmappackage.-

Global Planner: This node takes in a 2D costmap as an input and outputs a path between the robot’s current position and its goal position based on the given obstacle information and robot turn radius.

Final Choice:

A* algorithm with global_plannerSelection Criteria: The global planner must generate a route to each waypoint that does not collide with any obstacle’s in the robot’s costmap. This is the only required feature of the global planner and it satisfies F1.1, F1.2, and F1.3, which state that the robot shall drive through user-generated waypoints, not collide with obstacles, and will stop at predefined points of interest.

Selection Justification: Only algorithms that are integrated in the ROS environment were considered for this trade study to ensure time and complexity of the project was not drastically increased. The algorithms available are

A*andDjikstrain the ROSglobal_plannerpackage, and the simple euclidean distance measurement method in the ROS ‘carrot_planner‘ package. The ‘carrot_planner‘ package is not a reasonable option because it plans a path that is the shortest vector between the robot’s position and the goal waypoint, and does not plan around obstacles as required.Djikstrais a graph search algorithm that finds the shortest path between nodes. In general,A*is known to be better thanDjikstra, asA*is the same asDjikstra, but with an additional heuristic to search closest paths in distance first, thus resulting in faster search times (n.d.). This is suitable for our application as quick planning and re-planning will be required as the robot maps out its environment.

-

Local Planner: This node takes in a 2D costmap and the plan produced by the global planner as inputs and generates velocity commands. These velocity commands allow the robot to follow the planned route as close as possible while taking into the given obstacle information and kinematics of the robot.

Final Choice:

teb_local_plannerSelection Criteria: The local planner must provide velocity commands that are feasible for our differential drive robot and send velocity commands that traverse the global plan without encountering any obstacles. This is related to F1.2 and NF2.1, which state that the robot must avoid obstacles and stay 1m away from all obstacles at all times.

We look local planners that are already integrated in the ROS ecosytem to to ensure time and complexity of the project was not drastically increased. We also only look at local planners that are suitable for differential drive robots. There are 5 available packages that meet these conditions:

asr_ftc_local_planner,teb_local_planner,dwa_local_planner,eband_local_planer, andadaptive_local_planner. We can immediately eliminateasr_ftc_local_plannerandeband_local_planerbecause they do not do any obstacle avoidance. We can also eliminateadaptive_local_plannerbecause it is a package that is not maintained or documented, thus it is unreliable for long-term use, and a high risk option for implementation. The remaining options areteb_local_planneranddwa_local_planner.teb_local_plannerrefers to a "Time Elastic Band" planner, and it models the local path as an elastic band that optimizes for reaching the goal in the shortest amount of time while avoiding obstacles (“Teb_local_planner,” n.d.). Thedwa_local_plannerpackage implements a "dynamic window" of trajectories to evaluate and scores the trajectories based on distance to obstacles and proximity to the global path (“Dwa_local_planner,” n.d.). Both of these packages meet the bare requirements of creating suitable velocity commands of differential drive robots and avoiding obstacles. However,teb_local_planneris known to have better compute performance (S´anchez 2018), along with faster replanning and smoother trajectories (Cybulski, Wegierska, and Granosik 2019). In addition, it has a feature that allows for integration of dynamic obstacle avoidance (a feature that no other local planner compared above has), which will be useful for future tight integration of thepropbot_perceptionoutputs of dynamic obstacle locations and local planning. Since performance is important as we are constrained by the TX1 processing power (C1.3), and dynamic obstacle avoidance will be important for Propbot’s operation in dense campus environments, we chooseteb_local_planneras our local planner.

Recovery Behaviours Plugins: A list of recovery behaviours plugins which will be run if the navigation stack fails to generate a valid plan to the goal position. Currently our navigation stack uses the default plugins

conservative_resetandaggressive_resetwhich will first force the robot to clear all obstacles in its map that are more than 3m away, and then perform an in-place rotation. If the robot still fails to generate a plan, the map is cleared.

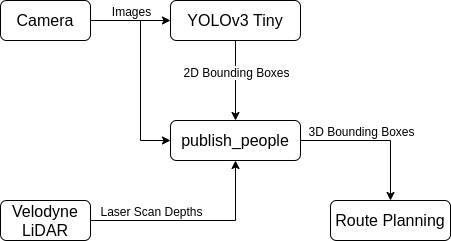

Final Choice: YOLOv3 (Tiny)

In order for the robot to effectively plan its route, it must intelligently identify obstacles in its path. Although the LiDAR will detect any obstacles the camera can, it is unable to distinguish between different types of obstacles. For example if there is a person on the side of a path, the LiDAR will detect the person like any other obstacle. Since the obstacle is not in the path of the robot’s motion no action is taken. However, this may result in a collision if the person suddenly moves into the robot’s path. In this scenario if the camera detects that the obstacle is a person then the robot can take the proper precautions to avoid them in case they move into the robot’s path.

To do the object detection and classification we choose to use YOLOv3. YOLOv3 is the latest iteration on the YOLO (You Only Look Once) algorithm. YOLOv3 stands out as having decently accurate predictions while taking less resources than competing algorithms like R-CNN or SSD (Redmon and Farhadi 2018). Compared to the competing algorithms, YOLOv3 only loses a small amount of accuracy in exchange for around double the speed of these algorithms. For example YOLOv3 scores a COCO mAP of 31 while taking 29 ms while RetinaNet-50 only gains 1 point at 32 mAP while taking 73 ms on the same hardware. (mAP = Mean Average Precision, a metric used for evaluating object detection algorithms). This is very important due the project constraints of using the Jetson TX-1 which means we have fairly limited computing resources. We evaluated YOLOv3 on the Jetson TX-1 using a demo application and found that it could reach at least 20 FPS in "Tiny" mode. Tiny mode reduces the mAP of YOLO down to 28 mAP while reducing inference time by about 30%. In practical use we found that "Tiny" mode still produced similar results to the regular YOLO on our data, so using this mode is preferred to reduce the load on the Jetson TX-1.

Another advantage of YOLOv3 is the ease of software integration. Most machine learning algorithms use frameworks like TensorFlow or Caffe. YOLOv3 is built as a single, small C library where the only major dependencies are OpenCV and CUDA both of which come pre-installed on the Jetson TX-1. This reduces the number of large dependencies we need to manage, thus reducing the project’s overall complexity.

Integration with Autonomy

Propbot Object Detection System Overview

Propbot Object Detection System Overview

A challenge with integrating YOLO into our autonomy stack is that YOLO was developed to work on 2D images or video. As a result YOLO only outputs bounding boxes in pixel coordinates on the image. For our purposes we would like the 3D coordinates of a detected object like a person in order to avoid them. To solve this issue we created a custom ROS node (publish_people) which converts the bounding boxes into 3D by fusing data from the LiDAR and YOLO together.

Projection of the bounding box from the image to 3D

Projection of the bounding box from the image to 3D

In order to find out the location of the detected object in 3D the robot must know its direction and its distance from it. The 2D bounding box provides the direction, the robot knows the direction its camera is pointing as well as the direction corresponding to each pixel using projection. Thus by projecting the corners of the bounding box it can figure out the direction of the detected object. This can then be used to find the corresponding depth values of the object from the LiDAR scan. These two pieces of information are enough to determine the location of the object in 3D space.

Since the robot knows the locations of dynamic obstacles around it and can track them over time, it can make simple predictions on where they will move next based on their speed. This information is used in the route planning to avoid moving into the path of these obstacles such as cars or pedestrians.

A person is detected on camera, they are then marked in 3D by a green cylinder

A person is detected on camera, they are then marked in 3D by a green cylinder

Final Choice: diff_drive_controller

Selection Criteria

PropBot is a six-wheeled differential drive system that has 4 motor-drive wheels. The controller for the system needs to take in a trajectory (velocity vector) and translate it to the required left and right wheel outputs.

Selection Justification

There are only two differential drive ROS packages (differential_drive and diff_drive_controller) with similar features, and both meet the selection criteria. However, only diff_drive_controller is currently maintained by the ROS community, is used by many other mobile robot projects, and is real-time suitable. Forums do suggest that diff_drive_controller is more difficult than differential_drive_controller. But since diff_drive_controller is real-time suitable and has more support available online, it is the best choice for our uses.

Final Choice: Custom firmware stack

Selection Criteria

The stack must be scalable to allow for more than four motors as the intention to add drivers to the middle wheels has been expressed, and portable to allow for various platforms to be used mostly interchangeably (e.g. Arduino platforms, ARM devices). In short, the stack must, to an extent, be hardware agnostic

Selection Justification

Although legacy code existed for the system, it would have to be rewritten for the integration of the RC interface. And, there are non-standard Arduino libraries that can be useful for motor control. However, the use of these Arduino-specific libraries locks the functionality to Arduino platforms.

Further, the firmware must be dynamic to allow for the addition of interfaces with multiple devices: motor drivers, peripheral sensors, autonomy computer, e-stop, remote controller.

Layered Vehicle Interface Firmware Stack

The figure shown in figure illustrates the layers of abstraction that exist within the firmware.

The layered vehicle interface firmware stack illustrated in is further explained below:

I/O At the top level, there is the I/O layer. This layer establishes the interface specifications of all peripheral devices: remote controller, autonomy computer, motor drivers, and e-stop. This can be expanded to included the ultrasonic sensor network or other peripheral devices in the future.

Wheel Models Next is the wheel models. In this layer, custom data structures for wheel motion commands are defined along with several default wheel modes. Further, pins and registers associated with the wheel motors are pulled from the I/O and mapped to the wheel "indices". This ensures that the model layer is agnostic to the pin and register values. This layer is completely reusable and portable across architectures.

Motion Commands The motion command layer deals with the translation of the generalized motor commands to motor driver commands. The setup allows easy integration of new motor drivers, as the interface for the device only needs to be defined and then the commands can be appropriately translated. To increase separation from the hardware, custom wrappers for interrupt enabling/disabling and pin mode setting will be integrated into the I/O which the command layer will pull from. This will render the command layer fully hardware agnostic.

Configuration The configuration layer is the most intertwined with hardware. It would have to be modified when porting to a different architecture, but can remain relatively the same for porting across platforms of the same architecture (in this case, Arduino platforms). The interfaces are initialized and peripherals are configured (GPIO, timers, interrupts, etc.) so the layer is hardware dependent.

Logarithmically Scaled Speed Commands

The manual controller, shown in figure 7 communicates over RC pulses. Wheel speed values are determined by the left or right joystick position with respect to the calibrated maximum and minimum. Initially, the RC value was mapped linearly to a duty cycle or speed value. However, a user will most likely not drive the robot at maximal speed. Further, increasing the resolution of lower speed commands allows for higher maneuverability at medium to low speeds. To achieve this, the mapping between the RC command values and the speed values is logarithmically scaled with user-selectable parameters. The user can change the maximum duty value and logarithmic scale factor to change the mapping. The figure below shows an example of how the mapping works with the user parameters.

The RC outputs are logarithmically mapped such that the majority of the joystick movement range is centred around fairly low speeds. This ensures that it is difficult for the user to accidentally increase the speed of the robot.

Front Ultrasonic Sensor Logic

Assembling an ultrasonic sensor at the front of the robot can help the robot detect the obstacle ahead. In the vehicle interface firmware stack, the ultrasonic sensor keeps running, measuring the distance to the obstacle ahead, and sending the distance to the vehicle interface via I2C (Inter-integrated Circuit) protocol. If the distance to the obstacle is less than or equal to twenty-five centimeters, the vehicle interface sends ’all-brake’ command to the wheels, which means shutting down the car immediately. The reason for setting twenty-five centimeters as the threshold value is because such as distance is the minimum distance that the ultrasonic sensor can detect (“MB1202 Datasheet,” n.d.).

Final choice: rosserial

Selection Criteria

We are using Arduino UNO as the vehicle interface, the package for building this communication channel shall be compatible with Arduino boards. Also, we are running Linux operating system in the autonomy computer, the package shall be able to operate in Linux system as well.

Selection Justification

There is only one ROS package that satisfies the criteria above, which is the rosserial. This ROS package is not only compatible with ROS Melodic in Linux system, but also supports Arduino boards including Arduino UNO (“Rosserial Package Summary,” n.d.).

Further, the rosserial package limits the number of ROS topics’ publishers and subscribers at twenty-five (“Rosserial Package Summary,” n.d.), which is much more than what we need in this communication channel. In this communication channel, we only need three publishers and three subscribers. Figure illustrates the architecture of this channel.

Communication channel’s architecture

Communication channel’s architecture

With respect to the autonomy computer, the vehicle interface is a listener or ROS topics’ subscriber. The user sends the left wheels’ speed, right wheels’ speed, and wheels’ direction in the three subscribers’ ROS topics respectively. With respect to the wheels, the vehicle interface is a talker or ROS topics’ publisher. After receiving messages from the subscriber’s topics, the vehicle interface publishes those messages to the wheels directly.

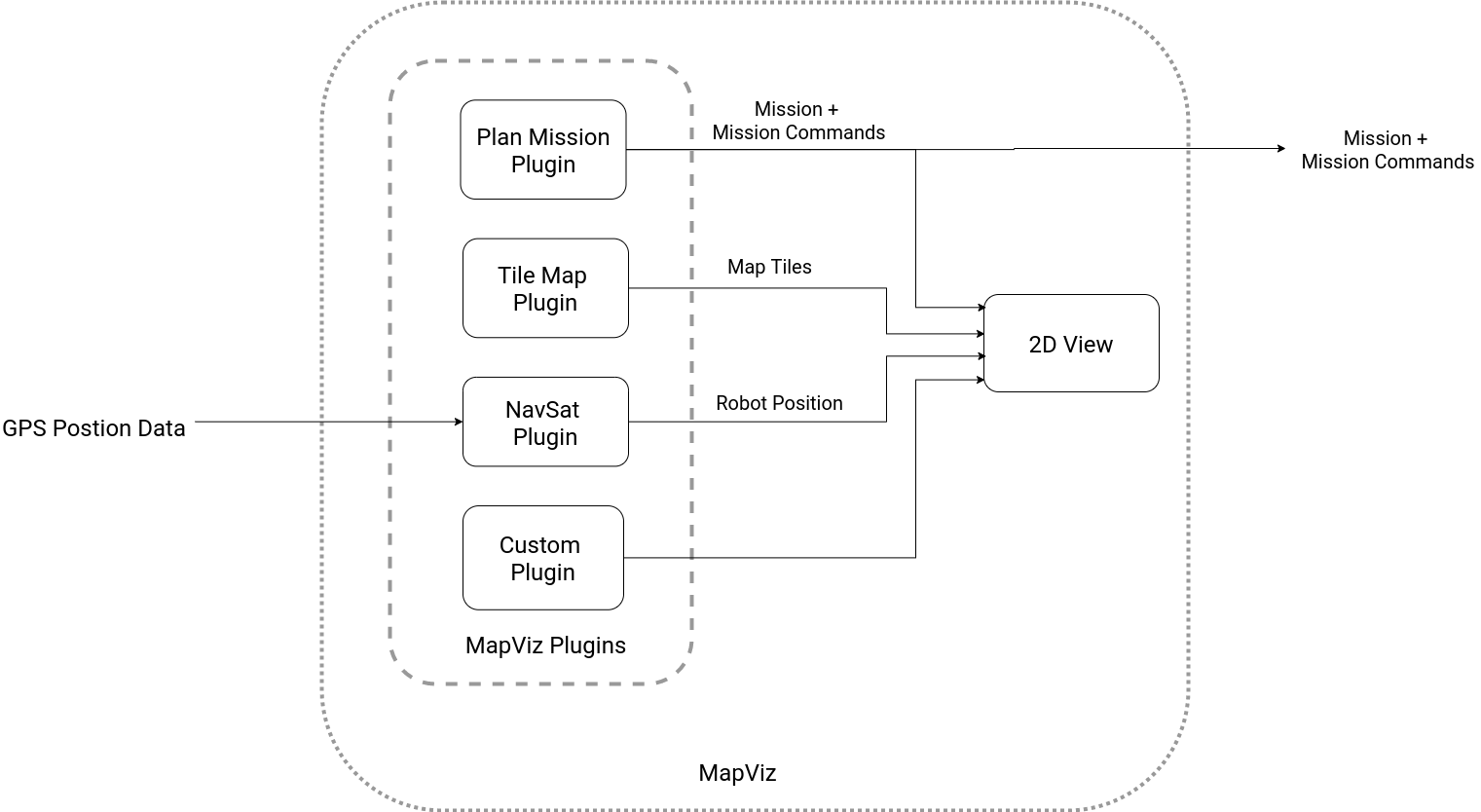

Final choice: Mapviz

The GUI is pictured in the figure 8.

MapViz Mission Command GUI

MapViz Mission Command GUI

Selection Criteria

The selection criteria for the Propbot GUI are shown in the table .

| Required Feature | Justification |

|---|---|

| GUI shows all configurable features on a single screen | GUI should be intuitive and require little to no configuration in order to improve the efficiency of the data collection process. |

| GUI displays location and route progress of the robot | Robot location is parameter that affects the wireless propagation tests conducted by the RSL lab, and the visibility of the route progress improves ease of use and in turn efficiency of the collection process. |

| GUI allows for the integration of custom plugins | This is a long term project which is planned to be completed after several capstone terms, so future teams should have the ability to integrate new autonomy features and new tests for the RSL |

| GUI is device agnostic | This is a long term project which is planned to be completed after several capstone terms, so future teams should have the ability to replace the existing hardware such as the autonomy computer when more autonomy features are implemented and the current processing power is found to be limiting |

Selection Justification

For a detailed trade study, please refer to Section 4.3.1 in the "Design Specifications" document in Propbot/docs/Reports.

MapViz is a ROS 2D visualization tool which loads plugins dynamically at runtime. This allows the developer to choose which features are included in the GUI and for future capstone teams or RSL members to extend the functionality of the GUI without modifying the MapViz source code. The other GUI option that was explored had been created specifically for drone autopilots which mean that it includes features and configuration fields that are not applicable to a ground robot. Moreover, this option was not device agnostic whereas MapViz can communicate with any computer that has ROS installed.

Design

The design of the Propbot GUI package is shown in the figure . The components are described below:

Plan Mission Plugin: This is a plugin we created to allow the user to set waypoints on the GUI and upload them to the robot.

Tile Map Plugin: This is an existing plugin package which obtains map tiles from Bing Maps, WMTS Tile service, or Stamen Design and displays them on the GUI.

Custom Plugin: This is a place holder for addition plugins which may be added by future teams.

2D View: This is the main GUI display.

Design overview of Propbot GUI

Design overview of Propbot GUI

Final choice: ROS Network

Selection Criteria

The selection criteria for the protocol are shown in the table .

| Required Feature | Justification |

|---|---|

| Protocol should allow the robot to communicate with the mission command centre over a local network | Short range communication is adequate for our project scope as the robot operator will be less than 5m away from the robot at all times. |

Selection Justification

A pair of ROS enabled requires minimal Network setup to configure bi-directional connectivity with basic ROS Network protocols. This protocol requires configuration on both the master and the slave however, this is adequate for our scope as only one robot will be connected.

In order to remove hardware factors from our software testing we will use simulation. Fortunately, ROS provides a standard set of high quality simulation/introspection tools. These include the Gazebo simulator and Rviz data visualizer which both integrate seamlessly into the ROS environment. The integration of these tools are handled by our own software packages: Propbot Gazebo, Propbot Description, and Propbot Rviz.

The figure below shows a high level diagram of how the simulation is used.

Overview of the Simulation Design

Overview of the Simulation Design

In simulation mode, Gazebo provides the following: simulated world, simulated robot, simulated sensors. The simulated world is the sandbox world the robot is inside, it can be changed to be similar to the expected real world environment. Simulated worlds can range from a simple field to detailed 3D scans of museums or other real world locations. The simulated robot uses a 3D model of Propbot equipped with the majority of our sensor suite which inclues 3D lidar, RGB-Depth camera, GPS and IMU. This is part of our package.

Propbot CAD model and the corresponding simulated model in Rviz

Propbot CAD model and the corresponding simulated model in Rviz

For both visual and validation purposes, the 3D model of Propbot was integrated into the simulations. This involved utilizing the existing SolidWorks CAD to generate essentially a parsable description of the robot’s structure, dynamics, and appearance.

Robot Structure

Example of a stereolithography (STL) mesh of a CAD model

Example of a stereolithography (STL) mesh of a CAD model

Figure shows an example of how a CAD model is converted into a mesh of triangular planes. The data size of a mesh is significantly less than that of a complete CAD as it only characterizes geometry. The lightweight nature of the meshes allow models to be integrated into simulations with ease. Then, colours, textures, and dynamics can be integrated as a layer on top of the geometry. So, to integrate the robot’s structure, the CAD was converted into a series of STL meshes. Then, the meshes were symbolically linked together to form the geometry of the entire robot.

Robot Dynamics

Although integrating a visual representation of the robot into the simulations is trivial, the description of a body’s dynamics is not as so. For this reason, the CAD was split into pieces so that their unique dynamics could be computed and the various joint types could be defined (e.g. rotation for wheels vs. fixed for different parts of the chassis). Using a SolidWorks plugin to export designs to a format compatible with ROS (“SolidWorks to Urdf Exporter,” n.d.), the CAD was converted to links to meshes with hard-coded numerical values for the inertia and collision characteristics.

Unfortunately, shortcomings in the CAD, e.g. unconventional axes and lack of material integration, affected the performance of the simulated model. Nonetheless, we worked with the values we could extract from the exported model, and integrated them into a custom model. From there, the dynamics performance was improved by modifying and manually tuning orientations, origin placement, and scaling of the mass and moments of inertia. It is recommended that this be remedied in the future via improvements to the CAD to ensure that it appropriately reflect the robot’s mass and inertial characteristics.

Wheel Dynamics

The various parts of the robot’s chassis were integrated as a set of static joints, as it is one large piece. The wheels, however, need to be fixed except for on their axle. Further, the real robot has only four motors to drive six wheels, with the central wheels acting as passive stabilizers. To characterize the mass, inertia, and driving characteristics of the wheels and robot, both passive (free-wheeling, not driven) and actuated wheel model descriptions were created an utilized.

The actuated wheels were then linked to the controller so that motion commands from the autonomy stack could be sent, allowing the robot’s operation to be simulated.

Accounting for Friction

The affects of friction vary greatly depending on the load, driving surface, incline, weather conditions, etc. To account for some conditions, ballpark values were defined for the wheel models so that sliding was mitigated.

Sensor Mounting

Placement orientation of the RGB-D camera and the Velodyne Puck in Rviz

Placement orientation of the RGB-D camera and the Velodyne Puck in Rviz

Gazebo Simulated Environment

Gazebo Simulated Environment

Not only can the robot’s geometry be modeled, but so can the sensors. Both the Velodyne Puck and the Realsense RGB-D camera were integrated into the simulated robot model. Figure shows the orientation specifications and placement of the sensors in both the Rviz and Gazebo simulation environments. The models used reflect the dimensions and mass of the real sensors.

Each sensor is simulated with a Gazebo Sensor Plugins which enables the robot to read data from the simulated world and react accordingly. For example if a garbage can is in front of the robot in the simulated world, then the simulated LiDAR would show a cylindrical object. Moreover, each sensor plugin is selected and tuned to mimic the properties and specifications of our selected sensor models.

The simulated sensors provide data to our software which performs navigation and routing as if it was running on the real robot. Our software’s movement decisions are fed back into the simulator which controls the simulated robot’s movement. The simulated real world can be viewed through Gazebo’s GUI, which shows a bird’s eye view of the robot and the simulated world. The data from both the simulated sensors and calculations output from our software can be visualized by Rviz, this makes it easy to understand what the robot is seeing. The robot interaction betwen the robot and the simulated world is illustrated in figure .

Point cloud visualization in Rviz: 3D Lidar (red), RGB-Depth camera (black)

Point cloud visualization in Rviz: 3D Lidar (red), RGB-Depth camera (black)

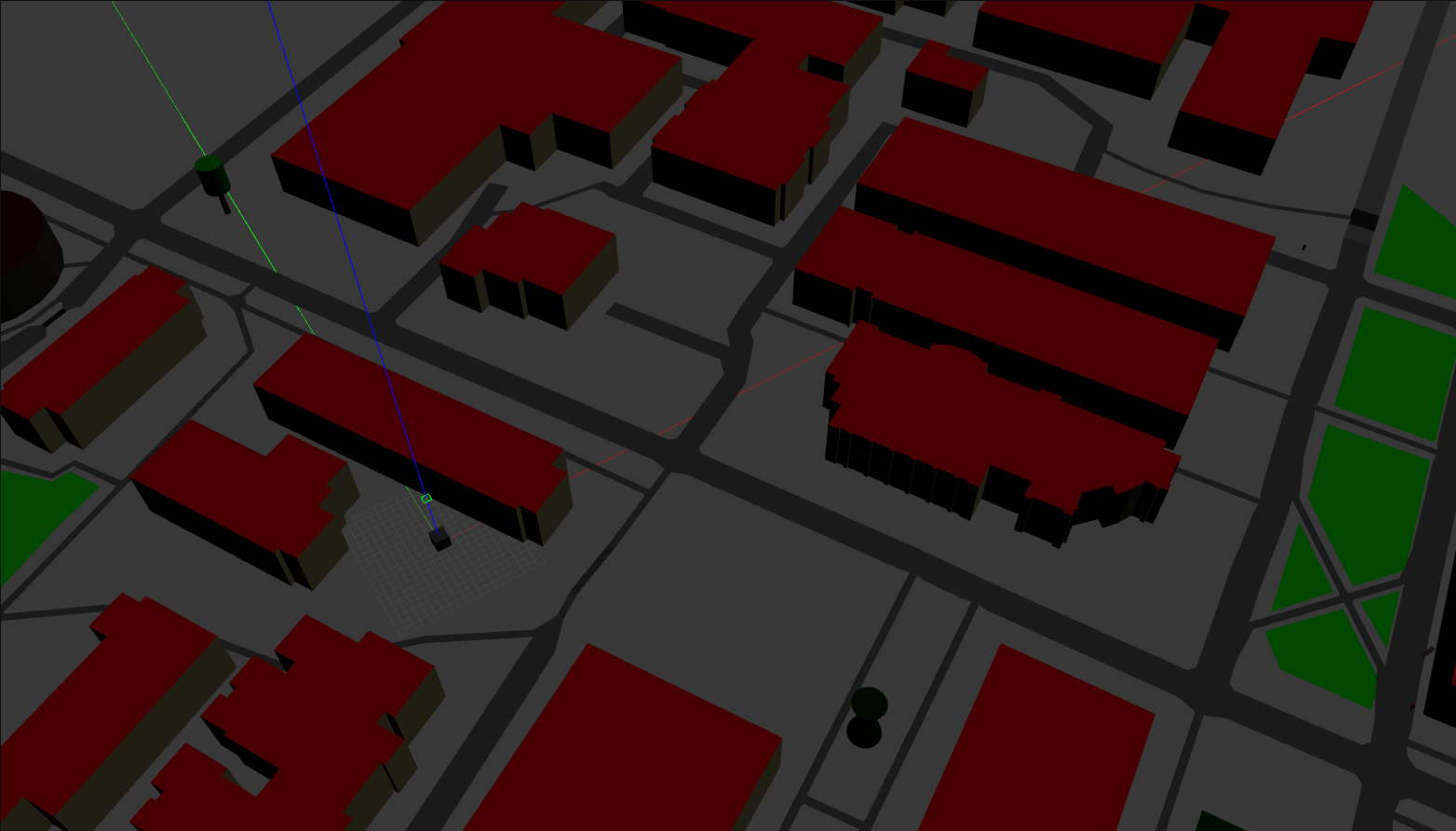

Gazebo Simulated Environment

Gazebo Simulated Environment

The Gazebo simulation environment can be populated with obstacles from the Gazebo model database or custom obstacles we construct from 3-D mesh files. Our custom environment is created by downloading geographic data from OpenStreetMaps (OSM) and converting this data into a 3-D model using the OSM2World converter. The generated 3-D model is populated with building structures, roads, and trees just as they appear on the real map and can be added to the Gazebo "world" where additional dynamic and static obstacles are inserted to create a more realistic simulation.

Google Earth

Google Earth

Gazebo Simulated Environment

Gazebo Simulated Environment

The robot contains a large number of different software components, many of which are very computationally intensive. One concerns with this is that our Jetson TX1 autonomy computer would not be able to handle the load of all of these software components. After implementing all of our features we tested the whole system on the Jetson TX1. To do the test we ran the simulation software on a separate computer connected via LAN to the Jetson TX1. The Jetson TX1 ran the autonomy software, just like in the actual robot. This is done since the simulation on its own is as intensive to run as the full autonomy software.

Process Monitor on the Jetson TX1

Process Monitor on the Jetson TX1

From the test found that the Jetson TX1’s CPU was pinned at 100% the entire time on all 4 cores. These are the top 5 components that took up the most processor time:

Move Base for robot control (70% total on 2 cores)

Visual Odometry (65% total on 2 cores)

Darknet ROS/YOLO for the dynamic obstacle detection (60% on 1 core)

Google Cartographer (40% total on 2 cores)

Pointcloud to Laserscan conversion (36% on 2 core)

In addition to this we saw that the simulation was not running around half speed and multiple software components were complaining about insufficient update rate. Despite this, the simulation was able to run slowly and the robot could move a bit. Therefore we can conclude that the Jetson TX1 is just barely insufficient to run the autonomy software. It is possible that optimizing the software a bit more would allow the TX1 to run it. However in a safety critical system it is better to over-provision to ensure reliable operation. Therefore our recommendation would be to upgrade to the Jetson TX2 which has much greater computational power.