
Integration with SP

Related artifacts and wiki pages:

Implementation by @tsoenen:

I have implemented these changes (some testing is still needed, but it seems OK overall). I adjusted the proposed keys and values a bit to make them more generic. For each connection point in each CNF in the service, there are three environment variables:

- `_<cp_id>_ip`: contains the floating IP of the CNF's load balancer

- `_<cp_id>_port`: contains the port of that connection point

- `_<cp_id>_type`: `container`

For each connection point in each VNF in the service, there are two environment variables:

- `_<cp_id>_ip`: contains the IP that this connection point maps to

- `_<cp_id>_type`: `vm`
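A minimal Python sketch of how a CNF or VNF might read these variables for one of its connection points. It assumes each key ends in `_<cp_id>_ip`, `_<cp_id>_port` or `_<cp_id>_type` with some prefix in front that the list above does not spell out; the `prefix` argument, the `read_connection_point` helper and the example values are hypothetical.

```python
import os
from typing import Mapping, Optional


def read_connection_point(cp_id: str, prefix: str = "",
                          env: Optional[Mapping[str, str]] = None) -> dict:
    """Collect the _<cp_id>_ip/_port/_type variables for one connection point.

    `prefix` is a hypothetical stand-in for whatever precedes `_<cp_id>`
    in the real key names; the exact key layout is an assumption here.
    """
    env = os.environ if env is None else env
    base = f"{prefix}_{cp_id}"
    port = env.get(f"{base}_port")  # only present for CNF ("container") CPs
    return {
        "ip": env.get(f"{base}_ip"),
        "port": int(port) if port is not None else None,
        "type": env.get(f"{base}_type"),  # "container" or "vm"
    }


# Illustrative values only (documentation IP ranges, made-up cp ids).
example_env = {
    "_cp1_ip": "192.0.2.10", "_cp1_port": "9090", "_cp1_type": "container",
    "_cp2_ip": "198.51.100.7", "_cp2_type": "vm",
}

print(read_connection_point("cp1", env=example_env))
# {'ip': '192.0.2.10', 'port': 9090, 'type': 'container'}
print(read_connection_point("cp2", env=example_env))
# {'ip': '198.51.100.7', 'port': None, 'type': 'vm'}
```

Because the `type` field distinguishes CNFs from VNFs, a consumer can tell from the environment alone whether a port is expected.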

I used `_` instead of `.` as the separator because the version can contain a `.`, which would make it difficult to split the values. The extra fields (type, port) should reduce the data needed in the descriptors. For consistency, we always use the IP of the load balancer, even if CNFs are on the same cluster. What do you think? We can test it whenever you want (only on pre-int for now).
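To illustrate the separator choice, the short Python snippet below joins the CC's vendor, name and version (taken from the example further down) with each separator and splits them again. It assumes, for illustration only, that a key is built by joining these fields; it is not the actual key format used by the SP.

```python
vendor, name, version = "eu.5gtango", "smpilot-cc", "0.1"

dotted = ".".join([vendor, name, version])
underscored = "_".join([vendor, name, version])

# With '.' as separator, the dots inside vendor and version look exactly like
# separators, so the three original fields cannot be recovered.
print(dotted.split("."))       # ['eu', '5gtango', 'smpilot-cc', '0', '1']

# With '_' as separator the split is unambiguous, as long as none of the
# fields itself contains an underscore.
print(underscored.split("_"))  # ['eu.5gtango', 'smpilot-cc', '0.1']
```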

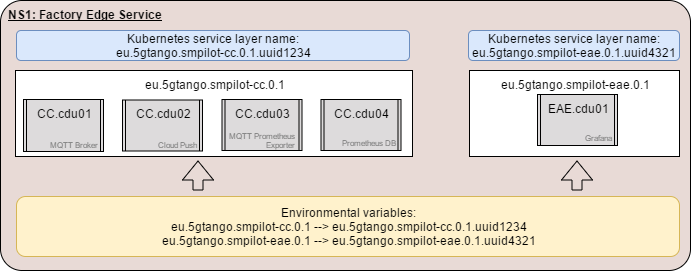

The SP will automate the deployment of NS and VNFs on Kubernetes by doing the following:

- Each CDU is deployed as a separate container, but all CDUs of one CNF are deployed in one pod, which is described in a k8s deployment

- Each CNF has its own k8s service, which allows accessing the pod from another pod using the service name (and Kubernetes DNS)

- When starting a CNF (or shortly afterwards via a rolling update), the SP will provide the service names of all other CNFs in the NS as environment variables (key-value pairs)

- The key is the `vendor.name.version` of the CNF, which is specified in the descriptors and known by the CNFs (it may also be made configurable when starting the CNF).

- The values are the k8s service names of each CNF, which are created and chosen by the SP (but must be unique), e.g., `vendor.name.version.some_uuid`.

- In doing so, each CNF can simply connect to another CNF within the same NS by looking up the k8s service name stored under that CNF's `vendor.name.version` key.
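The scheme above can be sketched in Python as two halves: the SP assigning unique service names and building each CNF's environment, and a CNF resolving a peer by its `vendor.name.version` key. This is a minimal sketch under stated assumptions: the helper names, the 8-character UUID suffix and the `smpilot-eae` CNF name are hypothetical, and no Kubernetes API is called.

```python
import uuid
from typing import Dict, List, Mapping, Tuple

Cnf = Tuple[str, str, str]  # (vendor, name, version) from the descriptor


def cnf_key(cnf: Cnf) -> str:
    """Env var key for a CNF: vendor.name.version."""
    return ".".join(cnf)


def build_env(cnfs: List[Cnf]) -> Dict[str, Dict[str, str]]:
    """SP side: give each CNF a unique service name, then hand every CNF
    the service names of all *other* CNFs in the NS as key/value pairs."""
    names = {cnf_key(c): f"{cnf_key(c)}.{uuid.uuid4().hex[:8]}" for c in cnfs}
    return {
        own: {key: svc for key, svc in names.items() if key != own}
        for own in names
    }


def resolve_peer(env: Mapping[str, str], vendor: str, name: str, version: str) -> str:
    """CNF side: look up a peer's k8s service name by its vendor.name.version."""
    return env[f"{vendor}.{name}.{version}"]


# "smpilot-eae" is a hypothetical name used only for this example.
ns = [("eu.5gtango", "smpilot-cc", "0.1"), ("eu.5gtango", "smpilot-eae", "0.1")]
env_per_cnf = build_env(ns)
eae_env = env_per_cnf["eu.5gtango.smpilot-eae.0.1"]
print(resolve_peer(eae_env, "eu.5gtango", "smpilot-cc", "0.1"))
```

One caveat worth checking before adopting the dotted naming: Kubernetes requires a Service name to be a valid RFC 1035 label (lowercase alphanumerics and `-`, at most 63 characters), so a name such as `vendor.name.version.some_uuid` would likely need to be sanitized before it is used as the actual Service name.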

For example:

- The EAE knows it wants to talk to the CC's Prometheus DB (CDU 4).

- It also knows that the CC's `vendor.name.version` is `eu.5gtango.smpilot-cc.0.1` and that the DB's port is 9090. This info is hardcoded or passed as an environment variable by the developer.

- The service platform dynamically decides the unique Kubernetes service name using some UUID and provides this service name to all involved CNFs.

- By checking the value of the env var `eu.5gtango.smpilot-cc.0.1`, the EAE learns that the CC's k8s service name is `eu.5gtango.smpilot-cc.0.1.uuid1234`.

- It can now easily reach the CC's DB at `eu.5gtango.smpilot-cc.0.1.uuid1234:9090`.
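The EAE side of this example might look roughly like the following Python sketch. It only builds the URL for a Prometheus instant query (`/api/v1/query`) and does not send the request; the `http` scheme, the helper name and the `up` query are illustrative assumptions, and the service name is the example value from above.

```python
import os
from typing import Mapping, Optional
from urllib.parse import urlencode

CC_KEY = "eu.5gtango.smpilot-cc.0.1"  # vendor.name.version of the CC
PROMETHEUS_PORT = 9090                # hardcoded or passed in by the developer


def prometheus_query_url(query: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Resolve the CC's k8s service name from the env and build a query URL."""
    env = os.environ if env is None else env
    host = env[CC_KEY]  # e.g. eu.5gtango.smpilot-cc.0.1.uuid1234
    return f"http://{host}:{PROMETHEUS_PORT}/api/v1/query?" + urlencode({"query": query})


# Simulated environment, as the SP would provide it to the EAE.
example_env = {CC_KEY: "eu.5gtango.smpilot-cc.0.1.uuid1234"}
print(prometheus_query_url("up", env=example_env))
# http://eu.5gtango.smpilot-cc.0.1.uuid1234:9090/api/v1/query?query=up
```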