-

Notifications

You must be signed in to change notification settings - Fork 2

Software Resources

- Kanban Flow - in progress task info

- CIRC Guide - sensor, motor, and communication information

- Docker docs - reference for additional information on Docker (check-in onboarding materials for set up)

- Google Docs Documentation Folder - use this folder for storing any in-progress documentation

- Linux CLI Tutorial and Linux CLI reference - for anyone looking to start from scratch or a refresher on Linux command line

- ROS Tutorials - get started with ROS

- Gazebo - Practice Arm Setup - practice for getting comfortable with gazebo

- Gazebo - Practice Base Setup - practice for getting comfortable with gazebo

Here is a log of the onboarding materials from 2023-2024

This week of materials is purely for practice working in Linux (Ubuntu) and setting up ROS. We have live USBs prepared with ROS installed so if you have a windows computer, Pg 8-50 are not necessary. On Mac with M chips, UTM virtual machine is recommended. Week 1: Setup Ubuntu & ROS, ROS & Git Basics

This week focuses on going through ROS tutorials. Week 2: Linux, More ROS, Code Walkthrough

This final week does a surface-level look at robotics concepts applicable to our team. There is a lot more than we can go into, so if you want to explore a topic deeper, you can either ask for or present a concept deep-dive at our team meetings! Week 3. Robotics Theories, Sensor overview, and Task Outlook

This section of the documentation will describe the process for configuring the Ublox GPS/GLONASS to broadcast data using ROStopic on ROS Noetic.

The following should be installed on your system before you can proceed with this guide:

- ROS Noetic

sudo apt-get install ros-noetic-ublox*

After Plugging the Ublox module to your computer, you can check the port it is connected to using the following command in Terminal:

dmesg | grep ttyIf the module is detected, you should see one of the output lines saying that it is connected to an usb port. Copy the port name (e.g. ttyACM0)

Change the permission of the port using the following command such that we can access the port later (change ttyACM0 to the port name previously found):

chmod a+rw /dev/ttyACM0Create a new file titled ublox_gps.launch. The file should contain the following xml code (change the device (port) name to the one previously):

<launch>

<arg name="node_name" default="ublox" />

<arg name="output" default="screen" />

<arg name="respawn" default="true" />

<arg name="respawn_delay" default="30" />

<arg name="clear_params" default="true" />

<node pkg="ublox_gps" type="ublox_gps" name="$(arg node_name)"

output="$(arg output)"

clear_params="$(arg clear_params)"

respawn="$(arg respawn)"

respawn_delay="$(arg respawn_delay)">

<!-- <rosparam command="load"

file="$(arg param_file_dir)/$(arg param_file_name).yaml" /> -->

<rosparam>

debug: 1

device: /dev/ttyACM0

frame_id: gps

gnss:

gps: true

</rosparam>

</node>

</launch>Run the launch file using the following command (modify accordingly if you are in a different directory):

roslaunch ublox_gps.launchTo verify that the data is being broadcasted, open a new terminal and launch roscore:

roscoreIn another terminal, list the available topics:

rostopic listYou should find a topic named ublox_gps if the launch file ran successfully.

To view the data being broadcasted, use the following command:

rostopic echo ublox_gps- Ensure that no "result.jpg" file exists at ~/rover_ws/src/rsx-rover. Delete "result.jpg" if needed.

- Start zed camera on jetson with

roslaunch rover zed_odom.launch- Run the panorama script at the location using

rosrun rover panorama.py- Once the code finishes, find the panorama at ~/rover_ws/src/rsx-rover/result.jpg

(Written by: Jo K.)

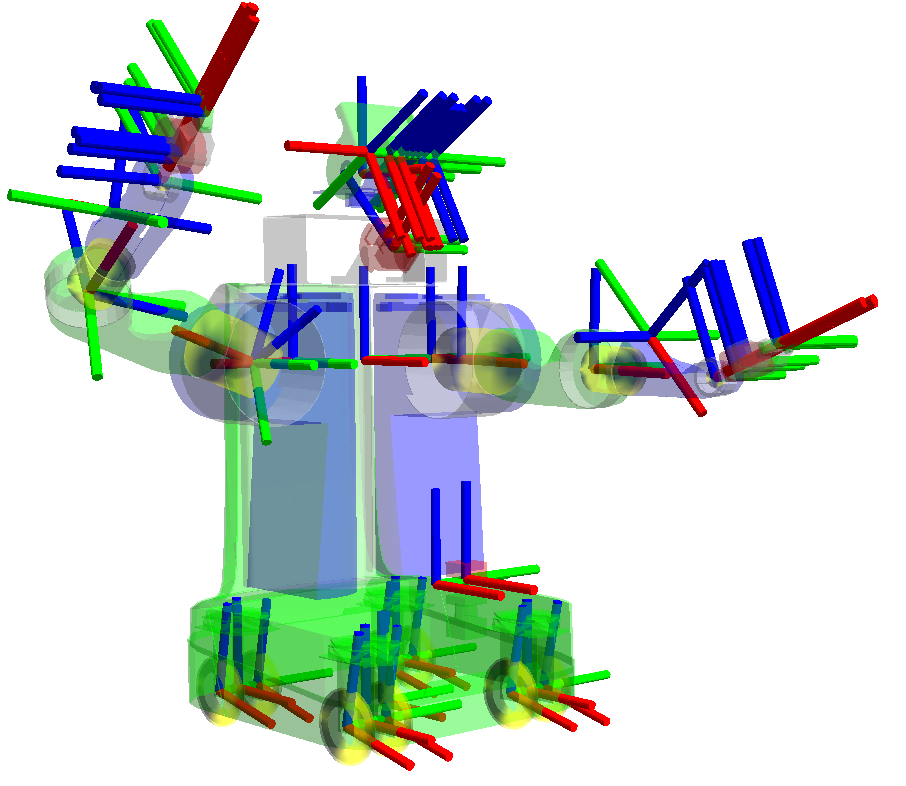

There are multiple simulations program available such as Gazebo, VREP, Webot (there are models of NASA mars rover there too), or even game engine. Here I will talk about how to build a simulation to run on Gazebo that runs with ROS control (cause ROS generally uses Gazebo simulation). To understand the simulation files and how they work, you should know about TF, URDF, and ROS control. Going through their tutorial will help significantly. I will also mention how to properly convert the SolidWorks file to STL file with the correct coordinate.

Note: We work with Gazebo Classic (11.x)

For Software Team:

- Know TF, URDF, and ROS Control

For Mech Team:

- Know how to convert Solidworks file (or whatever CAD program you use) into STL file. All we need is that mesh file, but you have to set the origin coordinate of that mesh file correctly so it makes software life easier. Going through TF and URDF will give you more ideas. Also know robotics kinematics and transformation really well would help.

| Link | Description |

|---|---|

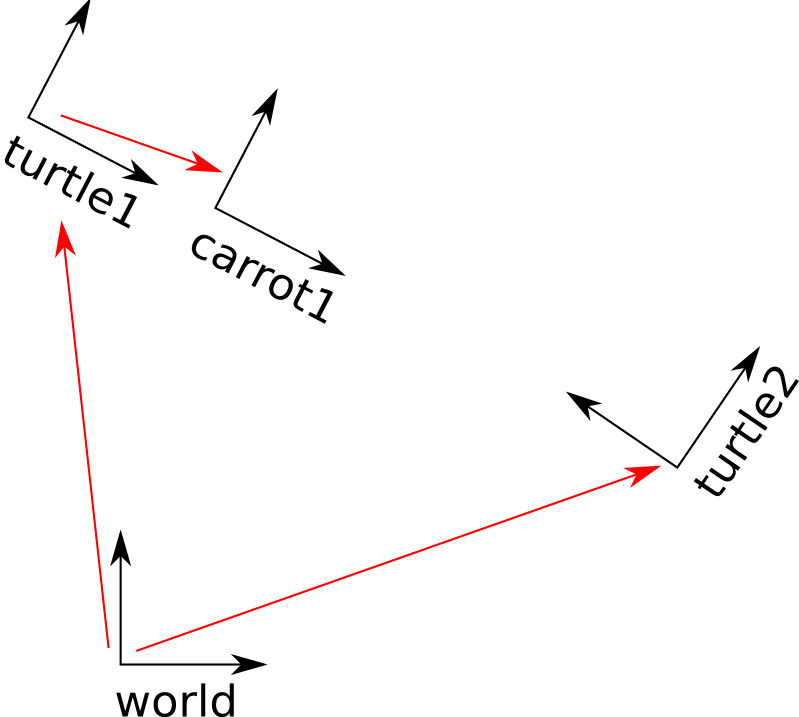

| TF TF2 | Transformation packages used for keeping track of transforms, note that tf2 is more up to date but both packages can be used |

| URDF Xacro | URDF and Xacro parsing packages |

| Gazebo Classic Docs | Gazebo Tutorials for ROS |

| ROS Control Gazebo ROS Control | Packages for control interfaces for ROS and ROS w/ Gazebo |

| Moveit | Moveit Tutorials, specifically note Moveit Setup Assistant - used for motion planning |

| Gazebo Sensor Plugins | Information on how to integrate sensors into gazebo |

- This ROS library pretty much does all the forward and inverse kinematic for you. This will help you understand how the link and joint works as well as where they are when you create a URDF file. Also good for debugging.

- The library can help you transform from Row Pitch Yaw (RPY) to Quaternion and vice versa

- Note that in URDF, it use RPY format to form link and joint from parent link to child link

URDF (Universal Robot Description Format) is an XML code that specifies the links, joints, looks, etc. of robot (as well as transmission which tell which joint are controllable in ros control)

Xacro is just something like URDF, but it allows more programming capability (like make function, or import file). Here I won't be talking much about xacro cause it's just like urdf. Once you understand URDF, xacro just help organize the file so it's totally recommend you learn that too. Xacro

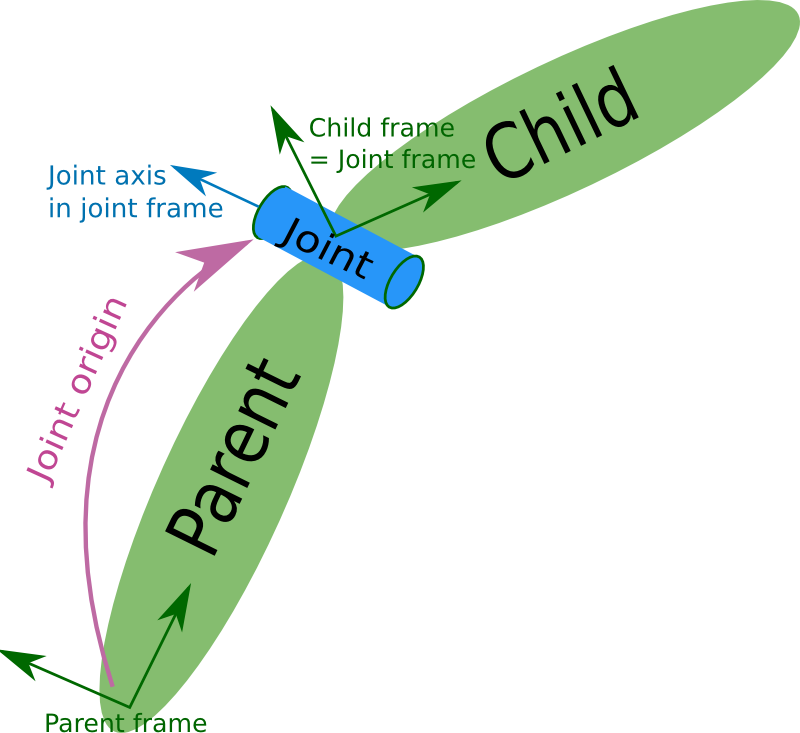

Just like TF, URDF specify a relationship between joint and link

For giving name to robot

<robot name="rrbot" xmlns:xacro="http://www.ros.org/wiki/xacro">

If want robot to be fixed to world. Create a link that have joint between it and the world

<link name="world"/>

<joint name="fixed" type="fixed">

<parent link="world"/>

<child link="link1"/>

</joint>

Else for other links

<!-- Base Link -->

<link name="link1">

<collision>

<origin xyz="0 0 ${height1/2}" rpy="0 0 0"/>

<geometry>

<box size="${width} ${width} ${height1}"/>

</geometry>

</collision>

<visual>

<origin xyz="0 0 ${height1/2}" rpy="0 0 0"/>

<geometry>

<box size="${width} ${width} ${height1}"/>

</geometry>

<material name="orange"/>

</visual>

<inertial>

<origin xyz="0 0 1" rpy="0 0 0"/>

<mass value="1"/>

<inertia

ixx="1.0" ixy="0.0" ixz="0.0"

iyy="1.0" iyz="0.0"

izz="1.0"/>

</inertial>

You can check all the available tags on URDF page. Collision tell how it will collide. Visual tell how it should look like which is showed in Gazebo. Usually visual will be the mesh file. The collision could be mesh file, but if file is big or you want to optimize the simulation (cause collision of mesh will take some computation) some people just use simple shapes like boxes or cylinder as collision.

<joint name="joint2" type="continuous">

<parent link="link2"/>

<child link="link3"/>

<origin xyz="0 ${width} ${height2 - axel_offset*2}" rpy="0 0 0"/>

<axis xyz="0 1 0"/>

<dynamics damping="0.7"/>

</joint>

Usually just need parent, child, origin, and axis

For those mechanical, you may want to see URDF part to get the idea of how the link and joint works. Pretty much the origin of the STL file will be the starting point and the software have to match the joints and links of all of them. Therefore, it's best to make the origin of that STL the axis of rotation / joint of that part.

- when saving as STL, click option

- checking “Do not translate STL output data to positive space” will make it use the origin coordinate of Solidworks

- or just choose output coordinate system at the bottom (if you made one) so this way you can design the part using any coordinate system and create the one you want to use for STL

- To check if coordinate is right, just open the STL file with Solidworks, and see where origin is

Now to create ros control, there are 2 ways

- Just use Moveit Setup Assistant and follow their tutorial

- Do it yourself, which I'm going to cover here

First, to allow ros control for both real and simulate robot, add a transmission tag to your URDF file

<transmission name="tran1">

<type>transmission_interface/SimpleTransmission</type>

<joint name="joint1">

<hardwareInterface>EffortJointInterface</hardwareInterface>

</joint>

<actuator name="motor1">

<hardwareInterface>EffortJointInterface</hardwareInterface>

<mechanicalReduction>1</mechanicalReduction>

</actuator>

</transmission>

If you are dealing with a real robot, change the EffortJointInterface in hardwareInterface tag to hardware_interface/EffortJointInterface. Transmission Note that you can change EffortJointInterface to whatever controller you want such as VelocityJointInterface if you want to control joint velocity. See available controller here

Also if you are doing this in Gazebo, add the gazebo ros control plugin as well. Don't forget to download it through apt-get install if you don't have it.

<gazebo>

<plugin name="gazebo_ros_control" filename="libgazebo_ros_control.so">

<robotNamespace>/YourRobotName</robotNamespace>

</plugin>

</gazebo>

Now we need to create a .yaml file. Put this in a config folder. Just look here to see how your files should be structured. The yaml file has the parameter that specify the type of controller, joints it's controlling, and other tuning parameters like PID. The file looks like this:

rrbot:

# Publish all joint states -----------------------------------

joint_state_controller:

type: joint_state_controller/JointStateController

publish_rate: 50

# Position Controllers ---------------------------------------

joint1_position_controller:

type: effort_controllers/JointPositionController

joint: joint1

pid: {p: 100.0, i: 0.01, d: 10.0}

joint2_position_controller:

type: effort_controllers/JointPositionController

joint: joint2

pid: {p: 100.0, i: 0.01, d: 10.0}

What you see there is the robot name of rrbot corresponding to robot name in urdf file, the joint state controller that publish all joint state (so other controller can use and it will help you debug), and 2 position controller for joint11 and joint2 (make sure those joints have transmission tag in urdf) Note that there's a ros rqt that let you try tuning the paremeter while robot is running (I think). Else you could just try tuning it yourself.

Now you can create a launch file that run all those controllers. This is what it'll look like

<launch>

<!-- Load joint controller configurations from YAML file to parameter server -->

<rosparam file="$(find rrbot_control)/config/rrbot_control.yaml" command="load"/>

<!-- load the controllers -->

<node name="controller_spawner" pkg="controller_manager" type="spawner" respawn="false"

output="screen" ns="/rrbot" args="joint1_position_controller joint2_position_controller joint_state_controller"/>

<!-- convert joint states to TF transforms for rviz, etc -->

<node name="robot_state_publisher" pkg="robot_state_publisher" type="robot_state_publisher"

respawn="false" output="screen">

<remap from="/joint_states" to="/rrbot/joint_states" />

</node>

</launch>

in the argument, pass the argument as all the names of the controllers in the yaml file. Now you can run the simulation and the file. Note that you can control those joint that has controller by publishing through its topic (which should end with name cmd I think, but that's for most case). Some controller though (such as trajectory controller) may be control through ros service.

A few thing to note about Gazebo is that you can command it through rosservice (which is request and response action). Just try rosservice list and you can see so many option such as resetting the world.

- A urdf file with simulation of a robot base.

- a launch file.

- a config folder with yaml file.

- Hector libraries which can be installed by running the following command in the terminal:

sudo apt-get install ros-noetic-hector-gazebo-plugins

Copy and paste the following plugin code in the urdf file:

<gazebo>

<plugin filename="libhector_gazebo_ros_imu.so"

name="hector_imu_plugin" >

<alwaysOn>true</alwaysOn>

<bodyName>imu_link</bodyName>

<topicName>imu</topicName>

<serviceName>/imu/calibrate</serviceName>

<gaussianNoise>0.0</gaussianNoise>

<updateRate>5.0</updateRate>

<frameName>imu_link</frameName>

<!-- Accelerometer config, 3 values are corresponding

to x,y,z axis respectively -->

<accelOffset>0 0 0 </accelOffset>

<accelGaussianNoise>0 0 0</accelGaussianNoise>

<accelDrift>0 0 0 </accelDrift>

<accelDriftFrequency>0 0 0</accelDriftFrequency>

<!-- Gyroscope config -->

<rateOffset>0 0 0</rateOffset>

<rateGaussianNoise> 0 0 0</rateGaussianNoise>

<rateDrift>0 0 0 </rateDrift>

<rateDriftFrequency> 0 0 0</rateDriftFrequency>

<!-- Separate error model for YAW angle -->

<yawOffset> 0 </yawOffset>

<yawGaussianNoise> 0 </yawGaussianNoise>

<yawDrift> 0 </yawDrift>

<yawDriftFrequency> 0 </yawDriftFrequency>

</plugin>

</gazebo>

The link and joint code for IMU:

<link name="imu_link">

<collision>

<origin xyz="0 0 0" rpy="0 0 0"/>

<geometry>

<box size="0.05 0.05 0.05"/>

</geometry>

</collision>

<visual>

<origin xyz="0 0 0" rpy="0 0 0"/>

<geometry>

<box size="0.05 0.05 0.05"/>

</geometry>

<material name="red"/>

</visual>

<inertial>

<mass value="1e-5" />

<origin xyz="0 0 0" rpy="0 0 0"/>

<inertia ixx="1e-6" ixy="0" ixz="0" iyy="1e-6" iyz="0" izz="1e-6" />

</inertial>

</link>

<joint name="imu_joint" type="fixed">

<origin

xyz="0.0 0 0.0"

rpy="1.5707963267949 0 0" />

<parent

link="base_link" />

<child

link="imu_link" />

<axis

xyz="0 0 0" />

</joint>

Change the name of parent link to your robot's base link.

Note: Don't forget to change the values of rpy (in the tag) to change the orientation of the sensor according to your robot simulation. If the imu node publishes approximately -9.8 as the z acceleration value, the orientation is probably right.

The code for GPS plugin is as follows:

<gazebo>

<plugin name="gps_controller" filename="libhector_gazebo_ros_gps.so">

<robotNamespace>/</robotNamespace>

<updateRate>40</updateRate>

<bodyName>base_link</bodyName>

<frameId>base_link</frameId>

<topicName>navsat/fix</topicName>

<velocityTopicName>navsat/vel</velocityTopicName>

<referenceLatitude>50.0</referenceLatitude>

<referenceLongitude>60.0</referenceLongitude>

<referenceHeading>0</referenceHeading>

<referenceAltitude>0</referenceAltitude>

<drift>0.0001 0.0001 0.0001</drift>

</plugin>

</gazebo>

Change base_link to your robot's base link.

The link and joint:

<link name="gps_link">

<collision>

<origin xyz="0 0 0" rpy="0 0 0"/>

<geometry>

<box size="0.05 0.05 0.05"/>

</geometry>

</collision>

<visual>

<origin xyz="0 0 0" rpy="0 0 0"/>

<geometry>

<box size="0.05 0.05 0.05"/>

</geometry>

<material name="red"/>

</visual>

<inertial>

<mass value="1e-5" />

<origin xyz="0 0 0" rpy="0 0 0"/>

<inertia ixx="1e-6" ixy="0" ixz="0" iyy="1e-6" iyz="0" izz="1e-6" />

</inertial>

</link>

<joint name="gps_joint" type="fixed">

<origin

xyz="0.0 0 0.278189004081027"

rpy="0 0 0" />

<parent

link="base_link" />

<child

link="gps_link" />

<axis

xyz="0 0 0" />

</joint>

Change base_link to your robot's base link.

Plugin Code:

<gazebo reference="lidar_link">

<sensor type="gpu_ray" name="os1_lidar">

<pose>0 0 0 0 0 0</pose>

<visualize>true</visualize>

<update_rate>10</update_rate>

<ray>

<scan>

<horizontal>

<samples>512</samples>

<resolution>1</resolution>

<min_angle>-3.141529</min_angle>

<max_angle>3.141529</max_angle>

</horizontal>

<vertical>

<samples>64</samples>

<resolution>1</resolution>

<min_angle>-3.141529</min_angle>

<max_angle>-3.141529</max_angle>

</vertical>

</scan>

<range>

<min>0.9</min>

<max>75.0</max>

<resolution>0.03</resolution>

</range>

</ray>

<plugin name="gazebo_ros_head_hokuyo_controller" filename="libgazebo_ros_gpu_laser.so">

<topicName>/rrbot/laser/scan</topicName>

<frameName>hokuyo_link</frameName>

</plugin>

</sensor>

</gazebo>

Note: <range> --> <max> tag can be changed to increase or decrease the radius sensed by the LIDAR.

The link and joint for LIDAR:

<link name="lidar_link">

<inertial>

<mass value="0.425"/>

<origin xyz="0 0 0.03675" rpy="0 0 0" />

<inertia ixx="0.000308429" ixy="0" ixz="0"

iyy="0.000308429" iyz="0" izz="0.00034589"/>

</inertial>

<collision>

<origin xyz="0 0 0.03675" rpy="0 0 0" />

<geometry>

<mesh filename="package://mobile_manipulator_body/meshes/os1_64.stl" />

</geometry>

</collision>

<visual>

<origin xyz="0 0 0" rpy="0 0 0"/>

<geometry>

<mesh filename="package://mobile_manipulator_body/meshes/os1_64.stl" />

</geometry>

</visual>

</link>

<joint name="lidar_joint" type="fixed">

<parent link="base_link"/>

<child link="lidar_link"/>

<axis

xyz="0 0 0" />

<origin

xyz="0.0 0 0.278189004081027"

rpy="0 0 0" />

</joint>

Change base_link to your robot's base link.

The mesh file for LIDAR can be found here

Paste this file in your meshes folder.

- Download the ekf.yaml from this ekf file.

- Move this file to your config folder.

- Copy the following code in the .launch file in your launch folder:

<node pkg="robot_localization" type="ekf_localization_node" name="ekf_odom_node" output="screen" >

<param name="frequency" value="30"/>

<param name="sensor_timeout" value="0.1"/>

<param name="two_d_mode" value="true"/>

<remap from="odometry/filtered" to="odom/ekf/enc_imu"/>

<!-- <param name="map_frame" value="map"/> -->

<param name="odom_frame" value="odom"/>

<param name="base_link_frame" value="base_link"/>

<param name="world_frame" value="odom"/>

<param name="transform_time_offset" value="0.0"/>

<param name="odom0" value="/rr_openrover_driver/odom_encoder"/>

<param name="odom0_differential" value="false" />

<param name="odom0_relative" value="false" />

<param name="odom0_queue_size" value="10" />

<rosparam param="odom0_config">[false, false, false,

false, false, false,

true, true, false,

false, false, true,

false, false, false]</rosparam>

<param name="imu0" value="/imu"/>

<param name="imu0_differential" value="false" />

<param name="imu0_relative" value="true" />

<param name="imu0_queue_size" value="10" />

<param name="imu0_remove_gravitational_acceleration" value="true" />

<rosparam param="imu0_config">[false, false, false,

false, false, false,

false, false, false,

true , true, true,

true, true, true]</rosparam>

<param name="print_diagnostics" value="true" />

<param name="debug" value="false" />

<param name="debug_out_file" value="debug_odom_ekf.txt" />

<rosparam param="process_noise_covariance">[0.05, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0.05, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0.06, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0.03, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0.03, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0.06, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0.025,0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0.025,0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0.04, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0.01, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.01, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.02, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.01, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.01, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.005]</rosparam>

<rosparam param="initial_estimate_covariance">[1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 1 , 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 1 , 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 1e-9, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1 , 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1e-9, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1e-9]</rosparam>

</node>

<node pkg="robot_localization" type="navsat_transform_node" name="navsat_transform_node" respawn="true">

<param name="magnetic_declination_radians" value="0"/>

<param name="yaw_offset" value="0"/>

<remap from="/imu/data" to="/imu" />

<remap from="/gps/fix" to="/navsat/fix" />

<remap from="/odometry/filtered" to="/odom/ekf/enc_imu" />

</node>

<node pkg="robot_localization" type="navsat_transform_node" name="random" respawn="true">

<rosparam param="odomN_config">[true, true, false,

false, false, false,

false, false, false,

false, false, false,

false, false, false]</rosparam>

<param name="odomN_differential" value="false"/>

</node>

<node pkg="nmea_navsat_driver" type="nmea_serial_driver" name="navsat" respawn="true">

<param name="port" value="/dev/ttyUSB0"/>

<param name="baud" value="19200"/>

</node>

- Change the transform node names based on your node names in the following lines of code:

<param name="imu0" value="/your_imu_node"/>

<remap from="/imu/data" to="/your_imu_node" />

<remap from="/gps/fix" to="/your_gps_node" />

- Done! Now you will be able to find the pose data in odom/ekf/enc_imu node and the imu and gps data will be more accurate.

These steps closely follow https://vimeo.com/58409707 and https://github.com/AS4SR/general_info/wiki/Creating-Heightmaps-for-Gazebo

This tutorial was performed on a computer running Ubuntu 20.04 LTS and Gazebo 11.

The GNU Image Manipulation Program (GIMP) was used for editing the grayscale image. If you do not already have it, install it on your machine.

This website can be used to create the heightmap image of any location in the world. If you want to create a random image use the Render->Noice->Difference Clouds Filter in GNU Gimp.

This is the heightmap for Atacama Desert (Mars like environment)

Once you have retrieved a suitable grayscale image, open it up in GIMP.

In order for Gazebo to use a grayscale as a heightmap, it must meet three conditions:

- The height and width must be equal (i.e. image must be square)

- The size of a side must satisfy pixels = 2^n+1, n = 1,2,3,... ; Based on these, the gray-scale can be of sizes 3 x 3, 5 x 5, 9 x 9, and so on. (129 x 129, 257 x 257, 513 x 513 are good sizes)

- The image must be an 8-bit image (pixels of are 8-bit unsigned integers a.k.a. 'uint8')

For 1 and 2, right click on the heightmap -> image -> Scale Image and put 129 x 129 (or 257 x 257, 513 x 513, etc.) in the height and width.

For 3, right click on the heightmap -> image -> precision -> select 8-bit

Note: Don't forget to right click on the image layer and select Remove Alpha Channel

To save the image go to file -> export as... and export it to the desktop as "YOURFILENAME.png". You can then exit GIMP.

Open up a terminal and change the directory to gazebo's model directory:

cd /usr/share/gazebo-11/models

The easiest way to create a new model is to copy an existing model, and then modify it. The best model in the directory to do this with is ground_plane:

sudo cp -r ground_plane/ YOURFILENAME

Go into the directory and list the files within:

cd YOURFILENAME/

ls

Two of the files in this directory should be model.config and model.sdf. First open up model.config in a text editor and change the meta information contained within to something appropriate for this model. It is important to note that the entry in the space will be the name that appears in Gazebo's model menu. When you're done, save and close the file.

Next we need to modify model.sdf. Within the file at this point is the collision and visual definitions of the ground_plane model (standard to SDF and URDF files) from which we copied it. These are significantly different from what we need for a heightmap, and so we will need to copy from a more appropriate source. Enter the following into the terminal:

sudo cp /usr/share/gazebo-11/worlds/heightmap.world model.sdf

When you open model.sdf, you will need to delete several portions that are not part of the heightmap defintion. Delete the items (both at the beginning and the end), the entire block, and all of the box objects. When you are done, the file will look like this:

<?xml version="1.0" ?>

<sdf version="1.5">

<model name="heightmap">

<static>true</static>

<link name="link">

<collision name="collision">

<geometry>

<heightmap>

<uri>file://media/materials/textures/heightmap_bowl.png</uri>

<size>129 129 10</size>

<pos>0 0 0</pos>

</heightmap>

</geometry>

</collision>

<visual name="visual_abcedf">

<geometry>

<heightmap>

<use_terrain_paging>false</use_terrain_paging>

<texture>

<diffuse>file://media/materials/textures/dirt_diffusespecular.png</diffuse>

<normal>file://media/materials/textures/flat_normal.png</normal>

<size>1</size>

</texture>

<texture>

<diffuse>file://media/materials/textures/grass_diffusespecular.png</diffuse>

<normal>file://media/materials/textures/flat_normal.png</normal>

<size>1</size>

</texture>

<texture>

<diffuse>file://media/materials/textures/fungus_diffusespecular.png</diffuse>

<normal>file://media/materials/textures/flat_normal.png</normal>

<size>1</size>

</texture>

<blend>

<min_height>2</min_height>

<fade_dist>5</fade_dist>

</blend>

<blend>

<min_height>4</min_height>

<fade_dist>5</fade_dist>

</blend>

<uri>file://media/materials/textures/heightmap_bowl.png</uri>

<size>129 129 10</size>

<pos>0 0 0</pos>

</heightmap>

</geometry>

</visual>

</link>

</model>

</sdf>

Next, you will need to add the appropriate and for the heightmap. In both the collision and visual definitions, change the so that it points to the model folder (don't worry that we don't yet have a /materials/textures/ path and the greyscale is not yet in the directory), and change the to the desired size (in meters) for the simulation; the third number is the elevation of the highest point:

<uri>model://YOURFILENAME/materials/textures/YOURFILENAME.png</uri>

<size>YOURSIZE1 YOURSIZE2 YOURHEIGHT</size>

(Note: the two sizes here do not have to match)

Now we will move the greyscale image into the correct location. Create the materials/textures directory and move the greyscale image into it

sudo mkdir -p materials/textures/

sudo mv ~/Desktop/YOURFILENAME.png materials/textures

Now we can try to load it into Gazebo. Run gazebo:

gazebo

In the insert tab on the right side of the screen, find your model.

When you find it, click its name and then click in the simulation window. The model will then spawn into the world (this may take a while depending on the size of your heightmap). You should now have a terrain heightmap in your Gazebo world.

The hector-mapping ROS package (Hector SLAM) allows for occupancy grid generation using solely lidar data (odometry is not required). To use this package successfully with the Ouster OS0 LIDAR, its data must be converted from the pointcloud2 message type to the laserscan message type. This is done with the package pointcloud-to-laserscan.

Install the required dependencies for ROS Noetic:

sudo apt install ros-noetic-pointcloud-to-lasercloud

sudo apt install ros-noetic-hector-mappingIf a rosbag with recorded lidar pointcloud data is being used, run the rosbag in the terminal:

rosbag play <rosbag> --clock

# To loop the rosbag infinitely, use -l

rosbag play <rosbag> -l --clockExample rosbags are available here.

Run the launch file:

roslaunch hector_slam_occupancy_grid.launchRviz should open automatically and a occupancy map should be generated.

If real time lidar data is being used, first run the lidar:

If an occupancy map does not appear in Rviz, try the following:

Run rqt_graph and check the ROS graph

rqt_graphThe graph should look similar to this:

Check the tf tree with the following:

sudo apt install ros-noetic-tf2-tools

rosrun tf2_tools view_frames.py

firefox frames.pdfThe tf tree should look similar to this:

If a rosbag is being used, make sure use_sim_time is set to true.

rosparam set use_sim_time true- Hector SLAM Documentation

- Pointcloud to Laserscan Documentation

- Hector SLAM Implementation for the RPLidar

- Hector SLAM Quickstart

To launch the camera in regular mode, run: roslaunch realsense2_camera rs_camera.launch filters:=pointcloud

To launch the camera to access IR data: roslaunch realsense2_camera rs_camera.launch enable_infra:=true enable_infra1:=true enable_infra2:=true depth_width:=640 depth_height:=480 depth_fps:=30 infra_width:=640 infra_height:=480 infra_fps:=30 infra_rgb:=false enable_sync:=true align_depth:=true

Note that for the IR data, all parameters related to IR need to be specified (see related issue).

Alternatively, add the parameters above to the rs_camera.launch file. Run rviz to visualize the stream. To view the topic, go to add --> by topic --> add relevant image topic

An easy way to create a custom object detection model is by using RoboFlow and Ultralytics' YOLOV8 Library. Here's the process:

Object Detection Pipeline:

flowchart TD;

A[Image Collection]

A --> B[Image Preprocessing and Labeling with Roboflow]

B --> C[Model Training with Ultralytics]

C --> D[Testing, Evaluation, and Fine-tuning with Ultralytics]

D --> E[Hardware Deployment]

Gather images. Consider using image data sets, APIs from image sites and Roboflow Universe. Aim for 200+ clear, high quality images.

Use Roboflow to label your images (draw bounding boxes around classes you want to detect). This can be done manually or automated by tools such as Roboflow Annotate.

Remove any images which are of poor quality or not ideal for model training. Use Roboflow to split your dataset into Training/Validation/Testing subsets.

If desired, Roboflow can also be used to apply image transformations and augment the raw images in the dataset.

Once done, make sure to Roboflow exports the dataset in the YOLOv8 Format. The export's file structure should be similar to this:

├───test

│ ├───images

│ └───labels

├───train

│ ├───images

│ └───labels

├───valid

│ ├───images

│ └───labels

│

│───data.yaml <--- Note this filename.

.

.

Note the name of the .yaml file as it will be needed for training.

# Creating a virtual Python environment is optional, but recommended to

# isolate any installed packages from the rest of your system.

python -m venv <directory-name>

# Source activate file for your OS.

source myvenv/bin/activate # For Linux

venv\Scripts\activate.bat # For Windows CMD

# Install the Ultralytics Python package.

pip install ultralyticsFollowing code was adapted from the Ultralytics Documentation.

from ultralytics import YOLO

# Load the default, pretrained YOLOv8 Nano Model.

model = YOLO('yolov8n.pt')

# Train and fine-tune the default model on your custom dataset.

# Specify the path to the .yaml file in your Roboflow export.

training_results = model.train(data='your-roboflow-file.yaml', epochs=3)

# Evaluate model with validation set

validation_results = model.val()

# Specify an inference source (.jpg, webcam frame, Youtube URL, image directory, etc.)

inference_source = 'test-image.jpg'

# Test model on source and display source with results (bounding boxes).

model.predict(inference_source, show=True)Once the model is sufficiently trained, use export() to export the model to a format suitable for your hardware.

model.export(format='tflite') # Export to TensorFlow LiteRefer to this script to use the custom model on real-time footage from the Intel RealSense D435i.