
Showing 13 changed files with 429 additions and 15 deletions.


182 changes: 182 additions & 0 deletions


apps/opik-documentation/documentation/docs/cookbook/ollama.ipynb


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,182 @@ | ||

| { | ||

| "cells": [ | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "# Using Opik with Ollama\n", | ||

| "\n", | ||

| "[Ollama](https://ollama.com/) allows users to run, interact with, and deploy AI models locally on their machines without the need for complex infrastructure or cloud dependencies.\n", | ||

| "\n", | ||

| "In this notebook, we will showcase how to log Ollama LLM calls using Opik by utilizing either the OpenAI or LangChain libraries.\n", | ||

| "\n", | ||

| "## Getting started\n", | ||

| "\n", | ||

| "### Configure Ollama\n", | ||

| "\n", | ||

| "In order to interact with Ollama from Python, we will need to have Ollama running on our machine. You can learn more about how to install and run Ollama in the [quickstart guide](https://github.com/ollama/ollama/blob/main/README.md#quickstart).\n", | ||

| "\n", | ||

| "### Configuring Opik\n", | ||

| "\n", | ||

| "Opik is available as a fully open source local installation or using Comet.com as a hosted solution. The easiest way to get started with Opik is by creating a free Comet account at comet.com.\n", | ||

| "\n", | ||

| "If you'd like to self-host Opik, you can learn more about the self-hosting options [here](https://www.comet.com/docs/opik/self-host/overview).\n", | ||

| "\n", | ||

| "In addition, you will need to install and configure the Opik Python package:" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "%pip install --upgrade --quiet opik\n", | ||

| "\n", | ||

| "import opik\n", | ||

| "opik.configure()" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "## Tracking Ollama calls made with OpenAI\n", | ||

| "\n", | ||

| "Ollama is compatible with the OpenAI format and can be used with the OpenAI Python library. You can therefore leverage the Opik integration for OpenAI to trace your Ollama calls:\n" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "from openai import OpenAI\n", | ||

| "from opik.integrations.openai import track_openai\n", | ||

| "\n", | ||

| "import os\n", | ||

| "os.environ['OPIK_PROJECT_NAME'] = 'ollama-integration'\n", | ||

| "\n", | ||

| "# Create an OpenAI client\n", | ||

| "client = OpenAI(\n", | ||

| "    base_url='http://localhost:11434/v1/',\n", | ||

| "\n", | ||

| "    # required but ignored\n", | ||

| "    api_key='ollama',\n", | ||

| ")\n", | ||

| "\n", | ||

| "# Log all calls made with the OpenAI client to Opik\n", | ||

| "client = track_openai(client)\n", | ||

| "\n", | ||

| "# call the local ollama model using the OpenAI client\n", | ||

| "chat_completion = client.chat.completions.create(\n", | ||

| "    messages=[\n", | ||

| "        {\n", | ||

| "            'role': 'user',\n", | ||

| "            'content': 'Say this is a test',\n", | ||

| "        }\n", | ||

| "    ],\n", | ||

| "    model='llama3.1',\n", | ||

| ")\n", | ||

| "\n", | ||

| "print(chat_completion.choices[0].message.content)" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "Your LLM call is now traced and logged to the Opik platform." | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "## Tracking Ollama calls made with LangChain\n", | ||

| "\n", | ||

| "In order to trace Ollama calls made with LangChain, you will need to first install the `langchain-ollama` package:" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "%pip install --quiet --upgrade langchain-ollama" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "You will now be able to use the `OpikTracer` class to log all your Ollama calls made with LangChain to Opik:" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "from langchain_ollama import ChatOllama\n", | ||

| "from opik.integrations.langchain import OpikTracer\n", | ||

| "\n", | ||

| "# Create the Opik tracer\n", | ||

| "opik_tracer = OpikTracer(tags=[\"langchain\", \"ollama\"])\n", | ||

| "\n", | ||

| "# Create the Ollama model and configure it to use the Opik tracer\n", | ||

| "llm = ChatOllama(\n", | ||

| "    model=\"llama3.1\",\n", | ||

| "    temperature=0,\n", | ||

| ").with_config({\"callbacks\": [opik_tracer]})\n", | ||

| "\n", | ||

| "# Call the Ollama model\n", | ||

| "messages = [\n", | ||

| "    (\n", | ||

| "        \"system\",\n", | ||

| "        \"You are a helpful assistant that translates English to French. Translate the user sentence.\",\n", | ||

| "    ),\n", | ||

| "    (\n", | ||

| "        \"human\",\n", | ||

| "        \"I love programming.\",\n", | ||

| "    ),\n", | ||

| "]\n", | ||

| "ai_msg = llm.invoke(messages)\n", | ||

| "ai_msg" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

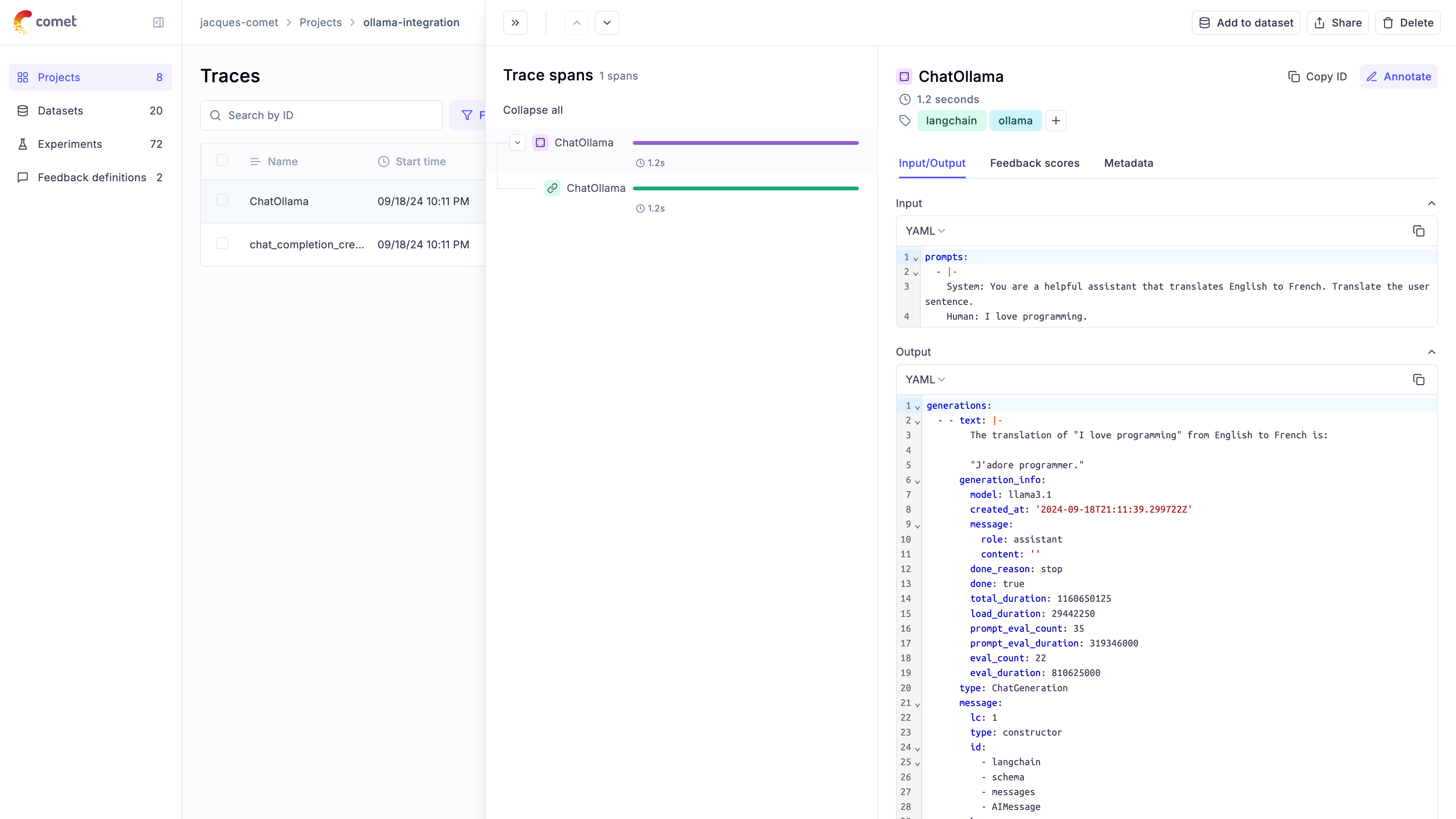

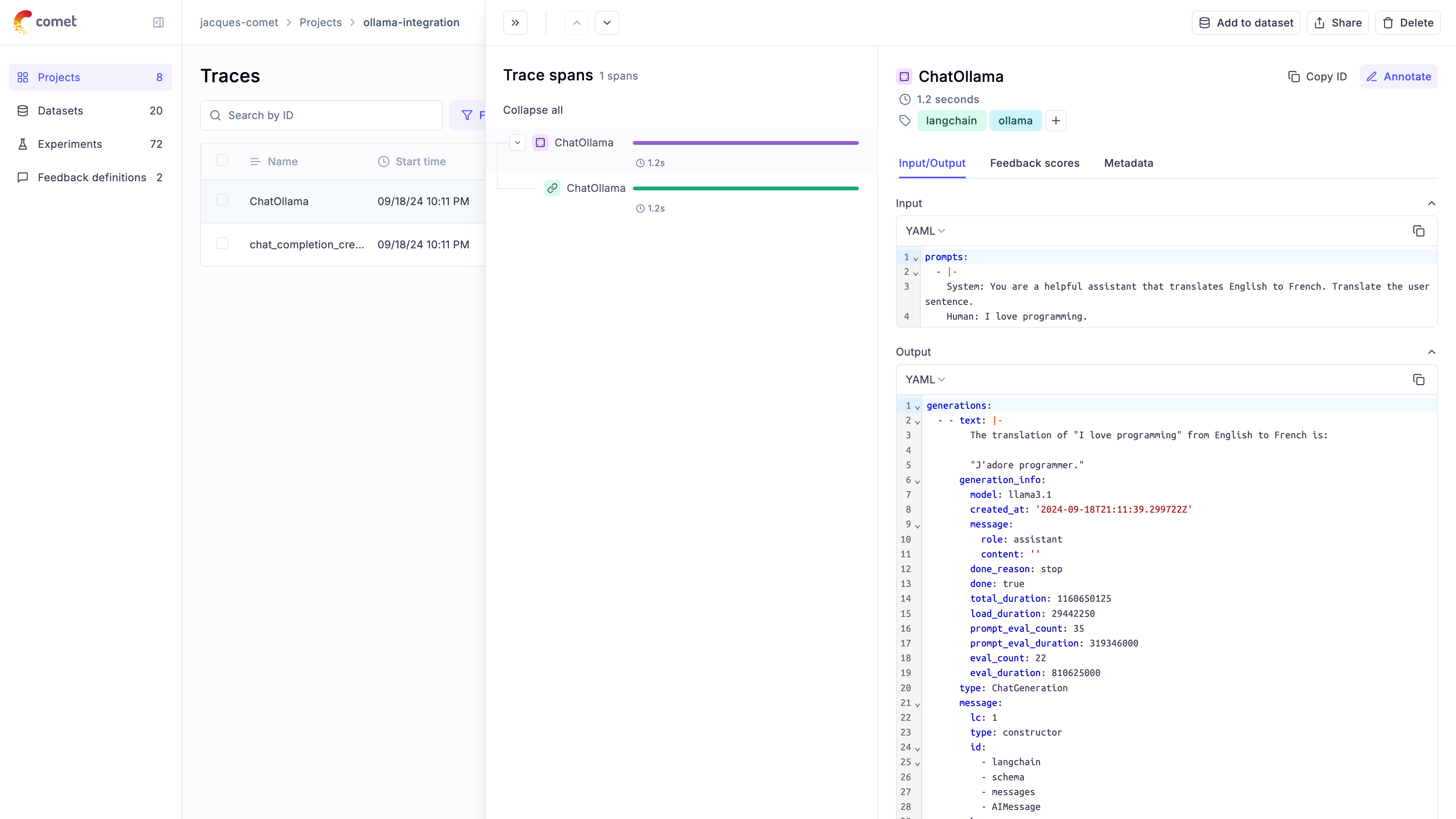

| "You can now go to the Opik app to see the trace:\n", | ||

| "\n", | ||

| "" | ||

| ] | ||

| } | ||

| ], | ||

| "metadata": { | ||

| "kernelspec": { | ||

| "display_name": "py312_llm_eval", | ||

| "language": "python", | ||

| "name": "python3" | ||

| }, | ||

| "language_info": { | ||

| "codemirror_mode": { | ||

| "name": "ipython", | ||

| "version": 3 | ||

| }, | ||

| "file_extension": ".py", | ||

| "mimetype": "text/x-python", | ||

| "name": "python", | ||

| "nbconvert_exporter": "python", | ||

| "pygments_lexer": "ipython3", | ||

| "version": "3.12.4" | ||

| } | ||

| }, | ||

| "nbformat": 4, | ||

| "nbformat_minor": 2 | ||

| } |
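
The notebook above assumes Ollama is already running on `localhost:11434` and that the `llama3.1` model has been pulled. A minimal pre-flight check, using only the standard library, could look like the sketch below. The helper names `list_ollama_models` and `has_model` are hypothetical and not part of Opik or Ollama; the sketch assumes Ollama's `GET /api/tags` endpoint, which lists locally available models.

```python
import json
import urllib.request


def list_ollama_models(base_url="http://localhost:11434", timeout=2.0):
    """Return the names of locally available models, or None if Ollama is unreachable.

    Hypothetical helper: it queries Ollama's /api/tags endpoint.
    """
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            payload = json.load(resp)
    except (OSError, ValueError):
        # OSError covers refused connections, timeouts, and URLError;
        # ValueError covers a response body that is not valid JSON.
        return None
    if not isinstance(payload, dict):
        return None
    return [m.get("name", "") for m in payload.get("models", [])]


def has_model(model_names, wanted):
    """True if `wanted` matches a name exactly or ignoring its tag (e.g. 'llama3.1:latest')."""
    return any(n == wanted or n.split(":", 1)[0] == wanted for n in model_names)


if __name__ == "__main__":
    models = list_ollama_models()
    if models is None:
        print("Ollama does not appear to be running on localhost:11434")
    elif not has_model(models, "llama3.1"):
        print("Model 'llama3.1' not found; it may need to be pulled first")
    else:
        print("Ollama is reachable and 'llama3.1' is available")
```

Running this before the tracing cells separates "Ollama is not up" from genuine tracing problems, since otherwise both surface as a failed `client.chat.completions.create` call.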

111 changes: 111 additions & 0 deletions


apps/opik-documentation/documentation/docs/cookbook/ollama.md


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,111 @@ | ||

| # Using Opik with Ollama | ||

| | ||

| [Ollama](https://ollama.com/) allows users to run, interact with, and deploy AI models locally on their machines without the need for complex infrastructure or cloud dependencies. | ||

| | ||

| In this notebook, we will showcase how to log Ollama LLM calls using Opik by utilizing either the OpenAI or LangChain libraries. | ||

| | ||

| ## Getting started | ||

| | ||

| ### Configure Ollama | ||

| | ||

| In order to interact with Ollama from Python, we will need to have Ollama running on our machine. You can learn more about how to install and run Ollama in the [quickstart guide](https://github.com/ollama/ollama/blob/main/README.md#quickstart). | ||

| | ||

| ### Configuring Opik | ||

| | ||

| Opik is available as a fully open source local installation or using Comet.com as a hosted solution. The easiest way to get started with Opik is by creating a free Comet account at comet.com. | ||

| | ||

| If you'd like to self-host Opik, you can learn more about the self-hosting options [here](https://www.comet.com/docs/opik/self-host/overview). | ||

| | ||

| In addition, you will need to install and configure the Opik Python package: | ||

| | ||

| | ||

| ```python | ||

| %pip install --upgrade --quiet opik | ||

| | ||

| import opik | ||

| opik.configure() | ||

| ``` | ||

| | ||

| ## Tracking Ollama calls made with OpenAI | ||

| | ||

| Ollama is compatible with the OpenAI format and can be used with the OpenAI Python library. You can therefore leverage the Opik integration for OpenAI to trace your Ollama calls: | ||

| | ||

| | ||

| | ||

| ```python | ||

| from openai import OpenAI | ||

| from opik.integrations.openai import track_openai | ||

| | ||

| import os | ||

| os.environ['OPIK_PROJECT_NAME'] = 'ollama-integration' | ||

| | ||

| # Create an OpenAI client | ||

| client = OpenAI( | ||

|     base_url='http://localhost:11434/v1/', | ||

| | ||

|     # required but ignored | ||

|     api_key='ollama', | ||

| ) | ||

| | ||

| # Log all calls made with the OpenAI client to Opik | ||

| client = track_openai(client) | ||

| | ||

| # call the local ollama model using the OpenAI client | ||

| chat_completion = client.chat.completions.create( | ||

|     messages=[ | ||

|         { | ||

|             'role': 'user', | ||

|             'content': 'Say this is a test', | ||

|         } | ||

|     ], | ||

|     model='llama3.1', | ||

| ) | ||

| | ||

| print(chat_completion.choices[0].message.content) | ||

| ``` | ||

| | ||

| Your LLM call is now traced and logged to the Opik platform. | ||

| | ||

| ## Tracking Ollama calls made with LangChain | ||

| | ||

| In order to trace Ollama calls made with LangChain, you will need to first install the `langchain-ollama` package: | ||

| | ||

| | ||

| ```python | ||

| %pip install --quiet --upgrade langchain-ollama | ||

| ``` | ||

| | ||

| You will now be able to use the `OpikTracer` class to log all your Ollama calls made with LangChain to Opik: | ||

| | ||

| | ||

| ```python | ||

| from langchain_ollama import ChatOllama | ||

| from opik.integrations.langchain import OpikTracer | ||

| | ||

| # Create the Opik tracer | ||

| opik_tracer = OpikTracer(tags=["langchain", "ollama"]) | ||

| | ||

| # Create the Ollama model and configure it to use the Opik tracer | ||

| llm = ChatOllama( | ||

|     model="llama3.1", | ||

|     temperature=0, | ||

| ).with_config({"callbacks": [opik_tracer]}) | ||

| | ||

| # Call the Ollama model | ||

| messages = [ | ||

|     ( | ||

|         "system", | ||

|         "You are a helpful assistant that translates English to French. Translate the user sentence.", | ||

|     ), | ||

|     ( | ||

|         "human", | ||

|         "I love programming.", | ||

|     ), | ||

| ] | ||

| ai_msg = llm.invoke(messages) | ||

| ai_msg | ||

| ``` | ||

| | ||

| You can now go to the Opik app to see the trace: | ||

| | ||

|  |
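
The two examples in this file use different message shapes: the OpenAI client takes dictionaries with `role` and `content` keys, while the LangChain example passes `(role, content)` tuples with roles such as `"system"` and `"human"`. When moving a prompt from one example to the other, a small conversion along these lines may help. The helper `to_openai_messages` is hypothetical and not part of Opik, LangChain, or the OpenAI library; the role mapping is an assumption based on the role names the two examples use.

```python
# Hypothetical helper for illustration only; not provided by any of the libraries above.
# LangChain-style role names that differ from the OpenAI chat format.
_ROLE_MAP = {"human": "user", "ai": "assistant"}


def to_openai_messages(pairs):
    """Convert (role, content) tuples into OpenAI-style message dictionaries.

    Roles not listed in _ROLE_MAP (for example "system") are passed through unchanged.
    """
    return [
        {"role": _ROLE_MAP.get(role, role), "content": content}
        for role, content in pairs
    ]


langchain_style = [
    ("system", "You are a helpful assistant that translates English to French. Translate the user sentence."),
    ("human", "I love programming."),
]

openai_style = to_openai_messages(langchain_style)
```

The resulting `openai_style` list can be passed as the `messages` argument of `client.chat.completions.create` in the OpenAI example, so the same prompt can be traced through either integration.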
