
Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

* Add first draft of watsonx cookbook
* Add first draft of watsonx integration doc
* Add last missing links

1 parent 71d2bd4, commit 0e113b1

Showing 8 changed files with 449 additions and 1 deletion.


217 changes: 217 additions & 0 deletions


apps/opik-documentation/documentation/docs/cookbook/watsonx.ipynb


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,217 @@ | ||

| { | ||

| "cells": [ | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "# Using Opik with watsonx\n", | ||

| "\n", | ||

| "Opik integrates with watsonx to provide a simple way to log traces for all watsonx LLM calls. This works for all watsonx models." | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "## Creating an account on Comet.com\n", | ||

| "\n", | ||

| "[Comet](https://www.comet.com/site?from=llm&utm_source=opik&utm_medium=colab&utm_content=watsonx&utm_campaign=opik) provides a hosted version of the Opik platform. [Simply create an account](https://www.comet.com/signup?from=llm&utm_source=opik&utm_medium=colab&utm_content=watsonx&utm_campaign=opik) and grab your API key.\n", | ||

| "\n", | ||

| "> You can also run the Opik platform locally; see the [installation guide](https://www.comet.com/docs/opik/self-host/overview/?from=llm&utm_source=opik&utm_medium=colab&utm_content=watsonx&utm_campaign=opik) for more information." | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "%pip install --upgrade opik litellm" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "import opik\n", | ||

| "\n", | ||

| "opik.configure(use_local=False)" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "## Preparing our environment\n", | ||

| "\n", | ||

| "First, we will set up our watsonx API keys." | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "import os\n", | ||

| "\n", | ||

| "os.environ[\"WATSONX_URL\"] = \"\" # (required) Base URL of your WatsonX instance\n", | ||

| "# (required) either one of the following:\n", | ||

| "os.environ[\"WATSONX_API_KEY\"] = \"\" # IBM cloud API key\n", | ||

| "os.environ[\"WATSONX_TOKEN\"] = \"\" # IAM auth token\n", | ||

| "# optional - can also be passed as params to completion() or embedding()\n", | ||

| "# os.environ[\"WATSONX_PROJECT_ID\"] = \"\" # Project ID of your WatsonX instance\n", | ||

| "# os.environ[\"WATSONX_DEPLOYMENT_SPACE_ID\"] = \"\" # ID of your deployment space to use deployed models" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "## Configure LiteLLM\n", | ||

| "\n", | ||

| "Add the LiteLLM OpikLogger to log traces and steps to Opik:" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "import litellm\n", | ||

| "import os\n", | ||

| "from litellm.integrations.opik.opik import OpikLogger\n", | ||

| "from opik import track\n", | ||

| "from opik.opik_context import get_current_span_data\n", | ||

| "\n", | ||

| "os.environ[\"OPIK_PROJECT_NAME\"] = \"watsonx-integration-demo\"\n", | ||

| "opik_logger = OpikLogger()\n", | ||

| "litellm.callbacks = [opik_logger]" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "## Logging traces\n", | ||

| "\n", | ||

| "Each completion now logs a separate trace to Opik:" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "# litellm.set_verbose=True\n", | ||

| "prompt = \"\"\"\n", | ||

| "Write a short two sentence story about Opik.\n", | ||

| "\"\"\"\n", | ||

| "\n", | ||

| "response = litellm.completion(\n", | ||

| "    model=\"watsonx/ibm/granite-13b-chat-v2\",\n", | ||

| "    messages=[{\"role\": \"user\", \"content\": prompt}],\n", | ||

| ")\n", | ||

| "\n", | ||

| "print(response.choices[0].message.content)" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

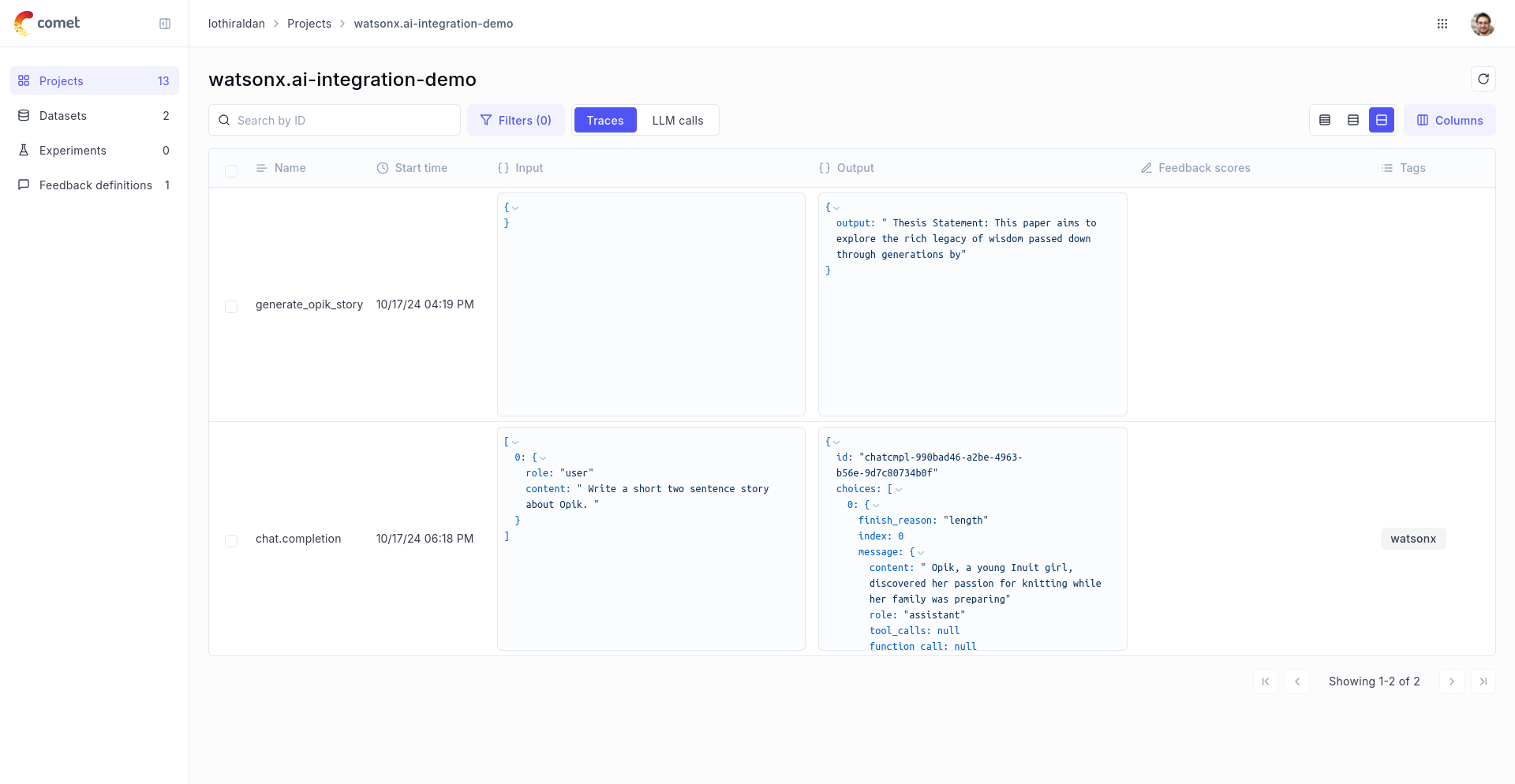

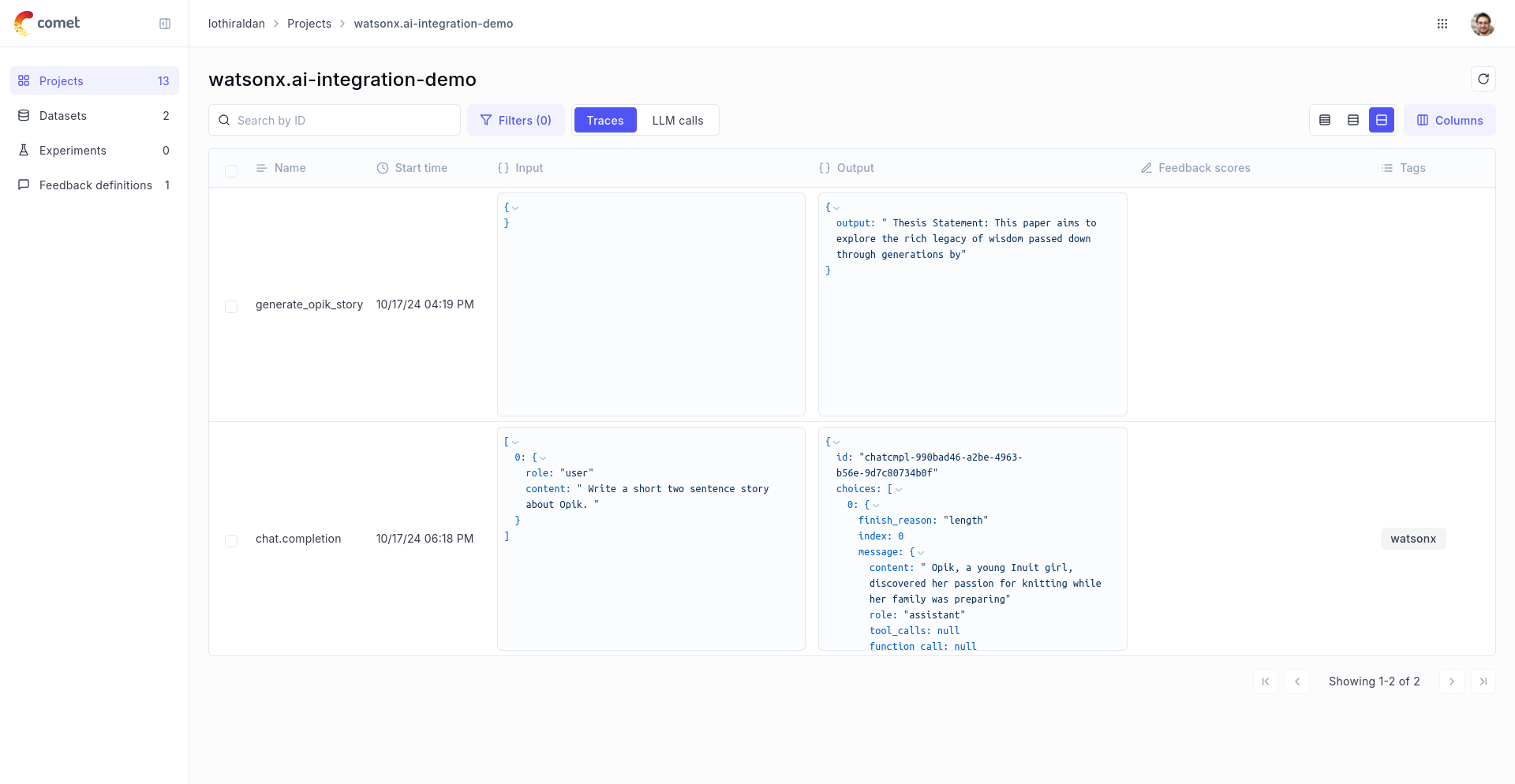

| "The prompt and response messages are automatically logged to Opik and can be viewed in the UI.\n", | ||

| "\n", | ||

| "" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "## Using it with the `track` decorator\n", | ||

| "\n", | ||

| "If you have multiple steps in your LLM pipeline, you can use the `track` decorator to log the traces for each step. If watsonx is called within one of these steps, the LLM call will be associated with that corresponding step:" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "@track\n", | ||

| "def generate_story(prompt):\n", | ||

| "    response = litellm.completion(\n", | ||

| "        model=\"watsonx/ibm/granite-13b-chat-v2\",\n", | ||

| "        messages=[{\"role\": \"user\", \"content\": prompt}],\n", | ||

| "        metadata={\n", | ||

| "            \"opik\": {\n", | ||

| "                \"current_span_data\": get_current_span_data(),\n", | ||

| "            },\n", | ||

| "        },\n", | ||

| "    )\n", | ||

| "    return response.choices[0].message.content\n", | ||

| "\n", | ||

| "\n", | ||

| "@track\n", | ||

| "def generate_topic():\n", | ||

| "    prompt = \"Generate a topic for a story about Opik.\"\n", | ||

| "    response = litellm.completion(\n", | ||

| "        model=\"watsonx/ibm/granite-13b-chat-v2\",\n", | ||

| "        messages=[{\"role\": \"user\", \"content\": prompt}],\n", | ||

| "        metadata={\n", | ||

| "            \"opik\": {\n", | ||

| "                \"current_span_data\": get_current_span_data(),\n", | ||

| "            },\n", | ||

| "        },\n", | ||

| "    )\n", | ||

| "    return response.choices[0].message.content\n", | ||

| "\n", | ||

| "\n", | ||

| "@track\n", | ||

| "def generate_opik_story():\n", | ||

| "    topic = generate_topic()\n", | ||

| "    story = generate_story(topic)\n", | ||

| "    return story\n", | ||

| "\n", | ||

| "\n", | ||

| "generate_opik_story()" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

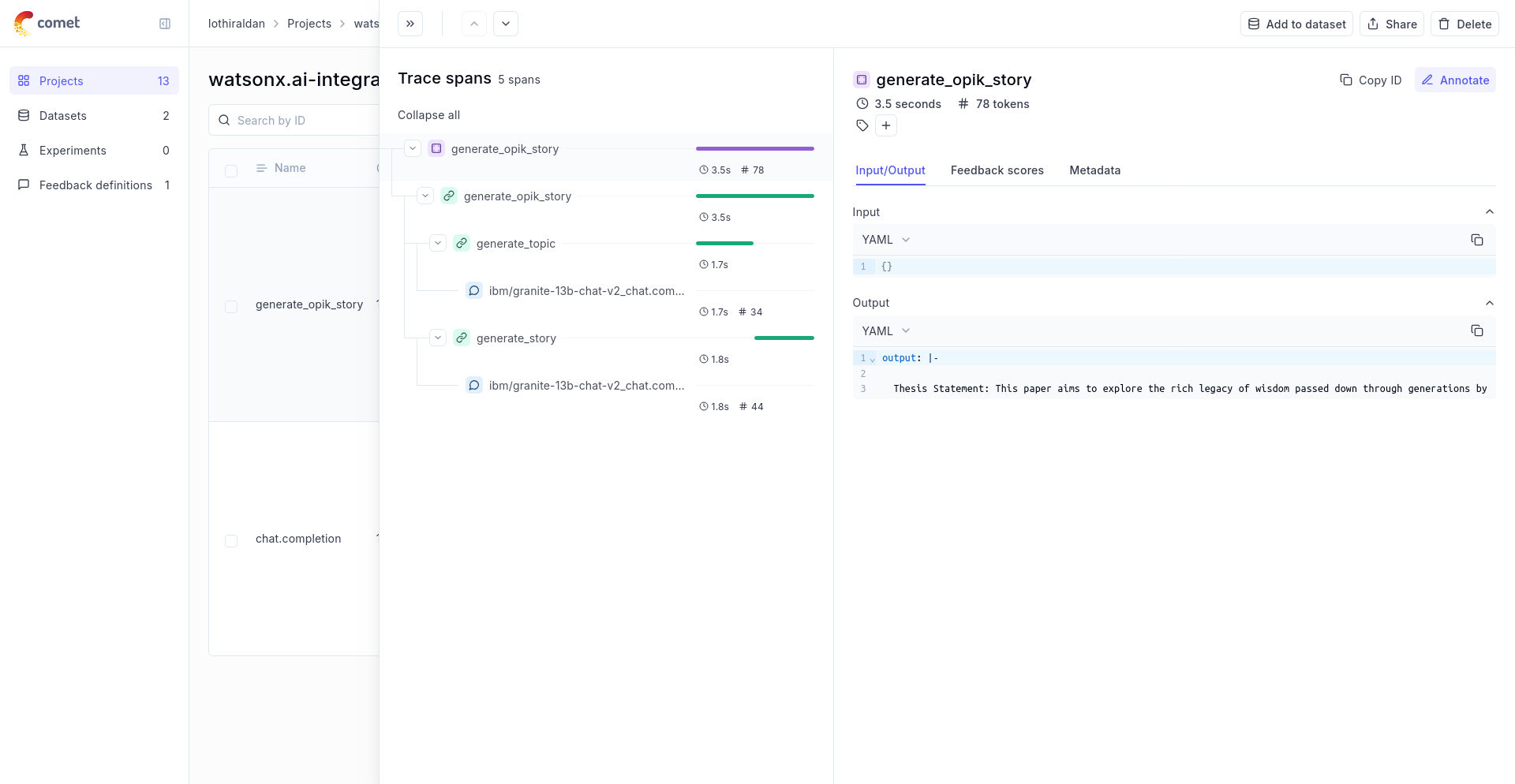

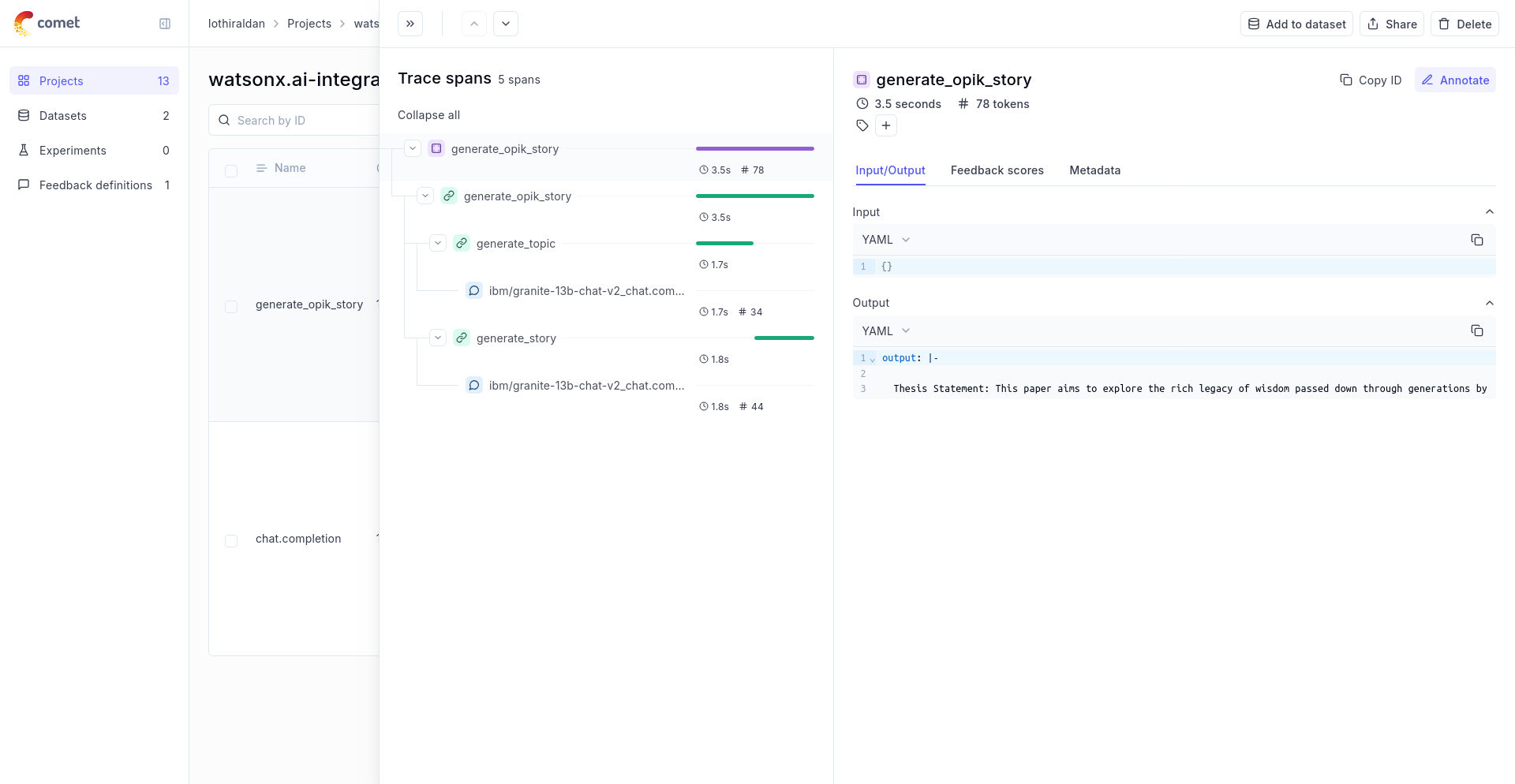

| "The trace can now be viewed in the UI:\n", | ||

| "\n", | ||

| "" | ||

| ] | ||

| } | ||

| ], | ||

| "metadata": { | ||

| "kernelspec": { | ||

| "display_name": "Python 3 (ipykernel)", | ||

| "language": "python", | ||

| "name": "python3" | ||

| }, | ||

| "language_info": { | ||

| "codemirror_mode": { | ||

| "name": "ipython", | ||

| "version": 3 | ||

| }, | ||

| "file_extension": ".py", | ||

| "mimetype": "text/x-python", | ||

| "name": "python", | ||

| "nbconvert_exporter": "python", | ||

| "pygments_lexer": "ipython3", | ||

| "version": "3.11.3" | ||

| } | ||

| }, | ||

| "nbformat": 4, | ||

| "nbformat_minor": 4 | ||

| } |
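Note on the "Preparing our environment" cell in `watsonx.ipynb`: the cell leaves `WATSONX_URL`, `WATSONX_API_KEY`, and `WATSONX_TOKEN` as empty strings, so a reader who runs it unchanged only finds out at the first completion call. A minimal sketch of a pre-flight check, assuming only the rule stated in the cell's comments (the URL is required, plus either the API key or the token). The helper name `check_watsonx_env` is hypothetical and is not part of LiteLLM or Opik:

```python
import os
from typing import Mapping, Optional


def check_watsonx_env(env: Optional[Mapping[str, str]] = None) -> list[str]:
    """Return a list of problems with the watsonx settings; an empty list means OK.

    Hypothetical helper: it mirrors the "(required)" comments in the cookbook
    cell and does not call watsonx or validate the credentials themselves.
    """
    env = os.environ if env is None else env
    problems = []
    # The base URL is always required.
    if not env.get("WATSONX_URL"):
        problems.append("WATSONX_URL is not set")
    # One of the two credentials is enough; empty strings count as unset.
    if not (env.get("WATSONX_API_KEY") or env.get("WATSONX_TOKEN")):
        problems.append("set WATSONX_API_KEY or WATSONX_TOKEN")
    return problems
```

Calling `check_watsonx_env()` right after the environment cell and printing the result would surface the placeholder values before any request is sent.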

127 changes: 127 additions & 0 deletions


apps/opik-documentation/documentation/docs/cookbook/watsonx.md


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,127 @@ | ||

| # Using Opik with watsonx | ||

|

|

||

| Opik integrates with watsonx to provide a simple way to log traces for all watsonx LLM calls. This works for all watsonx models. | ||

|

|

||

| ## Creating an account on Comet.com | ||

|

|

||

| [Comet](https://www.comet.com/site?from=llm&utm_source=opik&utm_medium=colab&utm_content=watsonx&utm_campaign=opik) provides a hosted version of the Opik platform. [Simply create an account](https://www.comet.com/signup?from=llm&utm_source=opik&utm_medium=colab&utm_content=watsonx&utm_campaign=opik) and grab your API key. | ||

|

|

||

| > You can also run the Opik platform locally; see the [installation guide](https://www.comet.com/docs/opik/self-host/overview/?from=llm&utm_source=opik&utm_medium=colab&utm_content=watsonx&utm_campaign=opik) for more information. | ||

|

|

||

| ```python | ||

| %pip install --upgrade opik litellm | ||

| ``` | ||

|

|

||

|

|

||

| ```python | ||

| import opik | ||

|

|

||

| opik.configure(use_local=False) | ||

| ``` | ||

|

|

||

| ## Preparing our environment | ||

|

|

||

| First, we will set up our watsonx API keys. | ||

|

|

||

|

|

||

| ```python | ||

| import os | ||

|

|

||

| os.environ["WATSONX_URL"] = "" # (required) Base URL of your WatsonX instance | ||

| # (required) either one of the following: | ||

| os.environ["WATSONX_API_KEY"] = "" # IBM cloud API key | ||

| os.environ["WATSONX_TOKEN"] = "" # IAM auth token | ||

| # optional - can also be passed as params to completion() or embedding() | ||

| # os.environ["WATSONX_PROJECT_ID"] = "" # Project ID of your WatsonX instance | ||

| # os.environ["WATSONX_DEPLOYMENT_SPACE_ID"] = "" # ID of your deployment space to use deployed models | ||

| ``` | ||

|

|

||

| ## Configure LiteLLM | ||

|

|

||

| Add the LiteLLM OpikLogger to log traces and steps to Opik: | ||

|

|

||

|

|

||

| ```python | ||

| import litellm | ||

| import os | ||

| from litellm.integrations.opik.opik import OpikLogger | ||

| from opik import track | ||

| from opik.opik_context import get_current_span_data | ||

|

|

||

| os.environ["OPIK_PROJECT_NAME"] = "watsonx-integration-demo" | ||

| opik_logger = OpikLogger() | ||

| litellm.callbacks = [opik_logger] | ||

| ``` | ||

|

|

||

| ## Logging traces | ||

|

|

||

| Each completion now logs a separate trace to Opik: | ||

|

|

||

|

|

||

| ```python | ||

| # litellm.set_verbose=True | ||

| prompt = """ | ||

| Write a short two sentence story about Opik. | ||

| """ | ||

|

|

||

| response = litellm.completion( | ||

|     model="watsonx/ibm/granite-13b-chat-v2", | ||

|     messages=[{"role": "user", "content": prompt}], | ||

| ) | ||

|

|

||

| print(response.choices[0].message.content) | ||

| ``` | ||

|

|

||

| The prompt and response messages are automatically logged to Opik and can be viewed in the UI. | ||

|

|

||

|  | ||

|

|

||

| ## Using it with the `track` decorator | ||

|

|

||

| If you have multiple steps in your LLM pipeline, you can use the `track` decorator to log the traces for each step. If watsonx is called within one of these steps, the LLM call will be associated with that corresponding step: | ||

|

|

||

|

|

||

| ```python | ||

| @track | ||

| def generate_story(prompt): | ||

|     response = litellm.completion( | ||

|         model="watsonx/ibm/granite-13b-chat-v2", | ||

|         messages=[{"role": "user", "content": prompt}], | ||

|         metadata={ | ||

|             "opik": { | ||

|                 "current_span_data": get_current_span_data(), | ||

|             }, | ||

|         }, | ||

|     ) | ||

|     return response.choices[0].message.content | ||

|

|

||

|

|

||

| @track | ||

| def generate_topic(): | ||

|     prompt = "Generate a topic for a story about Opik." | ||

|     response = litellm.completion( | ||

|         model="watsonx/ibm/granite-13b-chat-v2", | ||

|         messages=[{"role": "user", "content": prompt}], | ||

|         metadata={ | ||

|             "opik": { | ||

|                 "current_span_data": get_current_span_data(), | ||

|             }, | ||

|         }, | ||

|     ) | ||

|     return response.choices[0].message.content | ||

|

|

||

|

|

||

| @track | ||

| def generate_opik_story(): | ||

|     topic = generate_topic() | ||

|     story = generate_story(topic) | ||

|     return story | ||

|

|

||

|

|

||

| generate_opik_story() | ||

| ``` | ||

|

|

||

| The trace can now be viewed in the UI: | ||

|

|

||

|  |
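Note on the `track` example in `watsonx.md`: `generate_story` and `generate_topic` repeat the same `model`, `messages`, and `metadata` arguments. A minimal sketch of how that shared shape could be factored out, assuming the argument structure shown in the diff is what LiteLLM's Opik callback expects. The helper name `build_completion_kwargs` is hypothetical and is not provided by LiteLLM or Opik:

```python
from typing import Any, Dict


def build_completion_kwargs(
    prompt: str,
    span_data: Any,
    model: str = "watsonx/ibm/granite-13b-chat-v2",
) -> Dict[str, Any]:
    """Build the keyword arguments used by both completion calls in the cookbook.

    Hypothetical helper: `span_data` is whatever get_current_span_data()
    returns inside the tracked function; it is passed through unchanged.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Same nesting as in the cookbook: metadata -> "opik" -> "current_span_data".
        "metadata": {"opik": {"current_span_data": span_data}},
    }
```

Inside a `@track`-decorated function the call would then read `litellm.completion(**build_completion_kwargs(prompt, get_current_span_data()))`. `get_current_span_data()` should still be evaluated inside the decorated function, as in the original example, so that it picks up the span of the step that makes the call.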
