
Problems concerning training data generation

The biggest challenge is gathering appropriate training examples; do not underestimate this prerequisite of neural nets. Composing training examples manually is very tedious, and changing the inputs or outputs usually requires composing or generating completely new data. The natural goal is therefore an automated training scenario that also covers data generation. BWAPI already makes it possible to run StarCraft bots unsupervised. In the details, however, BWAPI falls short in places when it comes to precise and useful information. For example, it is not possible to get immediate feedback on a unit's attack. This wiki page roughly describes the problems faced while implementing an approach to train individual marines in combat situations.

These are the implemented inputs:

- Unit's hit points

- Distance to weakest enemy unit

- Distance to closest enemy unit

- Hit points of weakest enemy unit

- Hit points of closest enemy unit

- Unit is under attack

- Overall friendly unit count

- Overall enemy unit count

- Overall friendly hit points

- Overall enemy hit points

- Close-by friendly count

- Close-by enemy count

- Close-by friendly hit points

- Close-by enemy hit points

- Far away friendly count

- Far away enemy count

- Far away friendly hit points

- Far away enemy hit points
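A network needs these inputs in a fixed order, so that every training example maps the same value onto the same input neuron. The following is a minimal sketch of such a container and its flattening step; the struct and field names (`MarineInputs`, `toVector`, `kInputCount`) are hypothetical and not taken from the original implementation.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical container for the 18 inputs listed above (names are illustrative).
struct MarineInputs {
    double hitPoints = 0;          // unit's own hit points
    double distWeakestEnemy = 0;   // distance to weakest enemy unit
    double distClosestEnemy = 0;   // distance to closest enemy unit
    double hpWeakestEnemy = 0;     // hit points of weakest enemy unit
    double hpClosestEnemy = 0;     // hit points of closest enemy unit
    bool isUnderAttack = false;    // unit is under attack
    double friendlyCount = 0, enemyCount = 0;          // overall counts
    double friendlyHp = 0, enemyHp = 0;                // overall hit points
    double nearFriendlyCount = 0, nearEnemyCount = 0;  // close-by counts
    double nearFriendlyHp = 0, nearEnemyHp = 0;        // close-by hit points
    double farFriendlyCount = 0, farEnemyCount = 0;    // far-away counts
    double farFriendlyHp = 0, farEnemyHp = 0;          // far-away hit points
};

constexpr std::size_t kInputCount = 18;

// Flatten the inputs in a fixed order so every training example lines up
// with the same network input neurons; the boolean becomes 0.0 or 1.0.
std::array<double, kInputCount> toVector(const MarineInputs& in) {
    return {in.hitPoints, in.distWeakestEnemy, in.distClosestEnemy,
            in.hpWeakestEnemy, in.hpClosestEnemy, in.isUnderAttack ? 1.0 : 0.0,
            in.friendlyCount, in.enemyCount, in.friendlyHp, in.enemyHp,
            in.nearFriendlyCount, in.nearEnemyCount, in.nearFriendlyHp, in.nearEnemyHp,
            in.farFriendlyCount, in.farEnemyCount, in.farFriendlyHp, in.farEnemyHp};
}
```

Whether the original feeds raw values or scaled ones is not stated here; scaling (for example dividing hit points by a maximum) is a common choice but would be an addition to this sketch.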

These are the implemented outputs:

- Attack weakest (dynamic duration based on attack animation)

- Attack closest (dynamic duration based on attack animation)

- Move Back (duration: 10 frames)
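The three outputs differ in how long the unit is committed to them. A minimal sketch, assuming the action is chosen as the output neuron with the highest activation (argmax) and that the caller measures the attack animation length itself, for example by polling the unit every frame (BWAPI's `isAttackFrame()` is one candidate). `Action`, `actionDuration` and `selectAction` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical encoding of the three outputs listed above.
enum class Action { AttackWeakest = 0, AttackClosest = 1, MoveBack = 2 };

constexpr int kMoveBackFrames = 10;  // fixed duration of "Move back"

// Frames to wait before the unit makes its next decision. Attack durations
// are dynamic: the caller is assumed to have measured how long the attack
// animation lasted (it varies with distance and orientation).
int actionDuration(Action action, int attackAnimationFrames) {
    if (action == Action::MoveBack) {
        return kMoveBackFrames;
    }
    assert(attackAnimationFrames > 0);
    return attackAnimationFrames;
}

// Assumed selection rule: take the output neuron with the highest activation.
Action selectAction(const std::array<double, 3>& outputs) {
    std::size_t best = 0;
    for (std::size_t i = 1; i < outputs.size(); ++i) {
        if (outputs[i] > outputs[best]) {
            best = i;
        }
    }
    return static_cast<Action>(best);
}
```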

The map consists of 10 friendly and 10 enemy marines. A fitness function determines how good or bad the resulting situation is by comparing the inputs before and after the action was taken (i.e. the immediate outcome of the action). Together, this information makes up one training example, which is used for training right after the match concludes.
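One way to compare the situation before and after an action is to score the change in overall hit points. The sketch below assumes an equally weighted "damage dealt minus damage taken" score; the original weighting is not described, and `StateSummary`, `fitness`, `TrainingExample` and `ExampleBuffer` are hypothetical names. Examples are only buffered during the match and handed over for training once it ends.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Hypothetical minimal subset of the inputs needed for a fitness score.
struct StateSummary {
    double friendlyHp;  // overall friendly hit points
    double enemyHp;     // overall enemy hit points
};

// Immediate-outcome fitness: damage dealt minus damage taken between the
// state before and the state after one action (equal weights are assumed).
double fitness(const StateSummary& before, const StateSummary& after) {
    const double damageDealt = before.enemyHp - after.enemyHp;
    const double damageTaken = before.friendlyHp - after.friendlyHp;
    return damageDealt - damageTaken;
}

struct TrainingExample {
    std::vector<double> inputs;  // input vector observed before the action
    int action;                  // index of the chosen output
    double fitness;              // score of the immediate outcome
};

// Collects examples during the match; training happens after it concludes.
class ExampleBuffer {
public:
    void record(std::vector<double> inputs, int action, double f) {
        examples_.push_back({std::move(inputs), action, f});
    }
    std::vector<TrainingExample> drainAfterMatch() {
        std::vector<TrainingExample> out;
        out.swap(examples_);  // hand over all examples and leave the buffer empty
        return out;
    }
private:
    std::vector<TrainingExample> examples_;
};
```

With 10 marines per side at 40 hit points each, both totals start at 400.0; if an action leaves the friendly total at 394.0 and the enemy total at 388.0, this sketch scores it as 12.0 - 6.0 = 6.0.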

The training focuses on individual units. Suppose one unit simply runs away while all its fellow units deal a lot of damage: the fleeing unit would rate running away as good, because the outcome was bad for the enemy and good for the friendly units. The performance of individual units thus gets completely blurred, resulting in conflicting and unreliable training data. A 1 vs 1 scenario, on the other hand, would mean that most inputs and outputs are of no interest: with only one enemy, the choice between attacking the closest or the weakest unit becomes irrelevant. The fitness function should therefore work only with the relevant information, which here means the unit's attack feedback and the unit's own hit points.
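The blurring can be made concrete by scoring the fleeing unit in two ways. This is a minimal sketch under stated assumptions: the team score uses overall hit point changes, while the individual score uses only the unit's own hit points and whether its own attack landed, with a hypothetical reward constant of 6.0 per landed hit. `OwnOutcome`, `teamFitness` and `individualFitness` are illustrative names, and `attackLanded` presumes exactly the attack feedback that BWAPI makes hard to obtain.

```cpp
#include <cassert>

// What one unit observed about itself across a single action (hypothetical).
struct OwnOutcome {
    double hpBefore;    // own hit points before the action
    double hpAfter;     // own hit points after the action
    bool attackLanded;  // own attack feedback (assumed to be available)
};

// Team view: every unit receives the same score, whatever it did itself.
double teamFitness(double friendlyHpLost, double enemyHpLost) {
    return enemyHpLost - friendlyHpLost;
}

// Individual view: only the unit's own attack feedback and own hit points.
// hitReward is an assumed constant, not a value from the original work.
double individualFitness(const OwnOutcome& o, double hitReward = 6.0) {
    const double reward = o.attackLanded ? hitReward : 0.0;
    return reward - (o.hpBefore - o.hpAfter);
}
```

If the team deals 30 damage and takes 6 while one marine flees untouched, `teamFitness(6.0, 30.0)` gives that marine +24 for running away, whereas `individualFitness` rates the same retreat as neutral (0.0).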

This approach to the fitness function is only applicable to attack actions. While moving, the unit can't deal damage, but it can take damage, so the situation either stays the same or gets worse. A fitness function for moving back could instead be based on the threat close by and far away: if distance to the closest threat is gained, moving back can be rewarded, while staying out of the fight for no reason should be punished. The whole approach is based on immediate actions, so more tactical actions and evaluations should be considered in the future. That, however, requires observing a sequence of actions or a certain time frame. Machine learning work on Go and chess could serve as a role model here.
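A move-back fitness along these lines might look like the sketch below. Several parts are assumptions: the unit counts as threatened when close-by enemy hit points exceed close-by friendly hit points, the far-away threat is left out for brevity, and the penalty for retreating without a threat is an arbitrary 1.0. `RetreatContext` and `moveBackFitness` are hypothetical names.

```cpp
#include <cassert>

// Hypothetical context for judging one "Move back" action.
struct RetreatContext {
    double distClosestBefore;  // distance to closest enemy before moving
    double distClosestAfter;   // distance to closest enemy after moving
    double nearEnemyHp;        // close-by enemy hit points (the threat)
    double nearFriendlyHp;     // close-by friendly hit points
};

// Reward distance gained from the closest threat while threatened; punish
// retreating when there is no local threat. The threat test and the penalty
// value are assumptions, not taken from the original implementation.
double moveBackFitness(const RetreatContext& c, double noThreatPenalty = 1.0) {
    const bool threatened = c.nearEnemyHp > c.nearFriendlyHp;
    if (!threatened) {
        return -noThreatPenalty;  // staying out of the fight for no reason
    }
    return c.distClosestAfter - c.distClosestBefore;  // distance gained (or lost)
}
```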

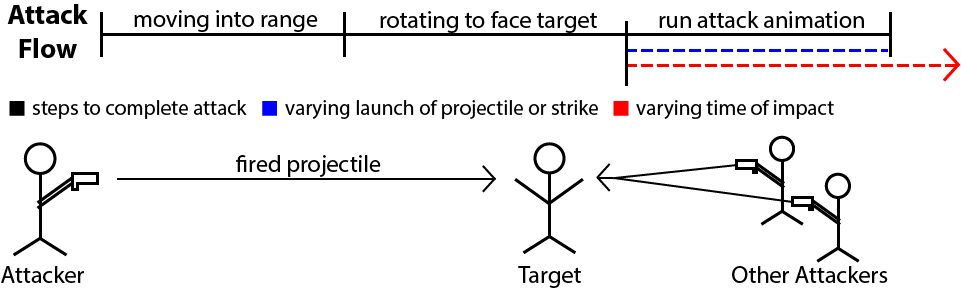

Frame-perfect attack actions can't be implemented, because BWAPI provides too little information about attacks. It only reports whether a unit is under attack, is attacking, and is executing an attack animation. In this context, frame-perfect means that a unit can change its action without interrupting the essential part of the previous one. For example, an attack has succeeded as soon as a strike hits the enemy or a projectile is fired toward it. Right after that moment, a move command could be issued without interrupting the attack. Waiting for the attack animation to end wastes several frames that could be used for other actions, such as moving as part of a kiting strategy.

Since BWAPI doesn't reveal the frame at which a projectile is launched, the impact on the enemy can't be related to its cause. The attacker could inform its target about an incoming attack, but the target can't tell for sure whether the impact came from that attacker, because many other attackers could be blamed for it. Distance and orientation matter a lot and influence the duration of the attack animation, so the launch of a projectile and its impact can't be reliably predicted. This leads to mismatched training data.
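The attribution problem can be sketched as follows, assuming attackers announce the frame at which they started an attack and the target records these announcements. Because the launch frame is unknown, any announced attack within an assumed maximum delay before the observed hit point loss is a candidate, and the impact can only be credited when exactly one attacker qualifies. `PendingAttack`, `attributeImpact` and `maxDelay` are hypothetical; the sketch requires C++17 for `std::optional`.

```cpp
#include <cassert>
#include <optional>
#include <vector>

// Hypothetical record: an attacker informs its target that it started an attack.
struct PendingAttack {
    int attackerId;
    int startFrame;  // frame at which the attack animation started
};

// Try to attribute a hit point loss observed at impactFrame to one attacker.
// Any attack started within [impactFrame - maxDelay, impactFrame] is a
// candidate; maxDelay is an assumed bound, since the real delay depends on
// distance and orientation. Returns an attacker only if the match is unique.
std::optional<int> attributeImpact(const std::vector<PendingAttack>& pending,
                                   int impactFrame, int maxDelay) {
    std::optional<int> found;
    for (const PendingAttack& p : pending) {
        if (p.startFrame > impactFrame || impactFrame - p.startFrame > maxDelay) {
            continue;  // outside the plausible window
        }
        if (found && *found != p.attackerId) {
            return std::nullopt;  // several attackers could be blamed
        }
        found = p.attackerId;
    }
    return found;
}
```

In a 10 vs 10 fight, several marines usually shoot at the same target within a few frames, so this function would mostly return no attacker, which illustrates why such examples end up mismatched or discarded.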