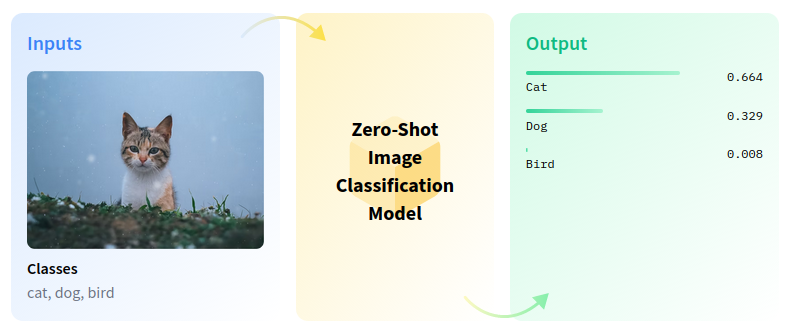

Zero-shot image classification is a computer vision task in which images are classified into one of several classes without training the model on any labeled examples of those classes.
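
CLIP-style zero-shot classification can be sketched in a few lines. The image and each candidate class name are mapped to embedding vectors, and the predicted class is the one whose text embedding is most similar (by cosine similarity) to the image embedding. This is a minimal sketch that assumes the embeddings have already been computed by a model. The vectors below are made-up toy values, and `cosine_similarity` and `zero_shot_classify` are hypothetical helper names, not part of any library.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(image_embedding, class_embeddings):
    """Return the class whose text embedding is closest to the image embedding."""
    scores = {
        label: cosine_similarity(image_embedding, emb)
        for label, emb in class_embeddings.items()
    }
    best = max(scores, key=scores.get)
    return best, scores


# Hypothetical toy embeddings; a real model produces vectors with hundreds of dimensions.
class_embeddings = {
    "cat": [0.9, 0.1, 0.0],
    "dog": [0.1, 0.9, 0.0],
    "car": [0.0, 0.1, 0.9],
}
image_embedding = [0.8, 0.3, 0.1]

label, scores = zero_shot_classify(image_embedding, class_embeddings)
print(label)  # cat
```

Because the classes are described only by their embeddings, adding a new class means adding a new text embedding. No retraining is involved.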

In this tutorial, we will use the OpenAI CLIP model to perform zero-shot image classification.

This notebook demonstrates how to perform zero-shot image classification using the open-source CLIP model. CLIP is a multi-modal vision and language model. It can be instructed in natural language to predict the most relevant text snippet, given an image, without directly optimizing for the task. According to the paper, CLIP matches the performance of the original ResNet50 on ImageNet “zero-shot” without using any of the original 1.28M labeled examples, overcoming several major challenges in computer vision. You can find more information about this model in the research paper, OpenAI blog, model card and GitHub repository.
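
To predict "the most relevant text snippet", the class names are first wrapped in natural-language prompts. The similarity between the image and each prompt is then turned into a probability distribution. The sketch below assumes the prompt template `"a photo of a {}."`, which follows the pattern described in the CLIP paper. It also assumes a `logit_scale` of 100: CLIP learns this temperature factor during training and caps it at 100. The labels and similarity values are hypothetical, and the helper functions are illustrative rather than a library API.

```python
import math

# Prompt template modeled on the "a photo of a {label}." pattern from the CLIP paper.
PROMPT_TEMPLATE = "a photo of a {}."


def build_prompts(labels):
    """Turn bare class names into natural-language text snippets."""
    return [PROMPT_TEMPLATE.format(label) for label in labels]


def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def text_probabilities(similarities, logit_scale=100.0):
    """Scale cosine similarities by a temperature factor, then normalize.

    logit_scale=100.0 is an assumed value; the real model learns this scale.
    """
    return softmax([logit_scale * s for s in similarities])


labels = ["cat", "dog", "car"]
prompts = build_prompts(labels)
print(prompts)  # ['a photo of a cat.', 'a photo of a dog.', 'a photo of a car.']

# Hypothetical cosine similarities between one image and each prompt embedding.
similarities = [0.31, 0.24, 0.12]
probs = text_probabilities(similarities)
for prompt, p in zip(prompts, probs):
    print(f"{prompt:<20} {p:.3f}")
```

The large scale factor sharpens small differences in cosine similarity into a confident distribution. This is why raw similarities that differ by only a few hundredths can still yield a clear top prediction.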

The notebook contains the following steps:

- Download model

- Instantiate PyTorch model

- Export ONNX model and convert to OpenVINO IR using the Model Optimizer tool

- Run CLIP with OpenVINO

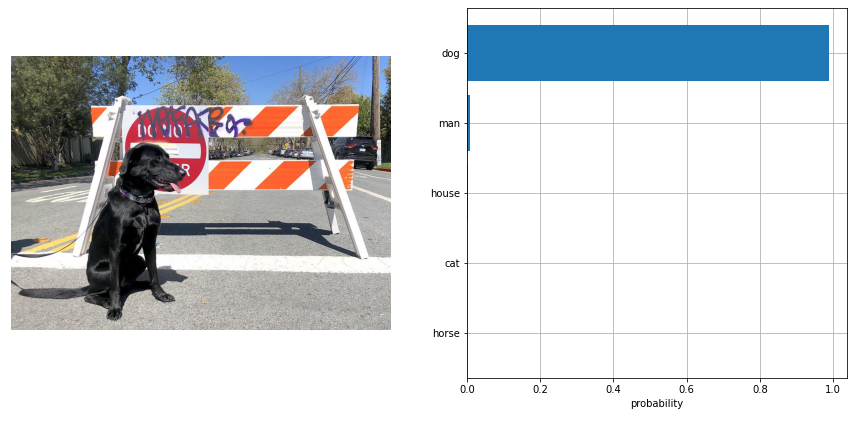

The image below shows an example of notebook work

If you have not installed all required dependencies, follow the Installation Guide.