This template shows how to use Terraform to manage Databricks workspace configurations and objects (such as clusters and policies). It tries to balance configuration-code complexity against flexibility: the goal is a simple way to manage workspace configurations and objects that still leaves room for customization and governance. We assume you have already deployed a workspace; if not, refer to the parallel folder aws_databricks_modular_privatelink.

Specifically, you can find examples here for:

- Provider configurations for multiple workspaces

- Configure IP Access List for multiple workspaces

- Workspace Object Management

- Cluster Policy Management

- Workspace users and groups

To manage multiple Databricks workspaces from the same Terraform project (folder), you must specify a separate provider configuration for each workspace. Examples can be found in providers.tf.
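As a minimal sketch of what such provider configurations can look like: the `ws1` alias, the `pat_ws_1` / `pat_ws_2` variables, and the provider version come from this template, while the `ws2` alias and the host URLs are placeholders assumed for illustration. The actual providers.tf may differ.

```hcl
terraform {
  required_providers {
    databricks = {
      source  = "databricks/databricks"
      version = "1.3.1"
    }
  }
}

# Personal access tokens, one per workspace (listed in the inputs table).
variable "pat_ws_1" {
  type      = string
  sensitive = true
}

variable "pat_ws_2" {
  type      = string
  sensitive = true
}

# One aliased provider configuration per workspace.
provider "databricks" {
  alias = "ws1"
  host  = "https://<workspace-1-url>" # placeholder, replace with your workspace URL
  token = var.pat_ws_1
}

provider "databricks" {
  alias = "ws2" # assumed alias name for the second workspace
  host  = "https://<workspace-2-url>" # placeholder
  token = var.pat_ws_2
}
```

Marking the token variables as `sensitive` keeps their values out of plan output; they can be supplied through a `.tfvars` file or `TF_VAR_pat_ws_1` style environment variables.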

When you create resources or modules, you must explicitly pass the provider to each module instance so that Terraform knows which workspace host to deploy the resources into. See this tutorial for details: https://www.terraform.io/language/modules/develop/providers
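For the provider to be passed in cleanly, the child module should declare which provider it expects, and root-level resources select a workspace with the `provider` meta-argument. The sketch below assumes a hypothetical `versions.tf` inside the module and an illustrative group name; neither is confirmed by this template.

```hcl
# modules/ip_access_list/versions.tf (hypothetical file name)
# Without this block Terraform would look for a "hashicorp/databricks"
# provider inside the module.
terraform {
  required_providers {
    databricks = {
      source = "databricks/databricks"
    }
  }
}
```

```hcl
# Root module: a resource outside any module picks its workspace
# through the provider meta-argument.
resource "databricks_group" "this" {
  provider     = databricks.ws1
  display_name = "example-group" # illustrative name
}
```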

This example shows how to patch the IP access lists of multiple workspaces using multiple JSON files as input. Each JSON file contains the block list and allow list for one workspace. We use the exact JSON files generated by the workspace deployment template in this repo; see this [link] for more details.
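One way to turn those JSON files into the `local.allow_lists_map` and `local.block_lists_map` values used by the module calls is sketched below. The file names (`workspace_1.json`, `workspace_2.json`) and the JSON keys (`allow_list`, `block_list`) are assumptions; check the files generated under ../aws_databricks_modular_privatelink/artifacts/ for the real names and structure.

```hcl
locals {
  # Hypothetical file names; use the files generated by the deployment template.
  artifacts_dir = "${path.module}/../aws_databricks_modular_privatelink/artifacts"

  workspace_1_lists = jsondecode(file("${local.artifacts_dir}/workspace_1.json"))
  workspace_2_lists = jsondecode(file("${local.artifacts_dir}/workspace_2.json"))

  # Assumed JSON keys: "allow_list" and "block_list", each a list of IPs or CIDRs.
  allow_lists_map = {
    workspace_1 = local.workspace_1_lists.allow_list
    workspace_2 = local.workspace_2_lists.allow_list
  }

  block_lists_map = {
    workspace_1 = local.workspace_1_lists.block_list
    workspace_2 = local.workspace_2_lists.block_list
  }
}
```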

Assume you have deployed 2 workspaces using the aws_databricks_modular_privatelink template. You will find 2 generated JSON files under ../aws_databricks_modular_privatelink/artifacts/, and you want to patch the IP access list for both workspaces. You can refer to main.tf and add or remove module instances as needed. For each workspace, we recommend using a dedicated module block for configuration, like the one below:

```hcl
module "ip_access_list_workspace_1" {
  providers = {
    databricks = databricks.ws1 // manually adding each workspace's module
  }

  source           = "./modules/ip_access_list"
  allow_list       = local.allow_lists_map.workspace_1
  block_list       = local.block_lists_map.workspace_1
  allow_list_label = "Allow List for workspace_1"
  deny_list_label  = "Deny List for workspace_1"
}
```

Note that we also pass in the labels; this prevents errors when destroying IP access lists. This setup has been tested to work for arbitrary re-patching and destroying of IP access lists across multiple workspaces.
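For orientation, a module with these four inputs could be implemented roughly as follows. This is a sketch under assumptions, not the actual contents of modules/ip_access_list: the resource names are illustrative, and only the variable names are taken from the module call.

```hcl
variable "allow_list" { type = list(string) }
variable "block_list" { type = list(string) }
variable "allow_list_label" { type = string }
variable "deny_list_label" { type = string }

# The IP access list feature has to be enabled on the workspace first.
resource "databricks_workspace_conf" "this" {
  custom_config = {
    "enableIpAccessLists" = true
  }
}

resource "databricks_ip_access_list" "allow" {
  label        = var.allow_list_label
  list_type    = "ALLOW"
  ip_addresses = var.allow_list
  depends_on   = [databricks_workspace_conf.this]
}

resource "databricks_ip_access_list" "block" {
  label        = var.deny_list_label
  list_type    = "BLOCK"
  ip_addresses = var.block_list
  depends_on   = [databricks_workspace_conf.this]
}
```

The explicit `depends_on` makes Terraform enable the feature before creating the lists and remove the lists before disabling it on destroy.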

About the host machine's IP: the generated JSON file automatically includes the public IP of the host (the machine that runs this Terraform script) in the allow list. This is required by the IP access list feature.

clusters.tf shows how to create a cluster from Terraform. You can also create other objects such as jobs, policies, permissions, users, and groups.
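A small cluster definition might look like the sketch below. The names `databricks_cluster.tiny`, `databricks_spark_version.latest_lts`, and the `ws1` provider alias appear in this template; the node type lookup, the cluster name, and the sizing values are assumptions for illustration.

```hcl
# Latest long-term-support runtime available in the workspace.
data "databricks_spark_version" "latest_lts" {
  provider          = databricks.ws1
  long_term_support = true
}

# Assumption: pick the smallest node type with local disk instead of hardcoding one.
data "databricks_node_type" "smallest" {
  provider   = databricks.ws1
  local_disk = true
}

resource "databricks_cluster" "tiny" {
  provider                = databricks.ws1
  cluster_name            = "tiny" # illustrative name
  spark_version           = data.databricks_spark_version.latest_lts.id
  node_type_id            = data.databricks_node_type.smallest.id
  num_workers             = 1
  autotermination_minutes = 10
}
```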

We provide a base policy module under modules/base_policy. With it you can supply your own custom rules to the policy and assign permission to use the policy to different groups.
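The general pattern behind such a module can be sketched as follows: merge a set of default rules with caller-supplied overrides, create the policy, and grant a group `CAN_USE` on it. All variable names and default rules here are hypothetical; the real module's inputs may differ.

```hcl
variable "policy_name" {
  type = string
}

variable "group_name" {
  type        = string
  description = "Group allowed to use the policy"
}

variable "policy_overrides" {
  type        = any
  default     = {}
  description = "Custom rules merged on top of the defaults"
}

locals {
  # Illustrative default rule; callers can replace or extend it.
  default_policy = {
    "autotermination_minutes" = {
      "type"   = "fixed"
      "value"  = 20
      "hidden" = true
    }
  }
}

resource "databricks_cluster_policy" "this" {
  name       = var.policy_name
  definition = jsonencode(merge(local.default_policy, var.policy_overrides))
}

resource "databricks_permissions" "can_use_policy" {
  cluster_policy_id = databricks_cluster_policy.this.id

  access_control {
    group_name       = var.group_name
    permission_level = "CAN_USE"
  }
}
```

Because `merge` is shallow, an override with the same key replaces the whole default rule rather than individual fields inside it.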

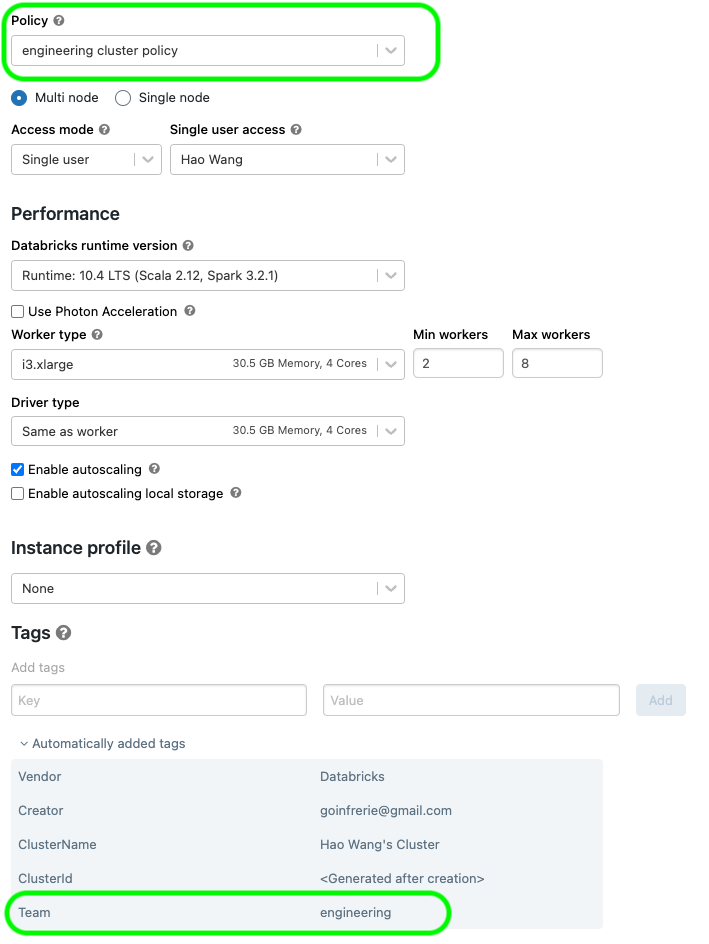

Within the JSON definition of a policy you can do things like tag enforcement; read this for details: Tagging from cluster policy

By defining a rule like the one below in the cluster policy JSON, you can enforce default tags from the policy:

```hcl
"custom_tags.Team" : {
  "type" : "fixed",
  "value" : var.team
}
```

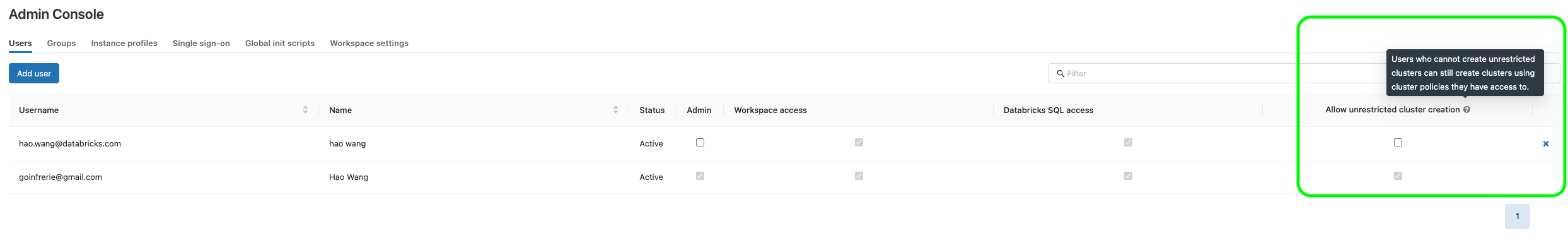

By default, ordinary (non-admin) users cannot create unrestricted clusters. If they are allowed to create clusters, they can only use the policies assigned to them, which gives you strict control over cluster configurations across different groups. See below for an example of an ordinary user created via Terraform.

The process is: provision ordinary users -> assign users to groups -> grant groups permission to use specific policies only -> the groups can only create clusters using the assigned policies.

You can manage users and groups inside Terraform. Examples are given in main.tf. Note that with Unity Catalog you can also have account-level users and groups; the example here is at the workspace level.
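The first two steps of that process (provision a user, add the user to a group) can be sketched at workspace level as below. The resource names match the resources table of this template; the user name, the group display name, and the `allow_cluster_create` setting are placeholders chosen for illustration.

```hcl
resource "databricks_user" "user2" {
  provider  = databricks.ws1
  user_name = "user2@example.com" # placeholder
}

resource "databricks_group" "this" {
  provider     = databricks.ws1
  display_name = "engineering" # hypothetical group name

  # Members cannot create unrestricted clusters; they rely on assigned policies.
  allow_cluster_create = false
}

resource "databricks_group_member" "vip_member" {
  provider  = databricks.ws1
  group_id  = databricks_group.this.id
  member_id = databricks_user.user2.id
}
```

The group's display name is then what a policy permission (`CAN_USE`) would reference, so that only members of this group can create clusters with that policy.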

No requirements.

| Name | Version |
|---|---|
| databricks | 1.3.1 |
| databricks.ws1 | 1.3.1 |

| Name | Source | Version |
|---|---|---|
| engineering_compute_policy | ./modules/base_policy | n/a |
| ip_access_list_workspace_1 | ./modules/ip_access_list | n/a |
| ip_access_list_workspace_2 | ./modules/ip_access_list | n/a |

| Name | Type |
|---|---|
| databricks_cluster.tiny | resource |
| databricks_group.this | resource |
| databricks_group_member.vip_member | resource |
| databricks_user.user2 | resource |
| databricks_spark_version.latest_lts | data source |
| databricks_user.this | data source |

| Name | Description | Type | Default | Required |
|---|---|---|---|---|
| pat_ws_1 | n/a | string | n/a | yes |
| pat_ws_2 | n/a | string | n/a | yes |

| Name | Description |
|---|---|
| all_allow_lists_patched | n/a |
| all_block_lists_patched | n/a |
| sample_cluster_id | n/a |