-

Notifications

You must be signed in to change notification settings - Fork 31

ConcurrentLru

ConcurrentLru is a thread-safe bounded size pseudo LRU. This page describes how to use the ConcurrentLru, and how it is implemented.

ConcurrentLru is intended to be used as a drop in replacement for ConcurrentDictionary, but with the benefit of bounded size based on an LRU eviction policy.

This code sample illustrates how to create an LRU then get/remove/update items:

int capacity = 666;

var lru = new ConcurrentLru<int, SomeItem>(capacity);

// Get

bool success1 = lru.TryGet(1, out var value);

var value1 = lru.GetOrAdd(1, (k) => new SomeItem(k));

var value2 = await lru.GetOrAddAsync(0, (k) => Task.FromResult(new SomeItem(k)));

// Remove

bool success2 = lru.TryRemove(1);

// Update

var item = new SomeItem(1);

bool success3 = lru.TryUpdate(1, item);

lru.AddOrUpdate(1, item);The ConcurrentLru algorithm is a pseudo LRU and gives approximate LRU order - it trades exact LRU sequence for pseudo sequence to give the best possible performance when accessed concurrently by multiple threads.

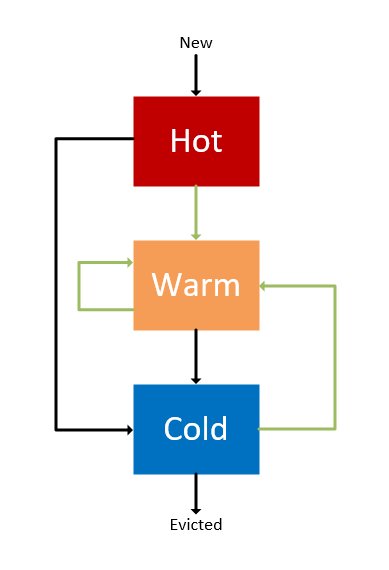

Internally, the ConcurrentLru item order is determined by three queues, similar to the Segmented LRU in memcached. ConcurrentLru is a little simpler, since it has a single item activity flag and no background thread to maintain the queue overflow. Instead queue maintenance is amortized as part of each get operation, and has very low overhead. Pure reads do not alter item order, so continuous high hit rate on the same 'hot' item is very close in performance to a ConcurrentDictionary lookup.

Items flow through the queues like this resulting in pseudo LRU order:

When an item is fetched, it is first added to the hot queue. When the hot queue is full and an item is fetched, the last item in the hot queue is moved to either warm or cold depending on whether it has been accessed since it was added. The same process is followed for the warm and cold queues. Each time an item is fetched, it is marked as touched. When the queues are cycled, touched items in the warm queue and cold queues are moved back to the head of warm. Untouched items in warm are moved to cold. Untouched items in cold are evicted.

No items are discarded until capacity is reached. This makes it straightforward to reason about maximum item count/memory usage, but remember that items will not be released if they are not accessed - no item is evicted until the number of unique items fetched exceeds capacity, at which point items are cycled through the queues until evicted. Therefore no active item in ConcurrentLru is subject to Garbage Collection.

TotalCapacity = HotCapacity + WarmCapacity + ColdCapacity

If there are a mixture of warm and cold items, the number of items present in ConcurrentLRU will be the same as the capacity argument provided to the constructor (each queue is bounded to approximately capacity/3). If no item is ever fetched before being evicted, the number of items present in ConcurrentLRU will be approximately 2 * (capacity/3). See 'Scanning' below for one possible explanation of how this might occur.

A scan is a sequence of one-time access requests. LRUs perform badly when presented with a sequential scan of every key when the number of keys is larger than the size of the cache.

The warm queue in ConcurrentLru provides some resistance to scanning when mixed into something like a Zipf distributed access pattern. However, if the access pattern is a pure sequential scan, and the scan count is greater than 2 * (capacity/3), cache miss rate will be 100%.

This is because the items are evicted before they are fetched. Below is a worked example to illustrate how this happens step by step.

Let's say we create a ConcurrentLru with capacity = 3, and fetch 3 keys in sequence twice in a row.

var lru = new ConcurrentLru<int, int>(3);

// sequential pass 1

for (int i = 0; i < 3; i++)

{

lru.GetOrAdd(i, k => k);

}

// sequential pass 2

for (int i = 0; i < 3; i++)

{

lru.GetOrAdd(i, k => k);

}Initially, the hot (H), warm (W) and cold (C) queues are empty and each has a capacity of 1 item:

H[], W[], C[]

On the first sequential pass the internal state of the queues is as follows at each step:

i = 0 H[0], W[], C[]

i = 1 H[1], W[], C[0]

i = 2 H[2], W[], C[1]

Note that item 0 was evicted when fetching item 2. On the second sequential pass there is no step where the LRU already contains the key that is requested:

i = 0 H[0], W[], C[2]

i = 1 H[1], W[], C[0]

i = 2 H[2], W[], C[1]

When the capacity is greater the same problem still occurs when all keys are fetched in an identical sequence - fetching new items pushes the old items sequentially through the hot and cold queues until they are evicted. The same problem affects the classic implementation of LRU based on linked list order when the number of scanned items is equal to the LRU size, so this is a general weakness of LRU algorithms.

In most practical applications, a sequential scan is not the primary access pattern, so this is not a significant limitation. MemoryCache given the same access pattern will likely load all values into memory. This results in a different and potentially worse problem: unbounded memory growth and potential failure due to out of memory.