Analyse Uber SDC accident #16

Comments

|

It would be great if we could also get full datasets from different SDC prototypes (including those from Udacity, Waterloo, MIT and other universities) driving in similar conditions, to see whether current sensor technology is enough (I assume it is) to detect a moving target (a person with a bike) at 50m while driving at 60km/h, then notify the safety driver that an obstacle is in range (FCW), automatically switch to long-range (high) beams so the long-range cameras can see better, and slow down while approaching the target to increase the chances of stopping or avoiding the impact. We should also look at how recent ADAS systems behave in those conditions, in Tesla, Volkswagen, etc. If anyone has access to this kind of car (SDC or advanced ADAS), please share your investigations and tests. This is the minimum that should have happened in the accident scenario, and it would be good to see how cars (as many as possible) with this feature activated would have behaved: Forward Collision Warning (FCW) Systems "However, if an obstacle like a pedestrian or vehicle approaches quickly from the side, for example, the FCW system will have less time to assess the situation and therefore a driver relying exclusively on this safety feature to provide a warning of a collision will have less time to react."

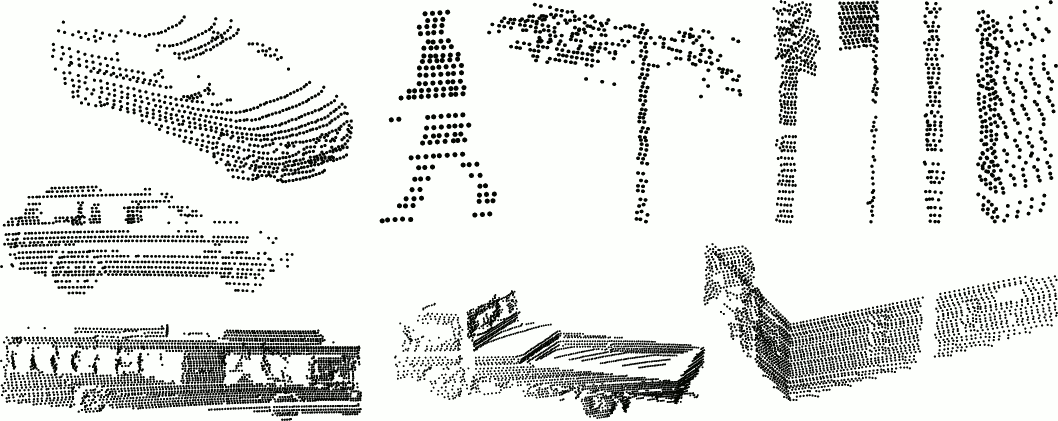

A nice SDC-related dataset from a Velodyne HDL 64E S2 LIDAR: Project page: LIDAR scans: Videos: Let's see how visible a person (or similarly shaped object) is at around 50m and beyond.

|

To make it easier for everyone to understand the accident scenario, it would be good to produce videos like these from real and simulated LIDAR scans: Virtual scan of a pedestrian with a bicycle

|

The blog entry to this video is here: http://www.blensor.org/blog_entry_20180323.html |

|

See the comments on this video; it looks like the Volvo XC90 has the best illumination of the 3 cars tested: Volvo XC90, MB GLC & Audi Q7, night drive. We'll need to find out whether the Uber car had active/dynamic beam mode on, which should have improved the illumination range a lot.

|

@mgschwan would it be possible to convert some of the scans from the 2rt3d dataset above so we can view them in your tool? It would also be good to have similar videos with simulations at 25m and 50m for easy comparison. Please add a bounding box with dimensions around the person+bike so we get a better feel for the scale and can compare the point clouds more easily.

|

@mslavescu the data is generated by our sensor simulation software. It can export PCD point cloud data, which can be imported into any software that reads PCD files. The visualization was done with Blender itself; no specialized tool was used. The colored point cloud was generated with pcl_viewer from the Point Cloud Library. I can do a scan at 25m and 50m, no problem, but I was wondering whether there is more information about the scene to make it a bit closer to reality?

|

|

This shows how bad the video from the Uber dash cam is; here it is compared with another video taken at the same spot on the road in similar night conditions:

|

@mgschwan here is a picture of the Uber crash location. I added the Google StreetView link to the issue description:

National Transportation Safety Board Office of Public Affairs (NTSB) current report (3/21/2018): https://www.ntsb.gov/news/press-releases/Pages/NR20180320.aspx Developments on Tuesday include:

Velodyne HDL 64E S2 dataset (contains 152 pedestrians and 8 bicycles): http://www-personal.acfr.usyd.edu.au/a.quadros/objects4.html This object dataset was collected from the robot Shrimp (left), which has a Velodyne LIDAR. It spins around at 20Hz to produce a dynamic 3D point cloud of the scene. The dataset consists of 631 object instances segmented from Velodyne scans of the Sydney CBD. A few instances are shown below.

VeloView: Performs real-time visualization and processing of live captured 3D LiDAR data from Velodyne's HDL sensors (HDL-64E, HDL-32E, VLP-32, VLP-16, Puck, Puck Lite, Puck HiRes). An introduction to the features of VeloView is available as a video: https://www.paraview.org/Wiki/VeloView Code and datasets:

|

Velodyne sample datasets (including from HDL 64E) |

The Ford Campus Vision and Lidar Data Set: http://robots.engin.umich.edu/SoftwareData/Ford The vehicle is outfitted with a professional (Applanix POS LV) and consumer (Xsens MTI-G) Inertial Measuring Unit (IMU), a Velodyne HDL 64E 3D-lidar scanner, two push-broom forward looking Riegl lidars, and a Point Grey Ladybug3 omnidirectional camera system. Current data sets Paper: Videos:

The University of Michigan North Campus Long-Term Vision and LIDAR Dataset (contains pedestrians too): http://robots.engin.umich.edu/nclt/ Sensors: Velodyne HDL-32E LIDAR Paper: Video:

|

Video with 2 cameras: Here is a review of the camera used: It has a 1/3" 3 Mpix Aptina CMOS sensor with 2.0V/Lux-sec Low Light Sensitivity |

|

I now made a more extensive simulation |

|

To model this accident, you would want to consider the following: a) Velodyne range starts at a little over 100m depending on reflectivity. At that point there is some curve to the road but the left lanes should be visible. |

|

Thanks @bradtem and @mgschwan! If they used the 64E, I think the full scan rate is 20Hz. We can use simulated data for now; hopefully they will release the raw data at some point. Besides braking, I think that in this situation turning a bit to the left would have completely avoided the impact, which happened on the right side of the car while the pedestrian was moving towards the right side. A human driver may have reacted differently and turned right instead, but software should be able to calculate the optimum path considering all the surrounding objects (static or mobile). We'll need to find an accurate way to simulate different scenarios. The CARLA simulator may be an option, though I'm not sure how good its car model and physics engine are. If you know better options (open source if possible), please list them here.

|

I think the 20 Hz is only available in the latest revision of the HDL-64e, please correct me if I am wrong. |

|

HDL-64E S2 from 2010 has a 5-15Hz scan rate: HDL-64E S3 from 2013 has a 5-20Hz scan rate: Not sure which one was mounted on the Uber car, or what scan rate they were running it at. @mgschwan could you please do a video at 15Hz and 20Hz as well?

|

I did a simulation at 20Hz, which coarsens the angular resolution from the default 0.1728 degrees (at 10Hz) to 0.3456 degrees. At a distance of 50 meters this makes a difference; at 25 meters it is still quite noticeable, but there are definitely enough points to detect the target. At 12.5 meters there are enough points to discern the bike from the person.
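
To put rough numbers on those two angular resolutions, here is a minimal sketch that converts an azimuth step into the lateral gap between neighbouring returns of a single laser, and into an approximate count of returns across a target. The 1.7 m target width is an assumed side-on bicycle length, not a measured value, and the count ignores vertical beam spacing, occlusion and reflectivity.

```python
import math

def azimuth_spacing_m(distance_m, resolution_deg):
    """Lateral gap between adjacent returns of one laser at a given range."""
    return 2.0 * distance_m * math.tan(math.radians(resolution_deg) / 2.0)

def returns_across(target_width_m, distance_m, resolution_deg):
    """Rough number of returns one laser places across a target facing the sensor."""
    return int(target_width_m / azimuth_spacing_m(distance_m, resolution_deg)) + 1

TARGET_WIDTH_M = 1.7  # assumption: side-on bicycle length, for illustration only

# Angular resolutions quoted above for 10Hz and 20Hz scan rates.
for rate_hz, res_deg in ((10, 0.1728), (20, 0.3456)):
    for distance_m in (50.0, 25.0, 12.5):
        gap = azimuth_spacing_m(distance_m, res_deg)
        hits = returns_across(TARGET_WIDTH_M, distance_m, res_deg)
        print(f"{rate_hz}Hz, {distance_m}m: gap {gap:.3f}m, about {hits} returns per laser")
```

Doubling the scan rate doubles the gap at every range, so the per-laser count across the same target roughly halves.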

|

Thanks @mgschwan! How does BlenSor compare with the CARLA LIDAR sensor, which seems to support at least the HDL-32E (since yesterday): This is the HDL 64E S3 manual: And here is the list of angular resolutions at different scan rates supported by the HDL 64E S3 (at least since 2013): An interesting discussion about the angular resolution of LIDAR: LIDAR also has limitations on angular resolution just as a function of how the sensor works. It's entirely possible that the size of the person/bike on LIDAR was just too small until it was too late to stop.

|

Comparison between Velodyne Models - VLP-16, HDL-32, HDL-64: VLS-128 seems to be much better but it was just released (last year), I couldn't find the manual or specs, but these articles are representative: Velodyne’s latest LIDAR lets driverless cars handle high-speed situations 128 Lasers on the Car Go Round and Round: David Hall on Velodyne’s New Sensor |

|

A proper recreation should also involve the slight upward grade of the road, which may affect the line density on the target. Scans at 25 meters are interesting but not too valuable, in that this is the stopping distance at 40mph, and so an obstacle must be clearly visible well before that. In fact, it is generally desired to begin braking even earlier to avoid the need for a hard full brake. This makes 50m a good distance to consider. At 10Hz, you get one sweep every 1.7m, and you want a few sweeps for solid perception. As such, early detection by 35m is good and 50m is even better.
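
The two figures in that comment can be checked with a short sketch. The deceleration of 6.4 m/s² is an assumption picked because it roughly reproduces the 25m stopping distance at 40mph; it is not a measured value for the car involved, and reaction time is deliberately left out.

```python
MPH_TO_MS = 0.44704   # exact conversion factor
KMH_TO_MS = 1.0 / 3.6

def metres_per_sweep(speed_ms, scan_rate_hz):
    """Distance the car travels between two consecutive LIDAR sweeps."""
    return speed_ms / scan_rate_hz

def braking_distance_m(speed_ms, decel_ms2):
    """Distance needed to stop from speed_ms at constant deceleration."""
    return speed_ms ** 2 / (2.0 * decel_ms2)

ASSUMED_DECEL_MS2 = 6.4  # assumption: hard braking on dry pavement

print(f"60km/h at 10Hz: {metres_per_sweep(60 * KMH_TO_MS, 10):.2f}m per sweep")
print(f"40mph at 10Hz: {metres_per_sweep(40 * MPH_TO_MS, 10):.2f}m per sweep")
print(f"40mph stop: {braking_distance_m(40 * MPH_TO_MS, ASSUMED_DECEL_MS2):.1f}m")
```

The 1.7m per sweep quoted above matches 60km/h more closely than 40mph; the difference between the two speeds is about 0.1m per sweep.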

|

This is a nice video with DARPA 2007 challenge data: Visualization of LIDAR data. @mgschwan how can we generate a 360° video with LIDAR point clouds (colored by distance) from car level in BlenSor? Even live, with possible interaction, to be viewed in VR systems (Oculus, Vive or Google Cardboard-like). @bradtem to summarise a few points:

This last point makes it very important to have adjustable beams: use them at long range, then reduce the range/brightness and lower the angle when getting closer to an oncoming car, in such a way that the combined beams would be enough. The same applies to animals, especially once very quiet electric cars are deployed, or when the wind covers the car noise. We also need elevated long-range beams to see stop signs and other traffic signs.

|

Yes, you have 3 seconds to impact from 50m. However, generally impact is not your goal! To avoid impact, you must hard brake before 25m. To avoid hard braking it would be nice to start much earlier, like 40m. Which is fine, you get 6 sweeps at 10Hz from 50m to 40m, and then you actuate the brakes. However, for the pure emergency situation, you can consider starting at 35.5m, get 4 sweeps on your way to 25m, and then hard brake on dry pavement. So with a 400ms perception/analysis time, you really want to perceive before 36m.
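
A minimal sketch of that timing budget, assuming a constant 60km/h (the speed from the issue description) and a 10Hz scan rate. It only covers the distance, time and sweep arithmetic; it does not model brake actuation delay or any safety margin, so it will not reproduce the 36m figure exactly.

```python
KMH_TO_MS = 1.0 / 3.6

def time_to_reach_s(distance_m, speed_ms):
    """Seconds until the car covers distance_m at constant speed."""
    return distance_m / speed_ms

def sweeps_available(start_m, end_m, speed_ms, scan_rate_hz):
    """LIDAR sweeps completed while closing from start_m to end_m (fractional)."""
    return (start_m - end_m) / speed_ms * scan_rate_hz

def distance_during_latency_m(latency_s, speed_ms):
    """Distance travelled while perception/analysis is still running."""
    return latency_s * speed_ms

speed_ms = 60 * KMH_TO_MS  # assumption: constant speed, no early braking

print(f"time to impact from 50m: {time_to_reach_s(50, speed_ms):.1f}s")
print(f"sweeps from 50m to 40m at 10Hz: {sweeps_available(50, 40, speed_ms, 10):.1f}")
print(f"distance covered in 400ms: {distance_during_latency_m(0.4, speed_ms):.1f}m")
```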

|

@bradtem do we have any info about the upward slope? I can't get that info from Google Maps. But if the slope remains constant up to the pedestrian, it would hardly change the output, as the car would already be following the slope.

|

@bradtem I agree, for a full stop before the impact the car needs to act (start to brake) at around 25m. @mgschwan I found this topo map, which may help a bit: And this one:

|

The Tempe weather on March 18 (the accident happened at 10PM): There was no wind around 10PM, temp 15C, humidity 25%, visibility 16km:

|

Here is a scan from a distance of 34 meters. I can't do an animation in VR, but Sketchfab has a VR mode, so if you have a headset you can step into the scan.

|

@mgschwan thanks! |

|

Looks good, and confirms what has been said by Velodyne itself and everybody else. No way this LIDAR doesn't readily spot the pedestrian. Now we have to wait for more data to see what failed. |

|

A very nice video with object detection: Motion-based Detection and Tracking in 3D LiDAR Scans. I'll try to see how we can run this kind of algorithm in BlenSor and the CARLA simulator. Besides understanding and reproducing the Uber accident scenario (more accurately once we get the car's onboard sensor data), this exercise would help us build tests and simulate different (edge case) scenarios for the OSSDC Initiative.

|

This looks quite promising as well: https://www.youtube.com/watch?v=0Q1_nYwLhD0 But I think 3-year-old papers are already out of date in this fast-moving field. If you have working implementations of those algorithms, getting simulated data from BlenSor into them probably works through a series of PCD or PLY files. Alternatively, I think I can create a ROS bag file to replay the data for algorithms that work directly as a ROS node.
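
As a starting point for the PCD route, here is a minimal sketch of a reader for ASCII PCD files plus an axis-aligned bounding box, which also gives the dimensions asked for earlier in the thread. It assumes `DATA ascii` with `x`, `y` and `z` fields; binary PCD files are not handled, and the three sample points are made up for illustration, not taken from the BlenSor scans.

```python
def parse_ascii_pcd(text):
    """Return a list of (x, y, z) tuples from the text of an ASCII PCD file."""
    fields, points, in_data = [], [], False
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        if not in_data:
            key, _, value = line.partition(" ")
            if key == "FIELDS":
                fields = value.split()
            elif key == "DATA":
                if value.strip() != "ascii":
                    raise ValueError("only ASCII PCD is handled in this sketch")
                in_data = True
            continue
        row = dict(zip(fields, line.split()))
        points.append((float(row["x"]), float(row["y"]), float(row["z"])))
    return points

def bounding_box(points):
    """Axis-aligned bounding box as ((min_x, min_y, min_z), (max_x, max_y, max_z))."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))

# Made-up sample: three returns on a target roughly 34m ahead.
SAMPLE_PCD = """# .PCD v0.7 - Point Cloud Data file format
VERSION 0.7
FIELDS x y z
SIZE 4 4 4
TYPE F F F
COUNT 1 1 1
WIDTH 3
HEIGHT 1
VIEWPOINT 0 0 0 1 0 0 0
POINTS 3
DATA ascii
34.0 -0.5 0.0
34.1 0.4 1.7
34.2 0.0 0.9
"""

cloud = parse_ascii_pcd(SAMPLE_PCD)
low, high = bounding_box(cloud)
print(len(cloud), "points, box from", low, "to", high)
```

For a series of frames, the same function could be called once per file and the per-frame point counts compared across distances.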

|

@mgschwan how hard would it be to write a ROS LIDAR/camera publisher in BlenSor? That way we could easily create ROS bags and use it in live scenarios too. Take a look at the CARLA (https://github.com/carla-simulator/carla) and TrueVision (https://www.truevision.ai/) simulators, both built on game engines (CARLA on Unreal Engine; TrueVision, I believe, on Unity). If we could build similar features in a fully open source simulator based on Blender (with BlenSor as the base), that would be super! Here is a more recent work with neural-net-based detection: Vote3Deep: Fast Object Detection in 3D Point Clouds Using Efficient Convolutional Neural Networks. We will try to integrate something like this in Colaboratory, like we did with other CNN-based image detection/segmentation methods: Try live: SSD object detection, Mask R-CNN object detection and instance segmentation, SfMLearner depth and ego motion estimation, directly from your browser!

|

Thanks @bradtem for this article: How does a robocar see a pedestrian and how might Uber have gone wrong? |

|

Here is the link to a bag file with the 20Hz simulation. http://www.blensor.org/pedestrian_approach.html Can anyone with a running obstacle detection algorithm check whether the bag file works? I created it inside a ROS Docker container, so I can't run a GUI to check if the data makes sense.

|

A great update by @bradtem:

|

|

Some extra details here:

|

Collect datasets from similar situations and analyse them to help everyone understand what the car should have seen and what could have been done to avoid the accident.

Help us obtain the dataset from Uber SDC involved in the accident, at least 3 min before and 1 min after the impact (this is a reply to the police tweet with the video from the accident):

https://twitter.com/GTARobotics/status/976628350331518976

A few initial pointers to accident info:

The Google Maps StreetView link where the accident happened:

642 North Mill Avenue, Tempe, Arizona, USA

https://goo.gl/maps/wTDdCvSzc522

Brad Templeton analysis of the accident:

https://twitter.com/GTARobotics/status/976726328488710150

https://twitter.com/bradtem/status/978013912359555072

Experts Break Down the Self-Driving Uber Crash

https://twitter.com/GTARobotics/status/978025535807934470?s=09

Experts' view that LIDAR should have detected the person from far away:

https://twitter.com/GTARobotics/status/977764787328356352

This is the moment when we decide that human lives matter more than cars

https://www.curbed.com/transportation/2018/3/20/17142090/uber-fatal-crash-driverless-pedestrian-safety

Uber self-driving system should have spotted woman, experts say

http://www.cbc.ca/beta/news/world/uber-self-driving-accident-video-1.4587439

IIHS shows the Volvo XC90 with a range just under 250 feet (76 meters) with "low beams" on!

https://twitter.com/GTARobotics/status/977995274122682368

Help us get companies that currently test SDCs to provide datasets from their own cars in situations similar to the accident:

https://twitter.com/GTARobotics/status/977773180344512512

Let's also capture current SDC sensor configurations/specs in:

https://github.com/OSSDC/OSSDC-Hacking-Book/wiki

Join the discussions on OSSDC Slack at http://ossdc.org
